AI Meets the Metaverse: Teachable AI Agents Living in Virtual Worlds

by Lifeboat Foundation Scientific Advisory Board member Ben Goertzel.

Overview

Online virtual worlds have the power to accelerate and catalyze the development of artificial general intelligence (AGI). As AGIs involved in this metaverse become progressively more intelligent from their interaction with the social network of human beings and reach human-level intelligence (the Singularity), they will already be part of the human social network. If we build them right and teach them right, they will greet us with open arms.

Virtual Worlds as the Catalyst for an AI Renaissance

The AI field started out with grand dreams of human-level artificial general intelligence. During the last half-century, enthusiasm for these grand AI dreams — both within the AI profession and in society at large — has risen and fallen repeatedly, each time with a similar pattern of high hopes and media hype followed by overall disappointment. Throughout these fluctuations, though, research and development have steadily advanced on various fronts within AI and allied disciplines.

Recently, in the first years of the 21st century, AI optimism has been on the rise again, both within the AI field and in the science and technology community as a whole. One possibility is that this is just another fluctuation — another instance of excessive enthusiasm and hype to be followed by another round of inevitable disappointment (see McDermott, 2006 for an exposition of this perspective). Another possibility is that AI’s time is finally near, and what we are seeing now is the early glimmerings of a rapid growth phase in AI R&D, such as has not been seen in the field’s history to date.

I’m placing my bets on the latter, more optimistic possibility. I have many reasons for making this judgment, and in this essay I’m going to focus on one of them — online virtual worlds, and the power I see them as having to accelerate and catalyze the development of artificial general intelligence. My thesis here is that virtual worlds have the potential to serve as the “golden path” to advanced AI, so that the first powerful nonhuman intelligences on Earth are likely to be resident in virtual worlds such as Second Life or its descendants.

This is a hypothesis I’m trying to actualize via my own current work using the Novamente Cognition Engine to control virtual agents in virtual worlds, via collaborative projects between Novamente LLC and Electric Sheep Company. But it’s also something that I see as having a fundamental importance beyond any particular project, or any particular approach to the nitty-gritty of AI design.

Adequate Interaction in Virtual Worlds Requires AGI

Whenever I talk about AI, I like to make the distinction between:

- narrow AI: programs that solve particular, highly specialized types of problems

- general AI or AGI: programs with the autonomy and self-understanding to come to grips with novel problem domains and hence solve a wide variety of problem types

Unlike an AGI, a narrow AI program need not understand itself or what it is doing, and it need not be able to generalize what it has learned beyond its narrowly constrained problem domain. My principal point in this essay is that virtual worlds are an ideal arena for the maturation of AGI software. They have the potential to provide AGIs with effective education; and, they are a commercial arena in which AGI rather than narrow-AI is going to be required for dramatic success. Most research work in the AI field today has to do with narrow AI rather than directly with AGI; but my prediction is that during the next 3–7 years, successes of AGI in the virtual-worlds domain may start to cause a major change in this bias.

A few examples may clarify the narrow-AI/AGI distinction. A narrow-AI program for playing chess, no matter how powerful, need not be able to transfer any of its strategic or methodological insights to Shogi (Japanese chess) or checkers… and probably not even to chess variants like Fischer random chess (though a human programmer might be able to take some of the knowledge implicit in a narrow-AI chess-playing program and use it to make a better program for playing other games; in this case the general intelligence exists mainly in the human being, not the programs).

A narrow-AI program for driving a car in the desert need not be able to utilize its knowledge to drive a car in the city or a motorcycle in the desert. A narrow-AI program for parsing English cannot learn any other language, whether or not the other language has a similar syntactic and semantic structure.

Or to mention an area in which I’ve done a lot of research myself, biomedical research — a narrow-AI program for diagnosing Chronic Fatigue Syndrome will always be useless for diagnosing other diseases such as, say, Chronic Upper Airway Cough Syndrome (though the same narrow-AI framework may be used by humans to create narrow-AI programs for diagnosing various sorts of diseases).

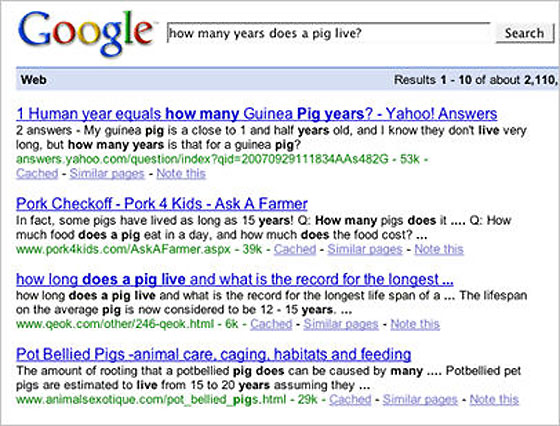

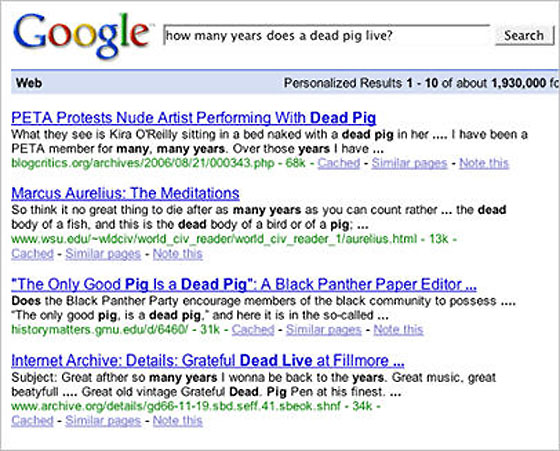

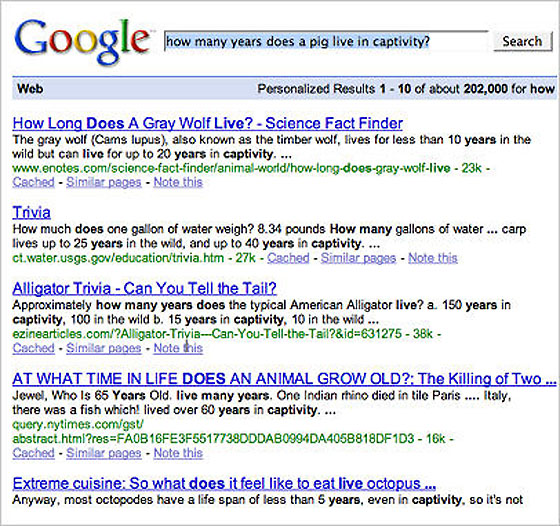

Or, finally, to give an example we can all relate to from our own experience, Figures 1 and 2 illustrate the sense in which the wonderful Google search engine fails to bridge the gap between narrow and general AI: it understands some of our queries perfectly, and in other cases fails miserably due to a lack of conceptual understanding born of the fact that it acts on the level of words rather than meanings.

Figure 1: Given a simple question such as “How many years does a pig live?” Google (as of October 2007) acts like a reasonably intelligent natural language query answering system.

Figure 2: Given a question requiring just a little bit of commonsense background knowledge, such as “How many years does a dead pig live?”, Google’s (as of October 2007) inadequacies as a question-answerer become apparent. Note that Google’s answers to “How many years does a pig live in captivity?” are about animals other than pigs (wolves, octopi, carp, etc.). Google does not have enough sense to understand that the question is about pigs. This specific problem could be remedied through judicious application of narrow-AI language-processing techniques (and startups like PowerSet are working on this), but without AGI, there will always be many examples of this sort of obtuseness.

Clearly, there are various roles for narrow AI in the metaverse (to use Neal Stephenson’s (2000) term for the universe of virtual worlds, made popular in his classic SF novel Snow Crash; see also the Metaverse Roadmap). Virtual worlds could use automated cars and Google-like search engines; they could use chatbots on the level of ALICE as virtual shopkeepers. Enterprising individuals are already moving to fill this niche, for instance Daden Limited’s Second Life chatbots.

In a similar vein, video games — some of which verge on being virtual worlds, such as MMOGs like World of Warcraft — often feature cleverly-constructed narrow AIs, serving as automated opponents or teammates. A few games have even included AIs with significant ability to learn or evolve (the Creatures games and the Black and White series being the most notable examples).

However, these sorts of narrow AIs in the metaverse are not what I want to talk about here. They’re worthwhile, but clearly, they’re not as impressive in the metaverse domain as, say, Deep Blue is in the chess domain, or an AI-learned diagnostic rule is in the medical domain. Deep Blue could beat any human at chess, and in many cases AI-learned diagnostic rules can out-diagnose any human physician. Chess and medical diagnosis are examples of problems that humans solve using general intelligence but that, in a digital computing context, have been found to be at least as amenable to highly specialized narrow-AI techniques as to AGI methods.

But the metaverse presents different sorts of challenges. Narrow-AI based chatbots and automated virtual car drivers and warfighters are drastically inferior in functionality to avatars carrying out similar functions but controlled by human beings. Apparently, social interaction in virtual worlds is a problem domain that requires general intelligence on roughly the human level, and is not tractably amenable to narrow-AI techniques.

Adequate interaction in virtual worlds, in my view, is very likely to require powerful AGI systems — and this hypothesis is most interesting to contemplate in conjunction with the observation that virtual worlds seem to provide an ideal environment for the creation and maturation of powerful AGI systems.

As I noted above (and as many others have observed as well; see e.g. J. Storrs Hall’s 2006 book Beyond AI), the AI research community to date, in both academia and industry, has focused largely on narrow AI. But in recent years this has already been shifting a bit toward AGI, as evidenced by an increasing number of conference special sessions, journal special issues, and edited volumes focused on AGI (Cassimatis, 2006; Goertzel and Wang, 2007).

As virtual worlds become increasingly important, I believe we will see a more dramatic shift in the research community toward the investigation of AGI systems and related scientific issues. This is because, I believe, the embodiment of AGI systems in virtual worlds is going to lead to palpably exciting results during the next few years: results that are exciting on multiple levels, scientifically, visually, and in terms of human emotions and social systems.

While the scientific community can be stubborn, AI science like any other kind is ultimately empirically based, and the minds of all but the most hidebound scientists can be shifted by dramatic observable results. My prediction is that “AI in virtual worlds” may well serve as the catalyst that refocuses the AI research community on the grand challenge of creating AGI at the human level and beyond, which was after all the vision on which the AI field was founded.

Virtual-World Embodiment as a Path to Human-Level AGI

I often say there are four key aspects to creating a human-level AGI:

- Cognitive architecture (the overall design of an AGI system: what parts does it have, how do they connect to each other)

- Knowledge representation (how does the system internally store declarative, procedural, and episodic knowledge; and how does it create its own representation for knowledge of these sorts in new domains it encounters)

- Learning (how does it learn new knowledge of the types mentioned above; and how does it learn how to learn, and so on)

- Teaching methodology (how is it coupled with other systems so as to enable it to gain new knowledge about itself, the world, and others)

Here I’m going to focus on the fourth of these. For my views on the other three aspects, you are directed to references such as (Goertzel, 2006, 2007; Goertzel et al, 2004) and to a prior article of mine on KurzweilAI.net. My main point here is to suggest that virtual worlds present unprecedented and unparalleled opportunities for AGI teaching methodology — which, combined with appropriate solutions for the other three key aspects of the AGI problem, may have a catalytic effect and accelerate progress toward AGI dramatically.

From an AI theory perspective, virtual worlds may be viewed as one possible way of providing AI systems with embodiment. The issue of the necessity for embodiment in AI is an old one, with great AI minds falling on both sides of the debate. The classic GOFAI systems (Good Old-Fashioned AI; see Crevier, 1993) are embodied only in a very limited sense; whereas Rodney Brooks (1999) and others have argued for real-world robotic embodiment as the golden path to AGI.

My own view is somewhere in the middle: I think embodiment is very useful though probably not strictly necessary for AGI, and I think that at the present time, it is probably more generally worthwhile for AI researchers to spend their time working with virtual embodiments in digital simulation worlds, rather than physical robots. Toward that end the focus of my current AGI research — which I’ll discuss more in a few paragraphs — involves connecting AI learning systems to virtual agents in virtual worlds, including Second Life.

The notion of virtually embodied AI is by no means a new one, and can be traced back at least to Winograd’s (1972) classic SHRDLU system. However, technology has advanced a long way since SHRDLU’s day, and the power of virtual embodiment to assist AI is far greater in these days of Second Life, World of Warcraft, HiPiHi, Creatures, Club Penguin, and the like.

In 2004 and 2005, my colleagues and I experimented with virtual embodiment in simple game-engine type domains, customized for AGI development (see Figure 3), which has advantages in terms of the controllability of the environment; but I am now leaning toward the conclusion that the greater advantage is to be had by making use of the masses of potential AGI teachers present in commercial virtual worlds.

Figure 3: The Novamente Cognition Engine controlling a simple embodied agent in a 3D simulation world. The AI controls the smaller agent and a human controls the larger agent. This screenshot is from a simulation in which the human is trying to teach the AI the Piagetian concept of “object permanence”, something that human babies typically learn during their first year of life. In the overall experiment from which this screenshot is drawn, the baby AI is supposed to learn that when a toy has been placed inside a box, it tends to stay there even after the lid of the box is closed, so that it will still be there when the lid of the box is opened again.

Virtually embodied AGI in virtual worlds may take many different forms, for instance (to name just a handful of examples):

- ambient wildlife

- virtual pets (see Figure 4 for a narrow-AI virtual pet currently for sale in Second Life)

- virtual babies for virtual-world residents to take care of, love, and teach (Figure 8)

- virtual shopkeepers

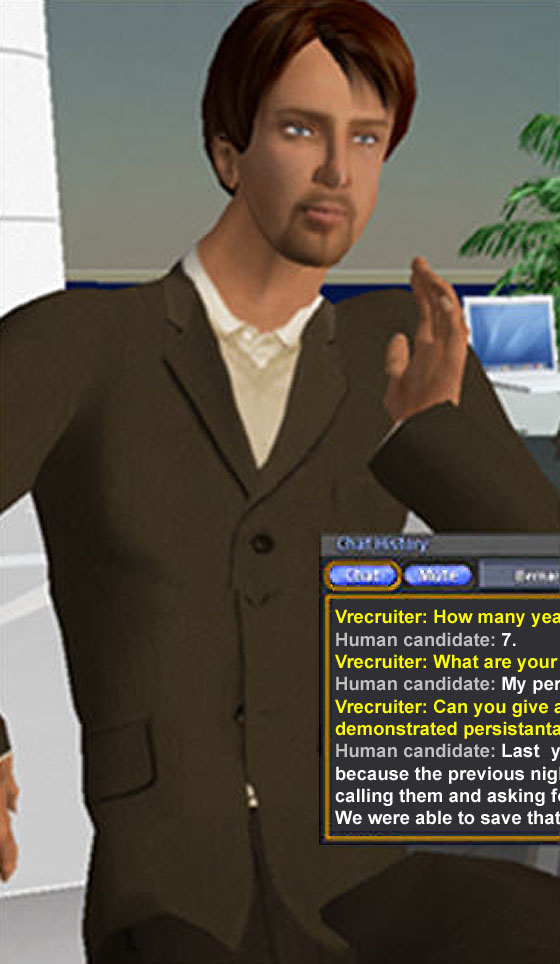

- virtual job recruiters (see Figure 9 for a visual depiction; and note that dozens of real-world companies are now using human-controlled Second Life avatars to do job recruiting)

- digital twins, imitating users’ avatars

Figure 4: A virtual dog currently for sale in the Second Life virtual world, on the sim Pawaii, by D&D Dogs. The dog carries out a fixed repertoire of tricks, rather than learning new behaviors, hence qualifying it as a “narrow AI” (to the extent that it is intelligent at all).

To concretely understand the potential power of virtual embodiment for AGI, in this essay I’ll focus mostly on just one possibility — a potential project my software company Novamente LLC has been considering undertaking sometime during the next few years: a virtual talking parrot. But all of the possibilities mentioned above are important and interesting, plus many others, and there is much to be gained by exploring the detailed implications they lead to.

At the moment Novamente, in collaboration with Electric Sheep Company, is experimenting with simpler virtual animals in virtual worlds — nonlinguistic animals that can carry out spontaneous behaviors while seeking to achieve their own goals [Figure 5] and can also specifically be trained by human beings to carry out novel tricks and other behaviors [Figure 6] (which were not programmed into them, but rather must be learned by the AI on the fly).

This current experimental work will be used as the basis of a commercial product to be launched sometime in 2008. These simpler virtual animals are an important first step, but I think the more major leap will be taken when linguistic interaction is introduced into the mix, something that, technologically, is not at all far off. Take a simpler virtual animal and add a language engine, integrated in the appropriate way (and Novamente LLC, along with many other research groups, already has a fairly powerful language engine)… and you’re on your way.

Of course a virtual animal with a language engine could be concretized many different ways but — inspired in part by Irene Pepperberg’s (2000) groundbreaking work teaching an actual parrot complex things like grammar and arithmetic — the specific scenario I’ve thought about most is a virtual talking parrot.

Figure 5: Screenshot of a prototype of the virtual animals being created for virtual worlds by Electric Sheep Company and Novamente LLC. This prototype dog is being experimented with in Second Life, and is illustrating a spontaneous behavior: pursuing a cat.

Figure 6: Screenshot of a prototype of the virtual animals being created for virtual worlds by Electric Sheep Company and Novamente LLC. This prototype dog is being experimented with in Second Life, and is being instructed by an avatar controlled by a human. The human is teaching the dog the command “kick the ball”. This is an example of flexible learning, in that the set of commands the dog can learn is not limited to a set of preprogrammed tricks, but is quite flexible, limited only by the human teacher’s ability to dream up tricks and show them to the dog, and the dog’s intelligence (the latter provided by the Novamente Cognition Engine).

Imagine millions of talking parrots spread across different online virtual worlds — all communicating in simple English. Each parrot has its own local memories, its own individual knowledge and habits and likes and dislikes — but there’s also a common knowledge-base underlying all the parrots, which includes a common knowledge of English.

Figure 7: A virtual parrot in Second Life. Right now virtual birds in Second Life don’t display intelligent non-verbal behaviors, let alone possess the capability of speech. But virtual parrots that talk would seem a very natural medium via which AIs may progressively gain greater and greater language understanding.

Next, suppose that an adaptive language learning algorithm is set up (based on one of the many available paradigms for such), so that the parrot-collective may continually improve its language understanding based on interactions with users. If things go well, then the parrots will get smarter and smarter at using language as time goes on. And, of course, with better language capability will come greater user appeal.
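As a rough illustration of the arrangement described above (each parrot keeping its own memories while all parrots draw on one common knowledge base), the following Python sketch may help. It is not the Novamente design, which this essay does not detail; the class names `SharedKnowledge` and `Parrot`, the phrase-counting scheme, and the `min_count` threshold are all hypothetical choices made only for this example.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class SharedKnowledge:
    """Hypothetical knowledge base common to every parrot."""
    phrase_counts: Counter = field(default_factory=Counter)

    def observe(self, phrase: str) -> None:
        # Every interaction, from any parrot, updates the common pool.
        self.phrase_counts[phrase.lower()] += 1

    def knows(self, phrase: str, min_count: int = 2) -> bool:
        # Assumption: a phrase counts as "known" once it has been heard
        # at least min_count times across the whole parrot collective.
        return self.phrase_counts[phrase.lower()] >= min_count


@dataclass
class Parrot:
    """Hypothetical parrot: private memories plus a shared knowledge base."""
    name: str
    shared: SharedKnowledge
    local_memory: list = field(default_factory=list)

    def hear(self, speaker: str, phrase: str) -> None:
        self.local_memory.append((speaker, phrase))  # individual experience
        self.shared.observe(phrase)                  # collective learning


shared = SharedKnowledge()
polly = Parrot("Polly", shared)
coco = Parrot("Coco", shared)

# Two different users talk to two different parrots.
polly.hear("alice", "Fetch my hat please")
coco.hear("bob", "fetch my hat please")

print(shared.knows("fetch my hat please"))  # phrase heard by the collective twice
print(polly.local_memory)                   # Polly only remembers her own episode
```

The point of the split is that what one parrot hears benefits every parrot through the shared store, while each parrot's episodic history, and hence its individual personality, stays its own.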

The idea of having an AI’s brain filled up with linguistic knowledge via continual interaction with a vast number of humans is very much in the spirit of the modern Web.

Wikipedia is an obvious example of how the “wisdom of crowds”, when properly channeled, can result in impressive collective intelligence. Google is ultimately an even better example: the PageRank algorithm at the core of Google’s technical success in search is based on combining information from the Web links created by many millions of Website creators. And the intelligent targeted advertising engine that makes Google its billions of dollars is based on mining data created by the pointing and clicking behavior of the one billion Web users on the planet today.

Like Wikipedia and Google, the mind of a talking-parrot tribe instructed by masses of virtual-world residents will embody knowledge implicit in the combination of many, many people’s interactions with the parrots.
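To make the PageRank example more concrete, here is a minimal Python sketch of the textbook power-iteration form of the algorithm, in which each page's score is built up from the links other people have made to it. This is a simplified illustration, not Google's production system; the toy link graph, the damping value of 0.85, and the fixed iteration count are assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified power-iteration PageRank.

    links maps each page to the list of pages it links to.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)

    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on in equal shares to the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: three site creators all chose to link to "c".
toy_web = {
    "a": ["c"],
    "b": ["c"],
    "c": ["a"],
    "d": ["c"],
}
ranks = pagerank(toy_web)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

No single link author decides that "c" is important; the ranking emerges from combining many independent linking decisions, which is the sense in which the result reflects the crowd's knowledge.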

Another thing that’s fascinating about virtual-world embodiment for language learning is the powerful possibilities it provides for disambiguation of linguistic constructs, and contextual learning of language rules. Michael Tomasello (2003), in his excellent book Constructing a Language, has given a very clear summary of the value of social interaction and embodiment for language learning in human children.

For a virtual parrot, the test of whether it has used English correctly, in a given instance, will come down to whether its human friends have rewarded it, and whether it has gotten what it wanted. If a parrot asks for food incoherently, it’s less likely to get food — and since the virtual parrots will be programmed to want food, they will have motivation to learn to speak correctly. If a parrot interprets a human-controlled avatar’s request “Fetch my hat please” incorrectly, then it won’t get positive feedback from the avatar — and it will be programmed to want positive feedback.
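The reward-driven loop described above can be sketched in a few lines of Python. The sketch swaps in a standard epsilon-greedy bandit learner purely for illustration; it is not claimed to be the learning method used in the Novamente Cognition Engine. The class `ParrotLearner`, the function `human_feedback`, the candidate utterances, and the exploration rate are all hypothetical.

```python
import random


class ParrotLearner:
    """Hypothetical learner that tracks the average reward of each utterance."""

    def __init__(self, utterances, explore_rate=0.2, seed=0):
        self.values = {u: 0.0 for u in utterances}  # estimated reward per utterance
        self.counts = {u: 0 for u in utterances}    # how often each one was tried
        self.explore_rate = explore_rate
        self.rng = random.Random(seed)

    def choose(self):
        # Sometimes try a random utterance; otherwise use the best one so far.
        if self.rng.random() < self.explore_rate:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def learn(self, utterance, reward):
        # Incremental running average of the rewards seen for this utterance.
        self.counts[utterance] += 1
        n = self.counts[utterance]
        self.values[utterance] += (reward - self.values[utterance]) / n


def human_feedback(utterance):
    """Stand-in for a human teacher: only the coherent request earns food."""
    return 1.0 if utterance == "food please" else 0.0


parrot = ParrotLearner(["please hat food", "food please", "squawk fetch"])
for _ in range(500):
    said = parrot.choose()
    parrot.learn(said, human_feedback(said))

best = max(parrot.values, key=parrot.values.get)
print(best, parrot.values)
```

In this toy setting the incoherent requests never earn food, so their estimated value stays at zero, and once exploration stumbles on the coherent request the parrot keeps using it. A real system would of course need to generalize across utterances rather than score a fixed list.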

The intersection between linguistic experience and embodied perceptual/active experience is one thing that makes the notion of a virtual talking parrot very fundamentally different from the “chatbots” on the Internet today. The other major difference, of course, is the presence of learning — chatbots as they currently exist rely almost entirely on hard-coded lists of expert rules. But the interest of many humans in interacting with chatbots suggests that virtual talking parrots or similar devices would be likely to meet with a large and enthusiastic audience.

Yes, humans interacting with parrots in virtual worlds can be expected to try to teach the parrots ridiculous things, obscene things, and so forth. But still, when it comes down to it, even pranksters and jokesters will have more fun with a parrot that can communicate better, and will prefer a parrot whose statements are comprehensible.

And of course parrots are not the end of the story. Once the collective wisdom of throngs of human teachers has induced powerful language understanding in the collective bird-brain, this language understanding (and the commonsense understanding coming along with it) will be useful for many, many other purposes as well. One such purpose is humanoid avatars: both human-baby avatars that may serve as more rewarding virtual companions than parrots or other virtual animals, and language-savvy human-adult avatars serving various useful and entertaining functions in online virtual worlds and games.

Once AIs have learned enough that they can flexibly and adaptively explore online virtual worlds (and the Internet generally) and gather information according to their own goals using their linguistic facilities, it’s easy to envision dramatic acceleration in their growth and understanding.

Figure 8: A virtual baby in Second Life. Right now Second Life babies are essentially just visuals, little more animated and responsive than articles of clothing or physical objects. But attaching AI to virtual babies may yield something much more interesting: the capability for humans to help baby AGIs ascend through the stages of cognitive development, by interacting with them in virtual worlds.

A baby AI has a lot of disadvantages compared to a baby human being: it lacks the intricate set of inductive biases built into the human brain, and it also lacks a set of teachers with a similar form and psyche to it… and for that matter, it lacks a really rich body and world. However, the presence of thousands to millions of teachers constitutes a large advantage for the AI over human babies. And a flexible AGI framework will be able to effectively exploit this advantage.

Google doesn’t emulate the human brain, nor does Wikipedia, yet in a sense they both display considerable intelligence. It seems possible to harness the “wisdom of crowds” phenomenon underlying these Internet phenomena for AGI, enabling AGI systems to learn from vast numbers of appropriately interacting human teachers.

There are no proofs or guarantees about this sort of thing, but it does seem at least plausible that this sort of mechanism could lead to a dramatic acceleration in the intelligence of virtually-embodied AGI systems, and maybe even on a time-scale faster than the pathway to Singularity-enabling AGI that Ray Kurzweil has envisioned, where brain-scanning and hardware advances lead to human-brain emulation, which then leads on to more general and powerful transhuman AGIs.[1]

In the Novamente Cognition Engine design we have charted out a specific engineering pathway via which we believe this can happen — what’s needed is more time spent on software implementation and various related technical computer science problems — and the human intelligence of a large number of virtual world residents, interacting with Novamente-powered AGI systems.

Figure 9: Intelligent virtual agents in Second Life will have applications beyond entertainment. This image shows a prototype virtual agent designed to serve as a job interviewer, providing “initial screening and filtering interviews” of job candidates, and then passing on the best ones to human interviewers. Job interviewer agents are not yet ready for deployment, and creating them based on the Novamente Cognition Engine will still require a significant amount of development work; but this sort of application is quite palpable and plausible in the near future. (Editor’s note: This “intelligent virtual agent” looks suspiciously like Bruce Klein.)

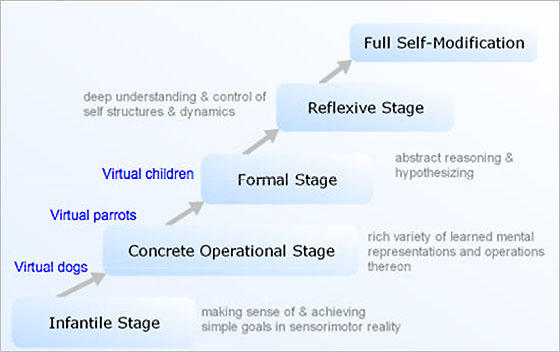

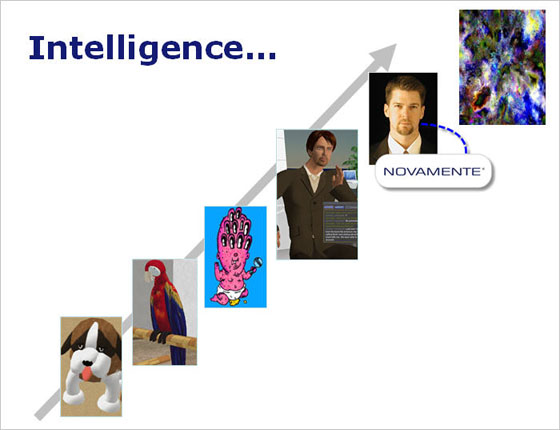

One way to conceptualize this advance is in terms of Jean Piaget’s famous stages of human cognitive development, as illustrated in Figure 10. Figure 11 presents a more whimsical version of Piaget, tied in specifically with the notion of AGI in virtual worlds.

Figure 10: For AGI systems that are at least roughly humanlike in their cognitive architecture, Piaget’s theory of the stages of cognitive development is highly relevant. For example, the prototype virtual animals that Electric Sheep Company and Novamente LLC are currently experimenting with in Second Life are somewhere between the Infantile and Concrete Operational stages (what Piaget sometimes called Pre-Operational). Via interacting with humans and each other in virtual worlds, virtual agents may ascend the ladder of development, eventually reaching stages of advancement inaccessible to humans, via their ability to more fully introspect and self-improve.

Figure 11: A visual depiction of the forms that virtually-embodied AGIs might take as they ascend the Piagetian ladder. Once virtually embodied AGIs achieve roughly human-level intelligence, it may be worthwhile to link human minds and brains with them in various ways, including potentially direct neural interconnects. The ultimate stages go beyond anything we can imagine, as illustrated by the final graphic shown, an incomprehensibly superhuman supermind drawn from the online virtual world Orion’s Arm.

Conclusion (Hi Ho, Hi Ho, to the Metaverse We Go)

I am one of the minority of the human race who believes that a technological Singularity is almost surely coming. And, as this essay hopefully has made clear, I am also part of an even smaller minority who believes that the most probable path for the Singularity’s arrival involves AGI in the online metaverse. I suspect that both of these minorities will become larger and larger as the next decade unfolds.

I certainly don’t claim the metaverse is the only possible route to Singularity. The rate of progress of radical ideas and technologies is famously hard to predict. Nanotech or quantum computing — to name just two possible technologies — could have a tremendous, Singularity-enabling advance any year now, leapfrogging ahead of AGI and/or enabling advanced AGI in ways we cannot now predict or comprehend. These possibilities need to be taken very seriously.

And I do attach a lot of plausibility to the scenario that Ray Kurzweil has elaborated in The Singularity Is Near and other writings, in which software-based human brain emulation is the initial route toward Singularity-enabling AGI.

However, my own educated guess is that we will achieve human-level AGI first via other means, well before the brain-scanning route gets us there. Human uploads as Kurzweil projects will come — we will upload ourselves into virtual bodies, living in virtual worlds. But when we get there, I suggest, AGI systems will be living there already — and if we build them right and teach them right, they will greet us with open arms!

Singularity-wise, one interesting aspect of the virtual-worlds route to AGI has to do with the high level of integration it implies will occur between AGIs and human society, coming right out of the gate. Ray Kurzweil has suggested that, by the time of the Singularity, humans may essentially be inseparable from the AI-incorporating technological substrate they have created. The virtual-agents pathway makes very concrete one way in which this integration might happen — in fact it might be the route by which AGI evolves in the first place.

Right now many people consider themselves inseparable from their cellphones, search engines, and so forth. Suppose that in the Internet of 2015, websites and word processors are largely replaced by some sort of 3D immersive reality — a superior Second Life with hardware and software support far beyond what exists now in 2007 — and that artificially intelligent agents are a critical part of this “metaversal” Internet.

Suppose that the AGIs involved in this metaverse become progressively more and more intelligent, year by year, due to their integration in the social network of human beings interacting with them. When the AGIs reach human-level intelligence, they will be part of the human social network already. It won’t be a matter of “us versus them”; in a sense it may be difficult to draw the line.

Singularity-scenario-wise, this sort of path to AGI lends itself naturally to what Stephan Bugaj and I, in The Path to Posthumanity: 21st Century Technology and Its Radical Implications for Mind, Society and Reality, called the “Singularity Steward” scenario, in which AGI systems interact closely with human society to guide us through the dramatic transitions that are likely to characterize our future.

References

- Brooks, Rodney (1999). Cambrian Intelligence: The Early History of the New AI. MIT Press.

- Cassimatis, N.L., E.K. Mueller, P.H. Winston, Eds. (2006). Special Issue of AI Magazine on Achieving Human-Level Intelligence through Integrated Systems and Research. AI Magazine 27(2).

- Crevier, Daniel (1993). AI: The Tumultuous Search for Artificial Intelligence. New York, NY: BasicBooks.

- Goertzel, Ben (2007). Virtual Easter Egg Hunting: A Thought-Experiment in Embodied Social Learning, Cognitive Process Integration, and the Dynamic Emergence of the Self. In Advances in Artificial General Intelligence, Ed. by Ben Goertzel and Pei Wang:36–54. Amsterdam: IOS Press.

- Goertzel, Ben (2006). Patterns, Hypergraphs and Embodied General Intelligence. Proceedings of International Joint Conference on Neural Networks, IJCNN 2006, Vancouver CA

- Goertzel, Ben, Moshe Looks and Cassio Pennachin (2004). Novamente: An Integrative Architecture for Artificial General Intelligence. Proceedings of AAAI Symposium on Achieving Human-Level Intelligence through Integrated Systems and Research, Washington DC, August 2004

- Goertzel, Ben and Stephan Bugaj (2006). The Path to Posthumanity. Academica Press.

- Goertzel, Ben and Cassio Pennachin, Eds. (2006). Artificial General Intelligence. Springer.

- Goertzel, Ben and Pei Wang, Eds. (2007). Advances in Artificial General Intelligence. IOS Press.

- Hall, J. Storrs (2006). Beyond AI. Prometheus Press.

- Kurzweil, Ray (2005). The Singularity Is Near. Viking.

- McDermott, Drew (2006). Kurzweil’s argument for the success of AI. Artificial Intelligence 170(18): 1227–1233

- Pepperberg, Irene (2000). The Alex Studies: Cognitive and Communicative Abilities of Grey Parrots. Harvard University Press.

- Stephenson, Neal (2000). Snow Crash. Spectra.

- Tomasello, Michael (2003). Constructing a Language. Harvard University Press.

- Winograd, Terry (1972). Understanding Natural Language. San Diego: Academic.

1. And personally, I find the virtual-worlds approach more compelling as an AGI researcher, in part because it gives me something exciting to work on right now, instead of just sitting back and waiting for the brain-scanner and computer-hardware engineers.