From CLUBOF.INFO

The increasing detail at which human brains can be scanned is bringing the possibility of mind-reading appliances closer and closer. Such appliances, when complete, will be non-invasive and capable of responding to our thoughts as easily as they respond to keys on a keyboard. Indeed, as emphasized in the Lifeboat Foundation’s 2013 publication, The Human Race to the Future, there may soon be appliances that are operated by thought alone, and such technology may even replace our keyboards.

It is not premature to be concerned about possible negative outcomes from this, however positive the improvement in people’s lifestyles would be. In mind-reading appliances, there are two possible dangers that become immediately obvious.

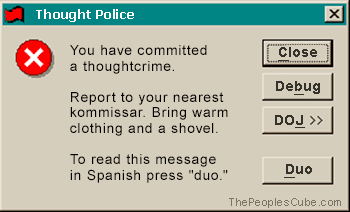

Danger 1: “Thought police”

Brain-machine interfaces have many possibilities that deserve to be explored by science. However, there are also potentially dystopian threats presented by this technology. Even technologies like personal computers, which were seen as liberating to the individual and not aligned with powerful governments, have also become windows that regimes can use to spy on their citizens.

If hardware eventually allows words to appear on screens simply because of a thought, and the appliances are still vulnerable to hacking or government pressure, does this mean minds can be read without consent? It is very likely that any technology sensitive enough to respond to our thoughts could be programmed by a regime to intercept our thoughts. Even if our hardware was not originally designed to intercept thoughts on behalf of the authorities, the hardware would already meet the requirements for any program written to intercept thoughts for policing and political repression.

The potential negative consequences of mind-reading technology are equivalent to those of “uploading”, the futuristic concept of transferring one’s mind to computers as popularized by Singularitarians, usually following the ideas of Ray Kurzweil. There is a real threat that a technological singularity, as depicted in Kurzweil’s The Singularity is Near, could strengthen a flawed social system by giving the authorities the intrusive ability to monitor what they see as deviant or threatening thought.

Danger 2: Accidents

It may be that manual and verbal controls, still depicted in science fiction as gifts that will be with us for many centuries and taken with humanity’s distant descendants to the stars, are just more practical than mind-reading. Even when limited to practical uses like controlling a vehicle or appliance, mind-reading may simply be destined to take away convenience rather than create it for the vast majority of its customers.

Even if driving a car by thought can be made safe, the use of aircraft or weapons systems via mind-reading would certainly be more problematic. When the stakes are high, most of us already agree that it is best not to entrust the responsibility to one person’s thoughts. By not using the body and voice when performing a task, and by not allowing others to intervene in your actions, the chances of an accident are probably always going to be raised. Although we like to think of our own brains as reliable and would probably be eager to try out mind-reading control over our vehicles, we do not think of other people’s brains that way, and would be troubled by the lack of any window for intervention in the other person’s actions.

Possible accidents when piloting a complex machine like a helicopter or manning a dangerous weapon may be averted by an experienced hand preventing someone from taking the wrong action. Considering this, old-fashioned manual controls may already be destined to be superior to any mind-reading controls and more attuned to the challenges faced by humans. We evolved to talk and physically handle challenges. Given this fact, removing all the remaining physical challenges of performing a task may only complicate your ability to perform effectively, or result in a higher tendency to err or take rash actions by subverting the ability of others to challenge you as you act.

Recommendation: we should avoid strengthening an undesirable social system

I hope that these objections to mind-reading may be proven invalid, with time. It is certainly likely that some people, such as those with physical disabilities, are going to rely on improvements in mind-reading technology to restore their lives. However, there has been, and continues to be, a very definite danger that a flawed social system and government are going to seek out technologies that can make them more and more invulnerable, and this is one such technology. Any potential avenue of invulnerability of governments against their critics is unacceptable and should be challenged, just as the present excess in surveillance has been challenged.

It is important to keep reiterating that it is not the technology itself that is the source of a threat to humanity, but the myopic actions of the likely operators of that technology. Given the experience of government mass espionage, which began without the knowledge or consent of the public, concerns about other unannounced programs exploiting communication technology for “total information awareness” (TIA) are justified.

There’s no doubt that new technologies such as “cloud mind-reading” (CMR) will be attempted and used against people, whether or not the information collected is truly “correct”.

I would reasonably expect that any CMR would most likely be attempting to interpret highly synchronous brain activity, which is usually strongly correlated with information being processed by short-term memory (STM). This interpretation has only recently gained the attention of the neuroscience community at large. E.g., see some press releases on work I’ve been involved in since circa 1980: http://www.sdsc.edu/News%20Items/PR041414_short_term_memory.html

and http://ucsdnews.ucsd.edu/pressrelease/the_mechanism_of_short_term_memory , where the reporter leans on some recent info from NIH.

At least one issue presented is this: just how faithful is the info being processed by STM as a true or strong indicator of intent, or of actual thought processes along a chain of thought processes that may lead to similar or dissimilar thought processes or actions?

Since many people train their memories to string patterns of information via visual (most common), auditory or somatic (as Einstein was quoted) associations/overlaps, many people could clearly train themselves to have patterns of pretty weird associations represent their “true” thoughts, even if only for the purpose of confounding CMR. There will likely always be some counter-measures that some people can employ against such new technologies, thereby leading to even less social transparency, further decay of morality, and a world generally more like Hell?

Of course, on the “bright” side, given that CMR sensors could be employed by individuals to communicate with themselves as well as other people via languages both more personal and universal than today’s languages (numbering perhaps a couple of thousand?), great new worlds of deeper and more meaningful interactions and growth may become possible and realized.

Such familiarity developed using CMR may lead to enhanced contempt or compassion — there always are so many choices to make … :)! I think the reasonable argument always put forward concerning new technologies is that, since we really cannot predict the ratio of Good over Evil developed in the future as a direct result of applications of these technologies, we might as well go forward …

“Could Mind-reading Technology Become Harmful?” is a wondrous notion. I don’t know whether it will become harmful or not, but the technology will be more than plausible. Pre-Cog and Pre-Crime will be in place without fail.

http://lifeboat.com/blog/2014/04/white-swan

There are always fears about new technology and the potential for accidents and misuse. But that didn’t deter us from creating highways so that cars can travel at high speed, from creating high speed trains or airplanes that can potentially fall out of the sky or be hijacked. We always have added engineering and legal safeguards to prevent accidents and misuse. Misuse by the government is always an issue. Phone lines are tapped by the intelligence people on a regular basis, but should we get rid of the phones?

I think there is a tremendous danger in looking at something so radically different as mind reading or mind control of equipment and thinking that this somehow equates with someone physically turning a steering wheel or pressing a button. The idea that some manifest difference exists between a person physically doing something and thinking about doing something is plainly false.

There may be no steering wheel, not because it was replaced with a thought controller but because there is no car, or at least not in the sense we think of a car today. And people understand that, just as there are fail-safes, coded key combinations and other barriers to entry for weapons systems, those systems will not engage without an appropriate and standardized method of access.

Instead of publishing articles that say “NO” to something that is inevitable, let’s look at it from a more guided perspective to help build the new systems and protections we will need in the new world.

The future will happen but instead of turning into the Krell, we can learn from Morbius and survive.

To the Lifeboat Foundation:

I want to protect human rights. A group of people are ILLEGALLY using mind-reading technology in New York State. What actions should we take to protect privacy, and notify the appropriate authorities, to prevent this atrocity? (See Thomas Horn, Forbidden Gates, PDF.) I want to do the right thing. Please respond by email.

PirateRo, I am not saying NO to mind-reading any more than I am saying no to telephony or personal computers. No, I will continue to speculate about emerging technologies right here at the Lifeboat Foundation, a think tank created for that purpose, even if I enthusiastically agree with those emerging technologies or I invest in them. Hearing out possible criticisms and going through them would be a necessary part of legitimizing such technology.

Mind reading and writing will be a commercial success as soon as usable systems become available at a reasonable price. We can conclude this from the success of social networks, web searches and online games. A sufficient number of users will throw caution about privacy to the wind when exciting new services become available. Others will feel the need to follow in order not to be left behind.

Mind links from individuals can be achieved by various types of scanning. Mind links to individuals might require solutions like the nanoparticles described by Freeman Dyson. Direct links to our minds are likely to shape our thinking much more than conventional media, and our structure for handling these links will determine the way our societies work. Debates about this structure might benefit from a simple concept that captures the essence of the problem and indicates at least the general nature of a solution. Here is a draft for discussion.

It might be useful to imagine mind links as divided into three parts: the Interface part that either converts neural activity to electrical signals or uses electrical signals to stimulate certain neurons (think of headbands and pills to swallow), the Assistant system that converts these person-specific signals into general signals that can be communicated to others and vice versa (think of mobile phones) and the Web including other users and services (think search engines). The Interface is likely to consist of standard hardware and its drivers. The Web is open to friend and foe. The Assistant systems will be the key to control.

Let’s assume that the Assistant systems are just very smart pieces of software that have no hidden agendas. Individuals controlling their own Assistant systems could then determine what they reveal to whom and what kind of signals can reach them, making them fairly resistant to direct monitoring and subconscious influences. If the Assistant system communication is well enough encrypted to make bulk surveillance unaffordable, watchful citizens might be able to pool their resources and arrange appropriate sousveillance before their government can silence them. This would favour a stable democracy. Obviously, once authoritarian systems have gotten control of enough Assistant systems, they could shape the minds of their citizens and create a stable system as well.
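As a rough illustration of the Assistant idea, here is a minimal Python sketch of a layer that decides what is revealed to whom and which incoming signals are let through. Everything in it is an assumption made for discussion: the `Assistant` class, the signal categories ("speech", "mood", "memory"), the audience names and the sender names are hypothetical, and real neural signals would of course not arrive as tidy labelled strings.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Assistant:
    """Hypothetical Assistant layer between the Interface and the Web."""

    # Maps an audience name to the signal categories that audience may see.
    disclosure_policy: Dict[str, Set[str]] = field(default_factory=dict)
    # Senders whose signals are allowed to reach the user.
    trusted_senders: Set[str] = field(default_factory=set)

    def outbound(self, signals: Dict[str, str], audience: str) -> Dict[str, str]:
        """Return only the signals the user has chosen to reveal to this audience."""
        allowed = self.disclosure_policy.get(audience, set())
        return {kind: value for kind, value in signals.items() if kind in allowed}

    def inbound(self, sender: str, signal: str) -> Optional[str]:
        """Pass a signal on to the Interface only if the sender is trusted."""
        return signal if sender in self.trusted_senders else None


# Illustrative settings chosen by the user (all names are made up).
assistant = Assistant(
    disclosure_policy={"friends": {"speech", "mood"}, "public": {"speech"}},
    trusted_senders={"search_engine"},
)

decoded = {"speech": "hello", "mood": "calm", "memory": "private"}
public_view = assistant.outbound(decoded, "public")
friends_view = assistant.outbound(decoded, "friends")
unknown_view = assistant.outbound(decoded, "government")  # no policy entry
blocked = assistant.inbound("advertiser", "buy now")
delivered = assistant.inbound("search_engine", "result")
```

The design choice worth debating is the default: in this sketch an audience or sender with no explicit entry gets nothing, so whoever controls the two settings controls the mind link.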

“Brain-machine interfaces have many possibilities that deserve to be explored by science. However, there are also potentially dystopian threats presented by this technology”

I think the potential of dystopic threats will exist so long as we continue to accept and sustain faulty leadership (i.e., leadership that is not operating in the best interest of the species). Therefore, what we need is a technology that can enable us to discern intention/thought/emotion in a way that provides us the intel to select optimal leadership based on their subjective and/or neurological qualities, in addition to other important factors. By ensuring we always have optimal leadership, we can eliminate or greatly reduce the emergence of potential dystopic futures. Because it’s only a faulty/suboptimal leadership [that abuses/misuses technology] that could create a dystopic future in the first place.

Brain-machine interfaces might lead to much more trustworthy governments. Imagine that mind links are controlled by Assistant systems and normal users set their systems so that they reveal very little about themselves to the general public. When such a user runs as a political candidate, voters must take a high risk, because they can only go by the promises made and possible scandals reported in the media.

A candidate who would change his Assistant system to a more permissive setting would let others take a genuine glimpse at how his mind works. This would lower the voters’ risk of being misled and make this candidate more electable. It might even lead to a “race towards openness” that only slows down when it makes group leaders become too predictable for the opponents of this group.

However, trustworthy does not necessarily mean “in the best interest of the species” as citizens do not necessarily agree what that should be. Here is an example: Assume that members of a religious sect honestly believe that the world can only be saved when their religion has been established everywhere. They use selective mind links to find a good leadership team: very smart, fair according to internal rules, no trace of nepotism, certainly no hidden business interests, honest belief in the purpose of their religion, will not hesitate to use fire and sword on disbelievers, will not go soft when victory requires high sacrifices, will not spare themselves… The sect might indeed get “pure-hearted” leaders that deserve their trust, but their goals will certainly differ from those of the mainstream citizen. One can imagine similar arrangements among other political groups, and after selective mind-linking has taken out much of the intra-group friction, the inter-group clashes might become even more intense.