Three centuries after Newton described the universe through fixed laws and deterministic equations, science may be entering an entirely new phase.

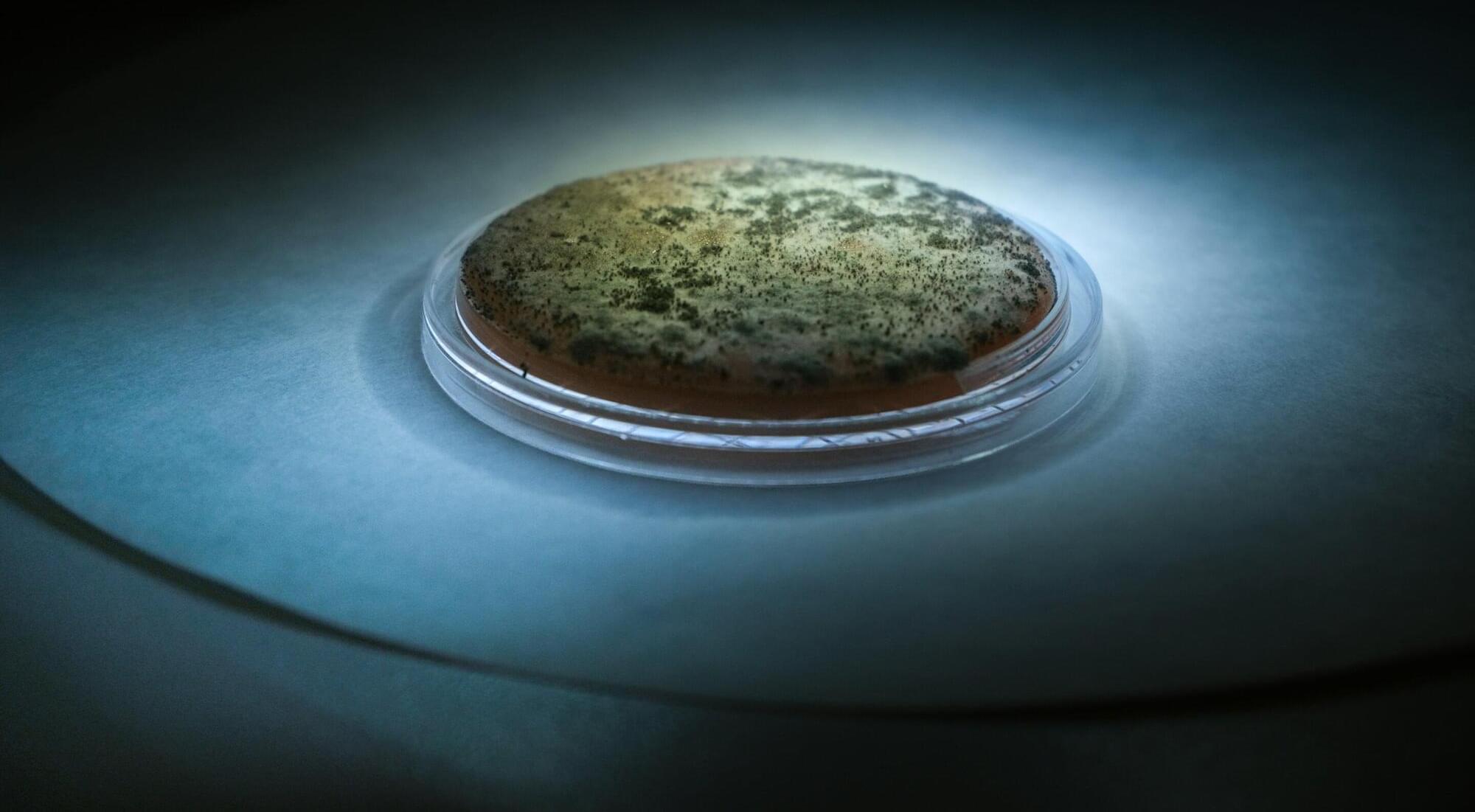

According to biochemist and complex systems theorist Stuart Kauffman and computer scientist Andrea Roli, the biosphere is not a predictable, clockwork system. Instead, it is a self-organising, ever-evolving web of life that cannot be fully captured by mathematical models.

Organisms reshape their environments in ways that are fundamentally unpredictable. These processes, Kauffman and Roli argue, take place in what they call a “Domain of No Laws.”

Their argument challenges the very foundation of scientific thought. Reality, they suggest, may not be governed by universal laws at all, and it is biology, not physics, that could hold the answers.
