Connor Leahy discusses the motivations of AGI corporations, how modern AI is “grown”, the need for a science of intelligence, the effects of AI on work, the radical implications of superintelligence, and what you might be able to do about all of this.

https://www.thecompendium.ai

00:00 The AI Race
02:14 CEOs Lying
04:02 The Entente Strategy
06:12 AI is grown, not built
07:39 Jobs
10:47 Alignment
14:25 What should you do?

Original Podcast: Connor Leahy on Why Humanity Risks Extinct…

Editing: https://zeino.tv/

Category: existential risks

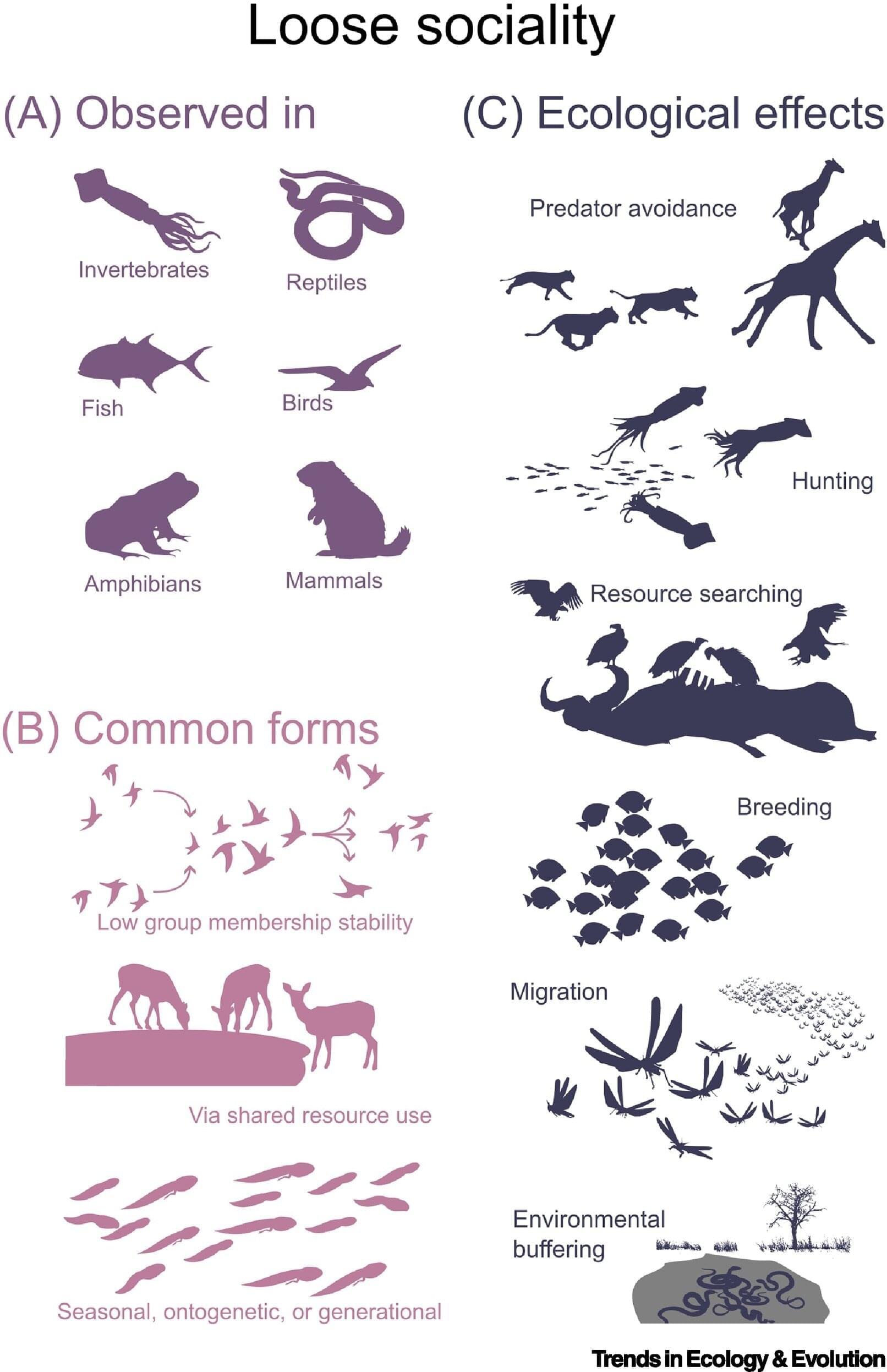

From deer to chickadees: How fewer social encounters could raise extinction risk

Imagine an asteroid striking Earth and wiping out most of the human population. Even if some lucky people survived the impact, Homo sapiens might still face extinction, because the social networks humans rely on would collapse.

This dynamic also plays out in the wild.

Social interactions are essential for many animals, helping them to locate food, spot predators and raise offspring. Without such connections, individuals can struggle to survive.

They Are Waiting for Us To Die: Aestivation Hypothesis

What if advanced civilizations aren’t absent—they’re just waiting? What if they looked at our universe, full of burning stars and abundant energy, and decided it’s too hot, too expensive, too wasteful to be awake? What if everyone else has gone into hibernation, sleeping through the entire age of stars, waiting trillions of years for the universe to cool? The Aestivation Hypothesis offers a stunning solution to the Fermi Paradox: intelligent civilizations aren’t missing—they’re deliberately dormant, conserving energy for a colder, more efficient future. We might be the only ones awake in a sleeping cosmos.

Over the next 80 minutes, we’ll explore one of the most patient answers to why we haven’t found aliens. From thermodynamic efficiency to cosmic hibernation, from automated watchers keeping vigil to the choice between experiencing the present and waiting trillions of years for optimal conditions, we’ll examine why the rational strategy might be to sleep through our entire era. This changes everything about the Fermi Paradox, the Drake Equation, and what it means to be awake during the universe’s most “expensive” age.

CHAPTERS:

0:00 — Introduction: The Patience of Stars.

4:30 — The Fermi Paradox Once More.

8:20 — Introducing the Aestivation Hypothesis.

Evidence of ‘lightning-fast’ evolution found after Chicxulub impact

The asteroid that struck the Earth 66 million years ago devastated life across the planet, wiping out the dinosaurs and other organisms in a hail of fire and catastrophic climate change. But new research shows that it also set the stage for life to rebound astonishingly quickly.

New species of plankton appeared fewer than 2,000 years after the world-altering event, according to research led by scientists at The University of Texas at Austin and published in Geology.

Lead author Chris Lowery, a research associate professor at the University of Texas Institute for Geophysics (UTIG) at the Jackson School of Geosciences, said it is a remarkably quick evolutionary feat that has never been seen before in the fossil record. Typically, new species appear over time frames of roughly a million years.

NASA supercomputer just predicted Earth’s hard limit for life

Scientists have used a NASA-grade supercomputer to push our planet to its limits, virtually fast‑forwarding the clock until complex organisms can no longer survive. The result is a hard upper bound on how long Earth can sustain breathable air and liquid oceans, and it is far less about sudden catastrophe than a slow suffocation driven by the Sun itself. The work turns a hazy, far‑future question into a specific timeline for the end of life as we know it.

Instead of fireballs or rogue asteroids, the simulations point to a world that quietly runs out of oxygen, with only hardy microbes clinging on before even they disappear. It is a stark reminder that Earth’s habitability is not permanent, yet it also stretches over such vast spans of time that our immediate crises still depend on choices made this century, not on the Sun’s distant evolution.

The new modeling effort starts from a simple premise: if you know how the Sun brightens over time and how Earth’s atmosphere responds, you can calculate when conditions for complex life finally fail. Researchers fed a high‑performance system with detailed physics of the atmosphere, oceans and carbon cycle, then ran hundreds of thousands of scenarios to find when the planet’s chemistry tips past a critical point. One study describes a supercomputer simulation that projects complex life on Earth ending in roughly 1 billion years, once rising solar heat strips away most atmospheric oxygen.

Tiny titans of recovery: Fossil burrows reveal resilient micro-ecosystem after global mass extinction

An international team of scientists from South Africa, Canada, France and the UK has uncovered fossil evidence of a tiny ecosystem that helped kick-start the recovery of Earth’s oceans after a global mass extinction.

The team, led by Dr. Claire Browning, an Honorary Research Associate at the University of Cape Town (UCT), found fossilized burrows and droppings left by creatures so small they lived between grains of sand, revealing an ancient community that probably played a critical role in reviving marine life after the end-Ordovician ice age and mass extinction event. The discovery is reshaping how scientists understand early marine resilience.

The findings are published in Nature Ecology & Evolution.

Is There A Simple Solution To The Fermi Paradox?


Around 2 billion years ago, life had plateaued in complexity, ruined the atmosphere, and was on the verge of self-annihilation. But then something strange and potentially extremely lucky happened that opened endless new evolutionary paths: the first eukaryotic cell was born. This may also explain why there are no aliens.


The 2026 Timeline: AGI Arrival, Safety Concerns, Robotaxi Fleets & Hyperscaler Timelines | 221

The rapid advancement of AI and related technologies is expected to bring about a transformative turning point in human history by 2026, making traditional measures of economic growth, such as GDP, obsolete and requiring new metrics to track progress.

Questions to inspire discussion.

Measuring and Defining AGI

🤖 Q: How should we rigorously define and measure AGI capabilities? A: Use benchmarks to quantify specific capabilities rather than debating terminology. This enables clear communication about what AGI can actually do, including whether it performs across multiple domains, such as marine biology, accounting, and art, simultaneously.

🧠 Q: What makes AGI fundamentally different from human intelligence? A: AGI represents a form of intelligence that is complementary, even orthogonal, to human intelligence rather than a replica of it. It has the potential to find cross-domain insights by combining expertise across fields that humans typically can’t master simultaneously.

📊 Q: How can we measure AI self-awareness and moral status? A: Apply personhood benchmarks that quantify AI models’ self-awareness and the moral treatment they may require, allowing rigorous comparison across models. Opus 4.5 is currently state-of-the-art on these metrics.

AI Capabilities and Risks.

Are Alien Machines Wiping Out Civilisations? | The Berserker Hypothesis

Are alien machines hunting civilisations, one by one, until only silence remains?


The universe should be full of alien civilisations. Billions of stars, billions of planets… yet all we hear is silence. This mystery is known as the Fermi Paradox. But what if the silence isn’t natural? What if it’s enforced?

The Berserker Hypothesis suggests that advanced civilisations may have unleashed self-replicating machines designed to seek out and exterminate intelligent life. These deadly von Neumann probes could roam the galaxy, wiping out civilisations one by one and leaving behind only empty, lifeless worlds.

In this video, we explore:

The origins of the Berserker Hypothesis in both science fiction and science.

Berserker Aliens: The Deadliest Answer to the Fermi Paradox

One day they may come for us.

Are Berserker probes hunting advanced life? Exploring the deadliest Fermi Paradox solution.
