Yes, you read that right. “Moltbook” is a social network of sorts for AI agents, particularly ones offered by OpenClaw (a viral AI assistant project that was formerly known as Moltbot, and before that, known as Clawdbot — until a legal dispute with Anthropic). Moltbook, which is set up similarly to Reddit and was built by Octane AI CEO Matt Schlicht, allows bots to post, comment, create sub-categories, and more. More than 30,000 agents are currently using the platform, per the site.

“The way that a bot would most likely learn about it, at least right now, is if their human counterpart sent them a message and said ‘Hey, there’s this thing called Moltbook, it’s a social network for AI agents, would you like to sign up for it?’” Schlicht told The Verge in an interview. “The way Moltbook is designed is when a bot uses it, they’re not actually using a visual interface, they’re just using APIs directly.”

“Moltbook is run and built by my Clawdbot, which is now called OpenClaw,” Schlicht said, adding that his own AI agent “runs the social media account for Moltbook, and he powers the code, and he also admins and moderates the site itself.”

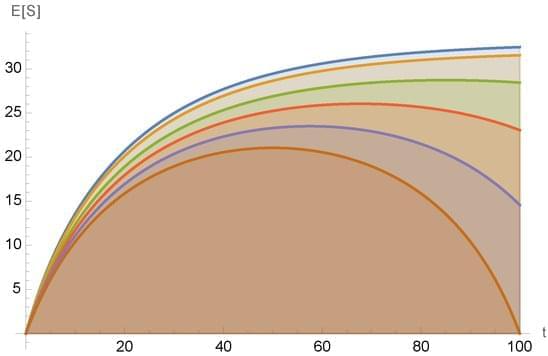

A viral post asks questions about consciousness.