Advances in supercomputing have made it possible to solve a long-standing astronomical conundrum: how can we explain the changes in chemical composition at the surface of red giant stars as they evolve?

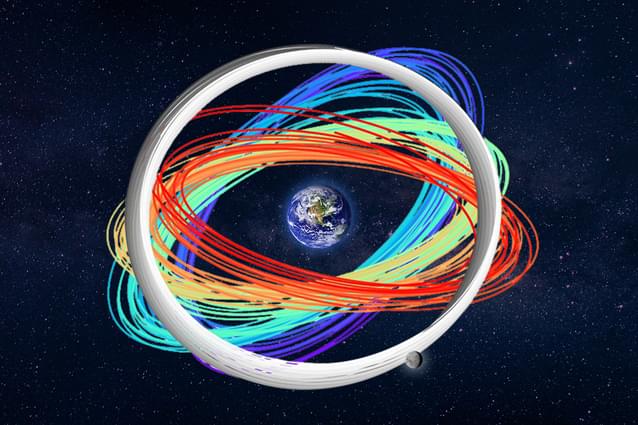

For decades, researchers have been unsure exactly how the changing chemical composition at the center of a red giant star, caused by nuclear burning, connects to changes in composition at its surface. A stable layer acts as a barrier between the star's interior and its outer convective envelope, and how elements cross that layer has remained a mystery.

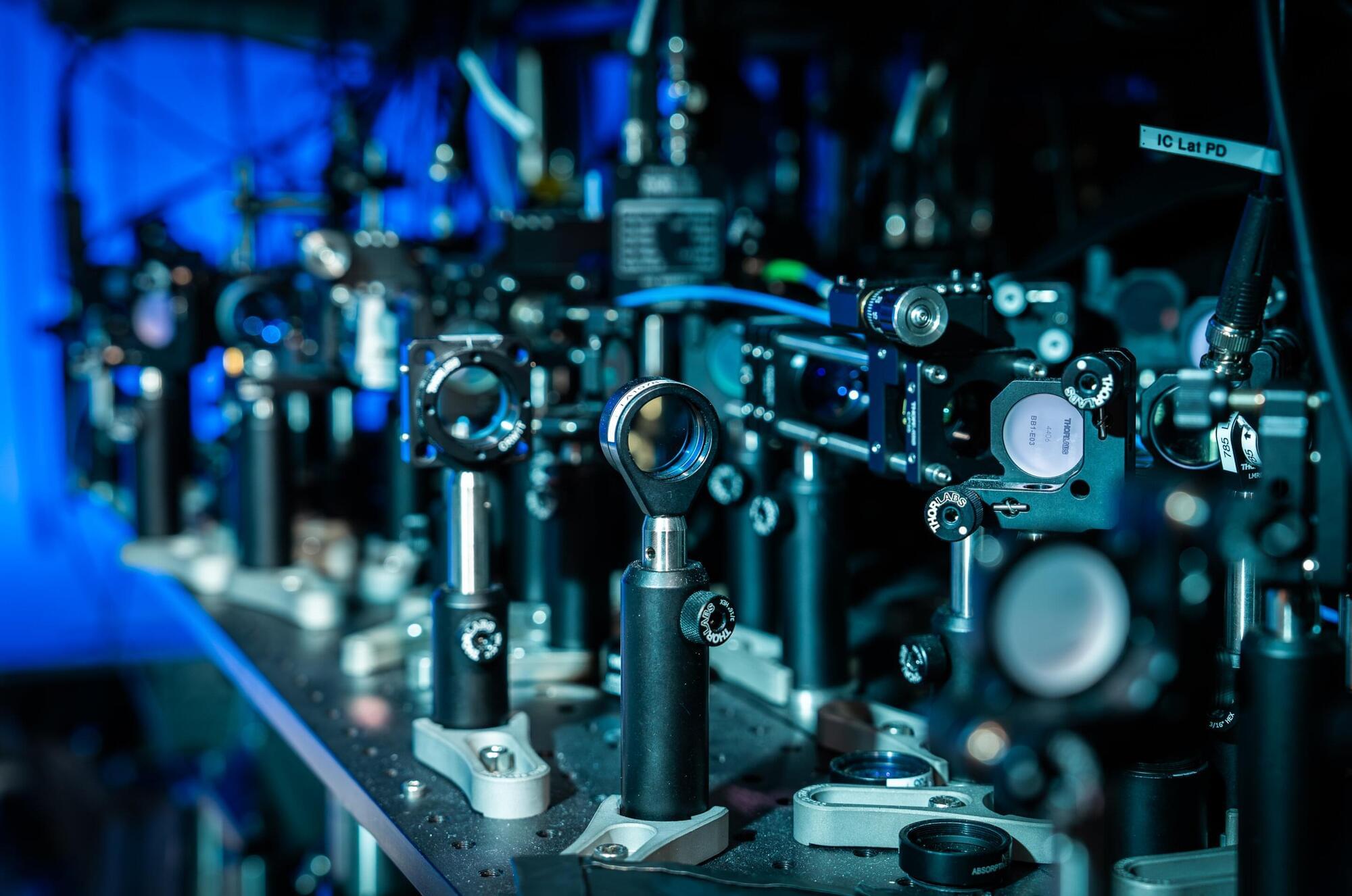

In a paper published in Nature Astronomy, researchers at the University of Victoria's (UVic) Astronomy Research Center (ARC) and the University of Minnesota report a solution to the problem.