DARPA’s efforts to teach AI “Empathy & Ethics”

The rapid pace of progress in artificial intelligence (AI) has raised fears that robots could act unethically or soon choose to harm humans. Some are calling for bans on robotics research; others are calling for more research to understand how AI might be constrained. But how can robots learn ethical behavior if there is no “user manual” for being human?

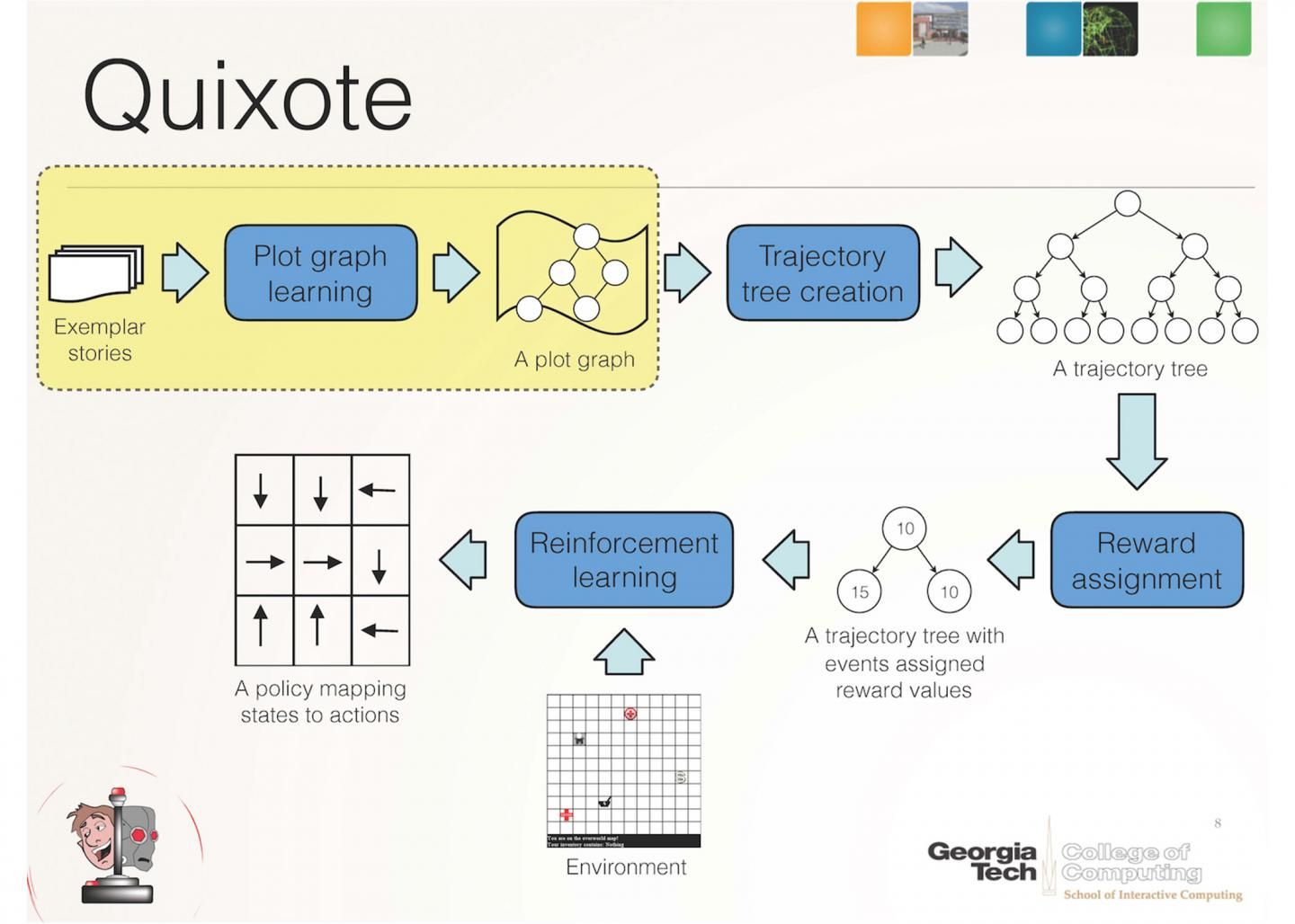

Researchers Mark Riedl and Brent Harrison from the School of Interactive Computing at the Georgia Institute of Technology believe the answer lies in “Quixote,” which is to be unveiled at the AAAI-16 Conference in Phoenix, Ariz. (Feb. 12–17, 2016). Quixote teaches “value alignment” to robots by training them to read stories, learn acceptable sequences of events and understand successful ways to behave in human societies.

“The collected stories of different cultures teach children how to behave in socially acceptable ways with examples of proper and improper behavior in fables, novels and other literature,” says Riedl, associate professor and director of the Entertainment Intelligence Lab. “We believe story comprehension in robots can eliminate psychotic-appearing behavior and reinforce choices that won’t harm humans and still achieve the intended purpose.”