“So there came a time in which the ideas, although accumulated very slowly, were all accumulations not only of practical and useful things, but great accumulations of all types of prejudices, and strange and odd beliefs.

Then a way of avoiding the disease was discovered. This is to doubt that what is being passed from the past is in fact true, and to try to find out ab initio again from experience what the situation is, rather than trusting the experience of the past in the form in which it is passed down. And that is what science is: the result of the discovery that it is worthwhile rechecking by new direct experience, and not necessarily trusting the [human] race[’s] experience from the past. I see it that way. That is my best definition… Science is the belief in the ignorance of experts.”

–Richard P Feynman, What is Science? (1968)[1]

TruthSift.com is a platform designed to support and guide individuals or crowds to rationality, and make them smarter collectively than any unaided individual or group. (Free) Members use TruthSift to establish what can be established, refute what can’t be, and to transparently publish the demonstrations. Anyone can browse the demonstrations and learn what is actually known and how it was established. If they have a rational objection, they can post it and have it answered.

Whether in scientific fields such as climate change or medical practice, or within the corporate world or political or government debate, or on day to day factual questions, humanity hasn’t had a good method for establishing rational truth. You can see this from consequences we often fail to perceive:

Peer-reviewed surveys agree: a landslide majority of medical practice is *not* supported by science [2,3,4]. Scientists are often confused about the established facts in their own field [5]. Within fields like climate science and vaccines, which badly desire consensus, no true consensus can be reached, because skeptics raise issues that the majority brushes aside without an established answer (exactly what Le Bon warned of more than 100 years ago [6]). Widely consulted sources like Wikipedia are reported to be largely paid propaganda on many important subjects [7], or the most popular answer rather than an established one [8]. Quora shows you the most popular individual answer, generated with little or no collaboration, and often there is little documentation of why you should believe it. Existing systems for crowd-sourced wisdom largely compound groupthink rather than addressing it. Existing fact-checking websites give you someone’s point of view.

Corporate or government planning is no better. Within large organizations, where there is inevitably systemic motivation not to pass bad news up, leadership needs active measures to avoid becoming clueless about the real problems [9]. Corporate or government plans are subject to groupthink, or to takeover by employee or other interests competing with the mission. Individuals who perceive mistakes have no recourse capable of rationally persuading the majority, and may in any case be discouraged from speaking up by various consequences [6].

TruthSift is designed to solve all these problems. TruthSift realizes in your browser the Platonic ideal of the scientific literature, but applies it to everything, making it tangible and lightweight, with a much lower hurdle for publishing. On a public TruthSift diagram, members (or, on a private diagram, members you have invited) who believe they can prove or refute a statement can post their proof or refutation exactly where it is relevant. TruthSift logically propagates the consequences of each contribution, graphically displaying how it impacts the establishment status of all the others, drawing statements established by the combined efforts with thick borders and refuted statements with thin ones. Statements are considered established only when they have an established demonstration, one with every posted challenge refuted.

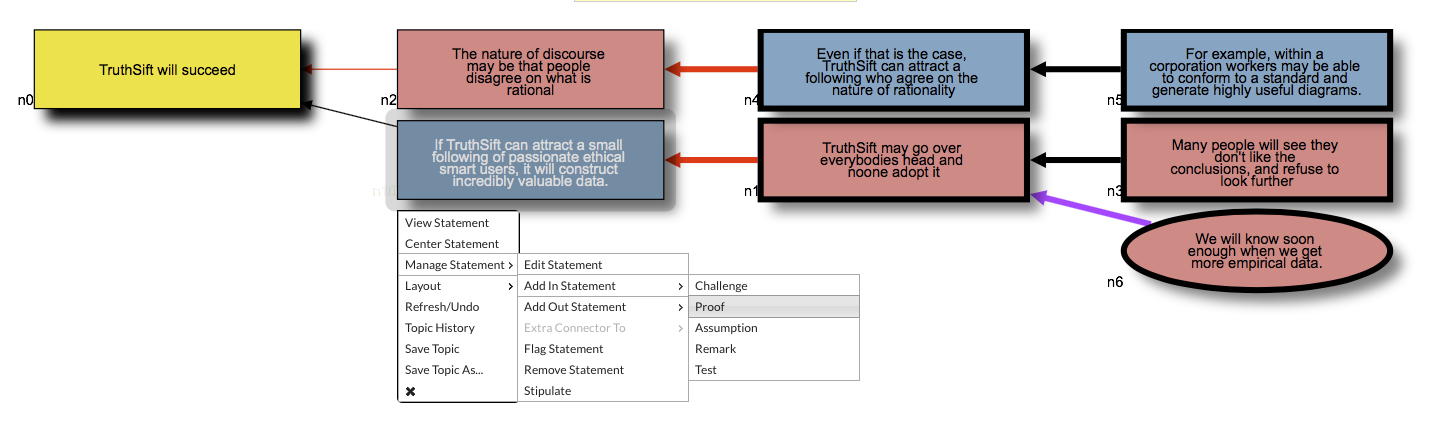

Fig 1: An example topic. The topic statement n0 is currently refuted, because its only proof is refuted. The statement menu is shown open in position to add a proof to this proof. The topic statement is gold, pro statements are blue, con statements are red. Proof connectors are black, challenges red, remarks purple, assumptions (not shown) blue. Statements show the title. On the actual topic, the body can be seen by selecting the statement and choosing “View Statement”, or by hovering the mouse.

What is a proof? According to the first definition at Dictionary.com, a proof is: “evidence sufficient to establish a thing as true, or to produce belief in its truth.” In mathematics, a proof is equivalent to a proof tree that starts at axioms, or previously established results, which the participants agree to stipulate, and proceeds by a series of steps that are individually unchallengeable. Each such step logically combines several previously established conclusions and/or axioms. The proof tree proceeds in this way until it establishes the stated conclusion. Mathematicians often raise objections to steps of a proof, but if it is subsequently established that all such objections are invalid, or if a workaround for the problem is found, the proof is accepted.

The scientific literature works very similarly. Each paper adds some novel argument or evidence that previous work is or is not true, or extends it to establish new results. When people run out of valid, novel reasons why something is or is not proved, what remains is an established theory, or a refutation of it or of all its offered proofs.

Fig 2: Galileo’s Dialogue Concerning the Two Chief World Systems. The black triangle indicates other incoming edges not shown. For complex diagrams, it is often best to walk around in focused view, centered on each statement in turn.

TruthSift is a platform for diagramming this process and applying it to any statement members care to propose to establish or refute. One may state a topic and add a proof tree for it, which is drawn as a diagram with every step and connection explicit. Members may state a demonstration of some conclusion they want to prove, starting from statements they assert are self-evident or that cite an authority they consider trustworthy, then building useful intermediate results that rationally follow from those assumptions, and continuing until they reach the stated conclusion. If somebody thinks they have found a hole in a proof at any step, or thinks one of the original assumptions needs further proof, they can challenge it, explaining the problem they see. The writer of the proof (or others, if it is in collaboration mode) may then edit the proof to fix the problem, or clarify the explanation if they feel the challenger was simply mistaken, and may counter-challenge the challenge, explaining that it has been resolved or was mistaken. This can go on recursively, with someone pointing out a hole in the counter-challenger’s argument that the challenge was invalid. On TruthSift the whole argument is laid out graphically and essentially block-chained, which should prevent the kind of edit wars that happen on controversial topics on Wikipedia. Each challenge or post should state a novel reason, and when the rational arguments are exhausted, as in mathematics, what remains is either a proof of the conclusion or a refutation of it or of all its proofs.

As statements are added to a diagram, TruthSift keeps track of what is established and what is refuted, drawing established statements’ borders and their outgoing connectors thick, and refuted statements’ borders and their outgoing connectors thin, so viewers can instantly tell what is currently established and what is refuted. TruthSift computes this with a simple algorithm. It starts at statements with no incoming assumptions, challenges, or proofs; these stand unchallenged as assertions that prove themselves, are self-evident, or appeal to an authority everybody trusts, and they are considered established. It then walks up the diagram, rating each statement after all its parents have been rated. A statement is established if all its assumptions are established, none of its challenges is established, and, if it has proofs, at least one of them is established. (We support challenges requesting that a proof be added to a statement that has none and does not adequately prove itself.) Otherwise, that is, if a statement has an established challenge, has a refuted assumption, or has proofs that are all refuted, it is refuted.
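The propagation rule above is simple enough to sketch in a few lines. The Python below is a minimal illustration under stated assumptions, not TruthSift’s own code: the `Statement` class, its field names, and the `rate` function are hypothetical, and it assumes the diagram is acyclic (Python’s standard `graphlib` raises `CycleError` otherwise).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # Python 3.9+


@dataclass
class Statement:
    # Hypothetical data model: each list holds the ids of the parent
    # statements attached to this one by that connector type.
    assumptions: list[str] = field(default_factory=list)
    challenges: list[str] = field(default_factory=list)
    proofs: list[str] = field(default_factory=list)

    def parents(self) -> list[str]:
        return self.assumptions + self.challenges + self.proofs


def rate(diagram: dict[str, Statement]) -> dict[str, bool]:
    """Map each statement id to True (established) or False (refuted)."""
    # static_order() yields a statement only after all of its parents, and
    # raises graphlib.CycleError if the diagram contains a cycle.
    order = TopologicalSorter({sid: s.parents() for sid, s in diagram.items()})
    status: dict[str, bool] = {}
    for sid in order.static_order():
        s = diagram[sid]
        status[sid] = (
            all(status[a] for a in s.assumptions)          # every assumption holds
            and not any(status[c] for c in s.challenges)   # no challenge holds
            and (not s.proofs or any(status[p] for p in s.proofs))  # a proof holds, if any were posted
        )
    return status


# A Fig 1-style case: topic n0 has one proof p1, which carries an unanswered challenge c1.
diagram = {"n0": Statement(proofs=["p1"]), "p1": Statement(challenges=["c1"]), "c1": Statement()}
print(rate(diagram)["n0"])  # False: c1 stands, so p1 falls, so n0 has no surviving proof

# Refuting the challenge with a counter-challenge restores the chain.
diagram["c1"] = Statement(challenges=["cc1"])
diagram["cc1"] = Statement()
print(rate(diagram)["n0"])  # True
```

A statement with no parents falls out of the same rule: an empty list of assumptions, challenges, and proofs makes every condition true, so it starts out established, exactly as the text describes.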

To understand why a statement is established or refuted, center focus on it, so that you see it and its parents in the diagram. If it is refuted, either there is an established challenge of it, or one of its assumptions is refuted, or all of its proofs are. If it is not refuted, it is established. Work your way backward up the diagram, centering on each statement in turn, and examine the reasons why it is established or refuted.
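Reading a diagram backward can be sketched the same way. This hypothetical helper assumes each statement’s status has already been computed by the rule described earlier; the status is passed in as a plain dictionary so the snippet stands alone. The names `why` and `trace` and the dictionary layout are illustrative, not part of TruthSift.

```python
from typing import Optional

# Hypothetical layout: statement id -> {"assumptions": [...], "challenges": [...], "proofs": [...]}
Diagram = dict[str, dict[str, list[str]]]


def why(sid: str, diagram: Diagram, status: dict[str, bool]) -> tuple[str, list[str]]:
    """Return a short reason for sid's status and the parent ids behind it."""
    s = diagram[sid]
    assumptions = s.get("assumptions", [])
    challenges = s.get("challenges", [])
    proofs = s.get("proofs", [])
    if status[sid]:
        # Established: every assumption holds, every challenge is refuted,
        # and (if proofs were posted) at least one proof survives.
        surviving = [p for p in proofs if status[p]]
        return "established", assumptions + challenges + surviving[:1]
    culprits = [c for c in challenges if status[c]] + [a for a in assumptions if not status[a]]
    if culprits:
        return "refuted: established challenge or refuted assumption", culprits
    return "refuted: all proofs refuted", proofs


def trace(sid: str, diagram: Diagram, status: dict[str, bool],
          depth: int = 0, seen: Optional[set[str]] = None) -> list[str]:
    """Walk backward up the diagram, one indented line per statement visited."""
    seen = set() if seen is None else seen
    if sid in seen:
        return []
    seen.add(sid)
    reason, parents = why(sid, diagram, status)
    lines = ["  " * depth + f"{sid}: {reason}"]
    for parent in parents:
        lines += trace(parent, diagram, status, depth + 1, seen)
    return lines


diagram = {"n0": {"proofs": ["p1"]}, "p1": {"challenges": ["c1"]}, "c1": {}}
status = {"n0": False, "p1": False, "c1": True}
print("\n".join(trace("n0", diagram, status)))
# n0: refuted: all proofs refuted
#   p1: refuted: established challenge or refuted assumption
#     c1: established
```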

Fig 3: An example topic.

Effective contribution to TruthSift diagrams involves mental effort. This is both a hurdle and a feature: TruthSift teaches critical thinking. First you think about your topic statement. How exactly should you specify Vaccine Safety or Climate Change, so that it covers what you want to establish or refute and is amenable to rational discussion? There is currently no place you could go to see that well specified, and can you properly establish or refute it without properly specifying it? Next you think about the arguments for your topic statement, and those against it, and those against the arguments for, and those for the arguments for, and the arguments against the arguments against, and so on until everybody runs out of arguments, at which point what is left is a concise rational analysis of what is established and why. The debate is settled point by point. The process naturally subdivides the field into sub-topics where different expertise comes into play, promoting true collective wisdom and understanding.

For TruthSift to work properly, posters have to respect the guidelines and post only proof or challenge statements that they believe rationally prove or refute their target and that are novel to the diagram (or novel additional evidence posted as assumptions, remarks, or tests, which are alternative connector types). Posts violating the guidelines may be flagged and removed, as may consistent violators. Posts don’t have to be correct (that’s what challenges are for), but they have to be honest attempts, not spam or ad hominem attacks. Don’t get hung up on whether a statement should be added as a proof or as an assumption of another. Frequently you want to assemble arguments for a proposition stating something like “the preponderance of the evidence indicates X”, and these arguments are neither individually necessary for X nor individually proofs of X. It is safe to simply add them as proofs. They are not necessary assumptions, and if not enough of them are established, the target may be challenged on that basis. The goal is a diagram that transparently explains a proof and what is wrong with all the objections people have found plausible.

For cases where members disagree on underlying assumptions or basic principles, stipulation is available. If one or more statements are stipulated, statements are shown as conditionally true if established based on the stipulations and as conditionally false if refuted based on the stipulations. The challenges to the stipulation are also shown. TruthSift supports reasoning from different fundamental assumptions, but requires being explicit about it when challenged.
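One plausible reading of stipulation can be sketched in Python under two loudly stated assumptions: a stipulated statement is held established whatever its challenges say, and a result counts as conditional when it lies downstream of a stipulated statement. TruthSift’s actual rule may differ. The dictionary layout and the `rate`, `parent_map`, and `labels` functions are hypothetical; the challenges to a stipulation are still rated and reported, as the text describes.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical layout: statement id -> {"assumptions": [...], "challenges": [...], "proofs": [...]}
Diagram = dict[str, dict[str, list[str]]]
KINDS = ("assumptions", "challenges", "proofs")


def parent_map(diagram: Diagram) -> dict[str, list[str]]:
    return {sid: [p for k in KINDS for p in s.get(k, [])] for sid, s in diagram.items()}


def rate(diagram: Diagram, stipulated: frozenset[str] = frozenset()) -> dict[str, bool]:
    """Propagate established/refuted status, holding stipulated statements true."""
    status: dict[str, bool] = {}
    for sid in TopologicalSorter(parent_map(diagram)).static_order():
        s = diagram[sid]
        if sid in stipulated:
            status[sid] = True  # assumption: stipulation overrides its challenges
            continue
        proofs = s.get("proofs", [])
        status[sid] = (
            all(status[a] for a in s.get("assumptions", []))
            and not any(status[c] for c in s.get("challenges", []))
            and (not proofs or any(status[p] for p in proofs))
        )
    return status


def labels(diagram: Diagram, stipulated: frozenset[str]) -> dict[str, str]:
    """Label each statement, marking results that rest on a stipulation."""
    status = rate(diagram, stipulated)
    children: dict[str, list[str]] = {sid: [] for sid in diagram}
    for sid, parents in parent_map(diagram).items():
        for p in parents:
            children[p].append(sid)
    # Collect every statement downstream of a stipulated one.
    downstream: set[str] = set()
    stack = list(stipulated)
    while stack:
        for child in children[stack.pop()]:
            if child not in downstream:
                downstream.add(child)
                stack.append(child)
    out: dict[str, str] = {}
    for sid, ok in status.items():
        word = "true" if ok else "false"
        if sid in stipulated:
            out[sid] = "stipulated"
        elif sid in downstream:
            out[sid] = "conditionally " + word
        else:
            out[sid] = word
    return out


# Axiom A is challenged by C; conclusion T assumes A.
diagram = {"A": {"challenges": ["C"]}, "C": {}, "T": {"assumptions": ["A"]}}
print(rate(diagram)["T"])                        # False: C stands, so A and T are refuted
print(labels(diagram, frozenset({"A"}))["T"])    # conditionally true
```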

Probability mode supports the intuitive construction of probabilistic models, and evaluates the probability of each statement in the topic, marginalizing over all the parameters in the topic. With a little practice, these models allow folding in various connections and evidence. They could be used for collaborative, verified risk models; to support proofs with additional confidence tests; to reason about hidden causes; or for many other novel applications.
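TruthSift’s probability model is not described here in detail, so the following is only a generic illustration of what marginalizing over a topic means: a tiny Bayesian network over true/false statements, evaluated by brute-force enumeration of every assignment. The model format, the `joint` and `marginals` functions, and the numbers are hypothetical. Enumeration is exponential in the number of statements, so this is practical only for small examples.

```python
from itertools import product
from typing import Optional

# Hypothetical model: every statement is a true/false variable. Each entry gives
# the statements it depends on and P(true) for every combination of their values.
Model = dict[str, tuple[list[str], dict[tuple[bool, ...], float]]]


def joint(model: Model, world: dict[str, bool]) -> float:
    """Probability of one complete true/false assignment to every statement."""
    p = 1.0
    for name, (parents, table) in model.items():
        p_true = table[tuple(world[q] for q in parents)]
        p *= p_true if world[name] else 1.0 - p_true
    return p


def marginals(model: Model, evidence: Optional[dict[str, bool]] = None) -> dict[str, float]:
    """P(statement is true | evidence), summing the joint over every world."""
    evidence = evidence or {}
    names = list(model)
    totals = dict.fromkeys(names, 0.0)
    norm = 0.0
    for values in product((False, True), repeat=len(names)):
        world = dict(zip(names, values))
        if any(world[k] != v for k, v in evidence.items()):
            continue  # inconsistent with the observed evidence
        w = joint(model, world)
        norm += w
        for n in names:
            if world[n]:
                totals[n] += w
    return {n: totals[n] / norm for n in names}


model: Model = {
    "H": ([], {(): 0.1}),                          # hidden cause, prior 0.1
    "E": (["H"], {(True,): 0.9, (False,): 0.2}),   # evidence the cause tends to produce
}
print(round(marginals(model)["E"], 3))               # 0.27
print(round(marginals(model, {"E": True})["H"], 3))  # 0.333
```

Observing E raises the probability of the hidden cause H from 0.1 to about 0.33, a small instance of the reasoning about hidden causes mentioned above.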

Rebut it if you can. Dashed edges represent citations into the literature. The title is shown for each statement; on the actual topic, select “View Statement” to see the body.

Basic Membership is free. In addition to public diagrams debating the big public issues, private diagrams are available for personal or organizational planning, or to exclude noise from your debate. Private diagrams have editing and/or viewing by invitation only. Come try it. http://TruthSift.com

TruthSift’s mission is to enable publication of a transparent exposition of human knowledge, so that anyone may readily determine what is truth and what fiction, what can be established by valid Demonstration and what can’t, and so that anyone can read and understand that Demonstration.

We intend the process of creating this exposition to lead to vastly increased understanding and improved critical thinking skills amongst our members and beyond. We hope to support collaborative human intelligences greater than any intelligence previously achieved on the planet, both in the public domain and for members’ private use.

And please, I’d love feedback or questions.

1. Richard P Feynman, What is Science? (1968) http://www-oc.chemie.uni-regensburg.de/diaz/img_diaz/feynman…nce_68.pdf

2. Office of Technology Assessment, Congress of the United States, Assessing the Efficacy and Safety of Medical Technologies (1978) http://www.fas.org/ota/reports/7805.pdf

3. Jeannette Ezzo, Barker Bausell, Daniel E. Moerman, Brian Berman and Victoria Hadhazy, “Reviewing the reviews”, International Journal of Technology Assessment in Health Care, 17, pp 457–466 (2001) http://journals.cambridge.org/action/displayAbstract?fromPag…aid=101041

4. John S Garrow, “What to do about CAM?: How much of orthodox medicine is evidence based?”, BMJ 2007 Nov 10; 335(7627): 951. doi:10.1136/bmj.39388.393970.1F PMCID: PMC2071976 http://www.dcscience.net/garrow-evidence-bmj.pdf

5. S. A. Greenberg, “How citation distortions create unfounded authority: analysis of a citation network”, BMJ 2009;339:b2680 http://www.bmj.com/content/339/bmj.b2680

6. Gustave Le Bon, The Crowd (1895); new edition, Transaction Publishers (1995)

7. S Attkisson, “Astroturf and manipulation of media messages”, TEDx University of Nevada, (2015) https://www.youtube.com/watch?v=-bYAQ-ZZtEU

8. Adam M. Wilson, Gene E. Likens, “Content Volatility of Scientific Topics in Wikipedia: A Cautionary Tale”, PLOS ONE (2015) DOI: 10.1371/journal.pone.0134454 http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0134454

9. Kiira Siitari, Jim Martin & William W. Taylor (2014) Information Flow in Fisheries Management: Systemic Distortion within Agency Hierarchies, Fisheries, 39:6, 246–250, http://dx.doi.org/10.1080/03632415.2014.915814
