Perceiving an object only visually (e.g. on a screen) or only by touching it can sometimes limit what we are able to infer about it. Human beings, however, have the innate ability to integrate visual and tactile stimuli, leveraging whatever sensory data is available to complete their daily tasks.

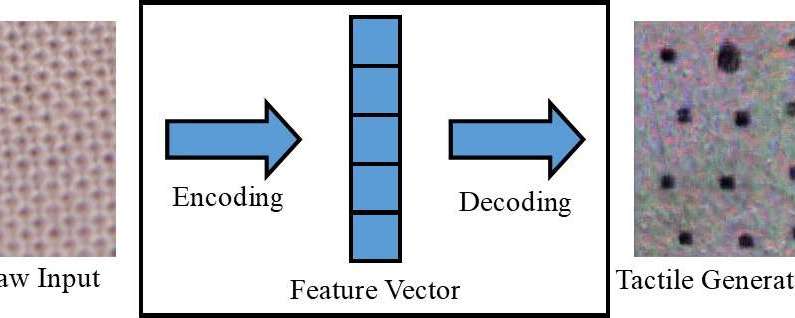

Researchers at the University of Liverpool have recently proposed a new framework to generate cross-modal sensory data, which could help to replicate both visual and tactile information in situations in which one of the two is not directly accessible. Their framework could, for instance, allow people to perceive objects on a screen (e.g. clothing items on e-commerce sites) both visually and tactually.

“In our daily experience, we can cognitively create a visualization of an object based on a tactile response, or a tactile response from viewing a surface’s texture,” Dr. Shan Luo, one of the researchers who carried out the study, told TechXplore. “This perceptual phenomenon, called synesthesia, in which the stimulation of one sense causes an involuntary reaction in one or more of the other senses, can be employed to make up an inaccessible sense. For instance, when one grasps an object, our vision will be obstructed by the hand, but a touch response will be generated to ‘see’ the corresponding features.”