Even in a dual-socket AMD EPYC Rome/Milan server with 4 x MI100 PCIe-based accelerators, we're looking at 128GB of HBM on offer with 4.9TB/sec of bandwidth. Compute drops to 136 TFLOPs in this configuration as well.
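A quick way to sanity-check aggregate figures like these is to divide them by the card count. The sketch below assumes the totals are split evenly across four identical MI100 cards. The helper name `per_card` is hypothetical, and the source does not say which precision the 136 TFLOPs figure refers to.

```python
# Aggregate figures quoted for a dual-socket EPYC server with 4 x MI100 cards.
NUM_CARDS = 4
TOTAL_HBM_GB = 128
TOTAL_BANDWIDTH_TBS = 4.9
TOTAL_TFLOPS = 136  # precision (FP32/FP64/matrix) not stated in the source


def per_card(total: float, cards: int = NUM_CARDS) -> float:
    """Split an aggregate figure evenly across identical cards (assumption)."""
    return total / cards


print(per_card(TOTAL_HBM_GB))         # 32.0 (GB per card)
print(per_card(TOTAL_BANDWIDTH_TBS))  # 1.225 (TB/sec per card)
print(per_card(TOTAL_TFLOPS))         # 34.0 (TFLOPs per card)
```

The even split is only a rough check. Real per-card numbers come from the vendor's spec sheet, not from dividing system totals.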

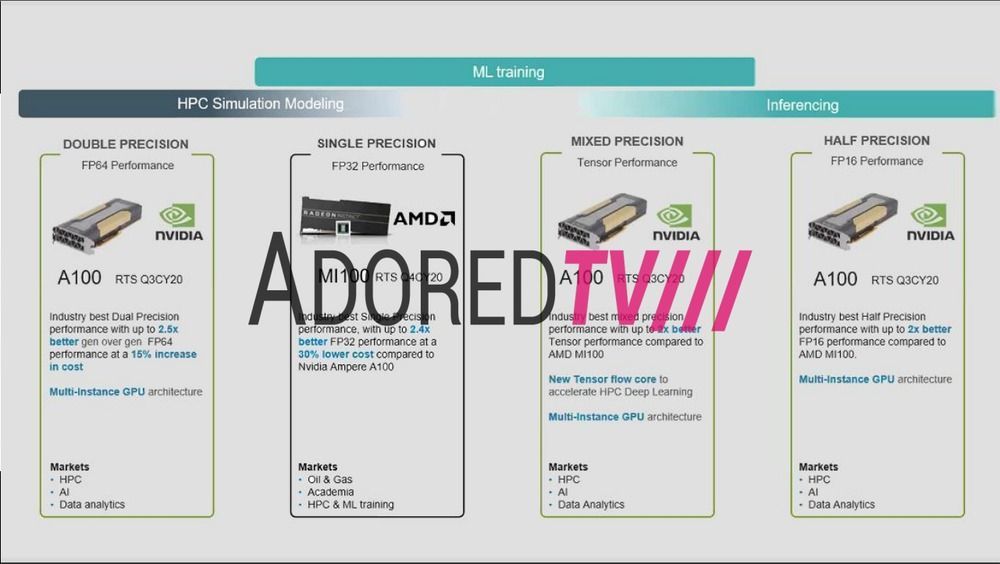

We are looking at the purported AMD Radeon Instinct MI100 accelerator being around 13% faster in FP32 compute than NVIDIA's new Ampere A100 accelerator. The performance-to-value ratio is much better too: the MI100 offers 2.4x better value than a V100S setup and 50% better value than the Ampere A100.
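If "value" is read as FP32 performance per dollar, which is an assumption since the article does not define it, the two A100 figures together imply a relative price. Because value = performance / price, the price ratio is the performance ratio divided by the value ratio. The function name below is hypothetical.

```python
def implied_price_ratio(perf_ratio: float, value_ratio: float) -> float:
    """Assuming value = perf / price, return price_a / price_b.

    perf_ratio  = perf_a / perf_b
    value_ratio = (perf_a / price_a) / (perf_b / price_b)
    => price_a / price_b = perf_ratio / value_ratio
    """
    return perf_ratio / value_ratio


# MI100 vs A100: ~13% faster in FP32 (1.13x) and 50% better value (1.5x).
ratio = implied_price_ratio(1.13, 1.5)
print(f"{ratio:.2f}")  # 0.75
```

Under that reading, an MI100 setup would cost roughly three quarters as much as a comparable A100 setup. The number holds only if both claims use the same performance metric and pricing basis.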
