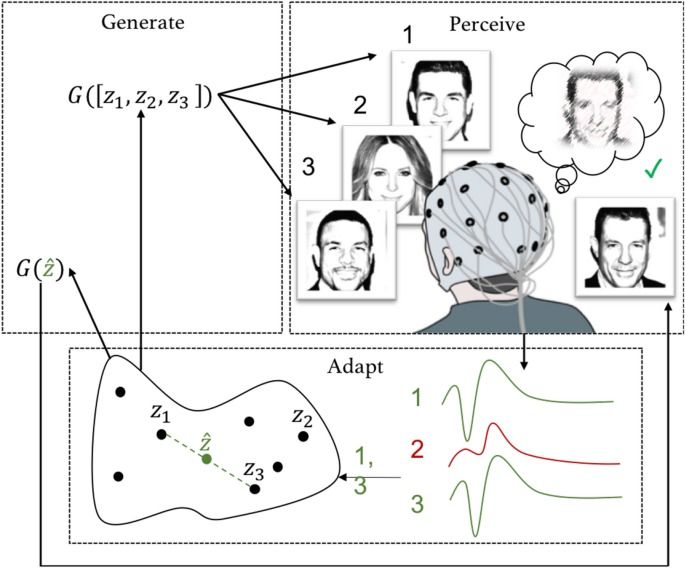

Brain–computer interfaces enable active communication and execution of a pre-defined set of commands, such as typing a letter or moving a cursor. However, they have so far been unable to infer more complex intentions or to adapt more complex output based on brain signals. Here, we present neuroadaptive generative modelling, which uses a participant’s brain signals as feedback to adapt a boundless generative model and generate new information matching the participant’s intentions. We report an experiment validating the paradigm in generating images of human faces. In the experiment, participants were asked to focus on specific perceptual categories, such as old or young people, while being presented with computer-generated, photorealistic faces with varying visual features. The EEG signals associated with the images were then used as feedback to update a model of the participant’s intentions, from which new images were generated using a generative adversarial network. A double-blind follow-up, in which the participant evaluated the output, shows that neuroadaptive modelling can be utilised to produce images matching the perceptual category features. The approach demonstrates brain-based creative augmentation between computers and humans for producing new information matching the human operator’s perceptual categories.
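The feedback loop described above can be illustrated with a minimal sketch. The abstract does not specify the update rule, so the code below makes several assumptions: each shown image is represented by a latent vector, a (here simulated) classifier labels each EEG response as relevant or not, and the intention model is taken to be the relevance-weighted mean of the latent vectors. The names `update_intention` and `toy_generator` are hypothetical, and `toy_generator` is only a stand-in for a trained generative adversarial network, not the generator used in the experiment.

```python
import numpy as np


def update_intention(latents, relevance):
    """Estimate an intention vector as the relevance-weighted mean of latents.

    Assumption: averaging latent vectors of images flagged as relevant is one
    plausible update rule; the source does not state the rule it uses.
    """
    latents = np.asarray(latents, dtype=float)
    relevance = np.asarray(relevance, dtype=float)
    total = relevance.sum()
    if total == 0:
        # No image was flagged as relevant: fall back to the latent-space origin.
        return np.zeros(latents.shape[1])
    return (relevance[:, None] * latents).sum(axis=0) / total


def toy_generator(z, weights):
    """Hypothetical stand-in for a GAN generator: a linear map followed by tanh."""
    return np.tanh(weights @ z)


rng = np.random.default_rng(0)
latent_dim, n_images, n_pixels = 8, 20, 16

# Latent vectors of the faces shown to the participant (simulated here).
latents = rng.standard_normal((n_images, latent_dim))

# Simulated classifier output: 1.0 where the EEG response would be judged
# relevant to the target category. Here "relevant" is arbitrarily tied to
# the sign of the first latent dimension.
relevance = (latents[:, 0] > 0).astype(float)

# Update the intention model, then generate a new "image" from it.
intention = update_intention(latents, relevance)
weights = rng.standard_normal((n_pixels, latent_dim))
image = toy_generator(intention, weights)
```

In a real system, `relevance` would come from a classifier trained on the participant's EEG responses, and `toy_generator` would be replaced by the generator network that originally produced the displayed faces, so that the estimated intention vector lies in the same latent space.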