Grasping objects is something primates do effortlessly, but how does the brain coordinate such a complex task? Multiple brain areas across the parietal and frontal cortices of macaque monkeys are essential for shaping the hand during grasping, but we lack a comprehensive model of grasping from vision to action. In this work, we show that multiarea neural networks trained to use the visual features of objects to reproduce the arm and hand control required for grasping also reproduced the neural dynamics in grasping regions and the relationships between areas, outperforming alternative models. Simulated lesion experiments revealed unique deficits paralleling lesions to specific areas in the grasping circuit, providing a model of how these areas work together to drive behavior.

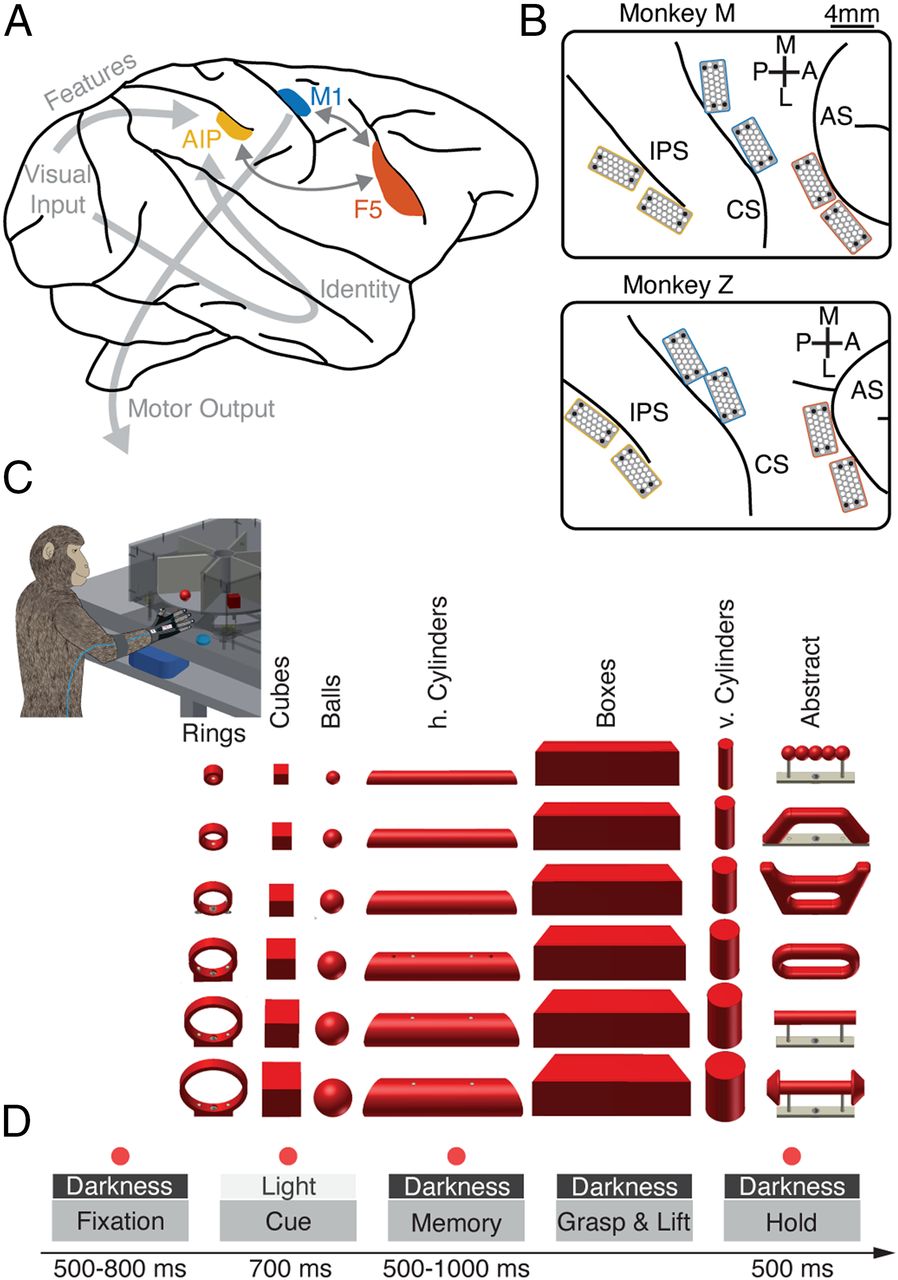

One of the primary ways we interact with the world is using our hands. In macaques, the circuit spanning the anterior intraparietal area, the hand area of the ventral premotor cortex, and the primary motor cortex is necessary for transforming visual information into grasping movements. However, no comprehensive model exists that links all steps of processing from vision to action. We hypothesized that a recurrent neural network that mimics the modular structure of the anatomical circuit, and that is trained to use visual features of objects to generate the muscle dynamics primates use to grasp them, would give insight into the computations of the grasping circuit. The internal activity of modular networks trained with these constraints strongly resembled neural activity recorded from the grasping circuit during grasping and paralleled the similarities between brain regions.
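To make the idea of a modular recurrent network concrete, the following is a minimal sketch and not the model used in this work. It assumes three equally sized modules (index 0, 1, 2, loosely standing in for the anterior intraparietal area, ventral premotor cortex, and primary motor cortex), dense connections within each module, sparse connections between adjacent modules only, visual features entering the first module, and muscle signals read out from the last. The function names, module size, connection density, leak rate, and the untrained random weights are all illustrative assumptions. A simulated lesion is approximated here by silencing the firing rates of one module.

```python
import numpy as np

def build_modular_weights(n_per_module=20, n_modules=3, inter_density=0.1, seed=0):
    """Random recurrent weights with a block structure (hypothetical helper).

    Within-module blocks are dense; blocks between adjacent modules are
    sparse; blocks between non-adjacent modules stay at zero.
    """
    rng = np.random.default_rng(seed)
    n = n_per_module * n_modules
    W = np.zeros((n, n))
    for i in range(n_modules):          # target module
        for j in range(n_modules):      # source module
            rows = slice(i * n_per_module, (i + 1) * n_per_module)
            cols = slice(j * n_per_module, (j + 1) * n_per_module)
            block = rng.normal(0.0, 1.0 / np.sqrt(n_per_module),
                               (n_per_module, n_per_module))
            if i == j:
                W[rows, cols] = block
            elif abs(i - j) == 1:
                mask = rng.random((n_per_module, n_per_module)) < inter_density
                W[rows, cols] = block * mask
    return W

def run_network(W, W_in, W_out, inputs, n_per_module, lesion_module=None, alpha=0.1):
    """Leaky-rate RNN forward pass; optionally silence one module's rates."""
    n = W.shape[0]
    keep = np.ones(n)
    if lesion_module is not None:
        start = lesion_module * n_per_module
        keep[start:start + n_per_module] = 0.0   # simulated lesion
    x = np.zeros(n)                              # unit activations
    outputs = []
    for u in inputs:                             # one input vector per time step
        r = np.tanh(x) * keep                    # firing rates, lesioned units silent
        x = (1.0 - alpha) * x + alpha * (W @ r + W_in @ u)
        outputs.append(W_out @ (np.tanh(x) * keep))
    return np.array(outputs)

if __name__ == "__main__":
    n_per, n_feat, n_muscles = 20, 5, 4          # illustrative sizes
    rng = np.random.default_rng(1)
    W = build_modular_weights(n_per_module=n_per)
    W_in = np.zeros((3 * n_per, n_feat))
    W_in[:n_per] = rng.normal(size=(n_per, n_feat))          # input -> module 0 only
    W_out = np.zeros((n_muscles, 3 * n_per))
    W_out[:, 2 * n_per:] = rng.normal(size=(n_muscles, n_per))  # module 2 -> output
    visual = np.ones((50, n_feat))               # placeholder "visual features"
    intact = run_network(W, W_in, W_out, visual, n_per)
    lesioned = run_network(W, W_in, W_out, visual, n_per, lesion_module=1)
    print(intact.shape, np.abs(intact).max(), np.abs(lesioned).max())
```

In this sketch the weights are random rather than trained, so the output carries no meaningful grasp kinematics; the point is only the block-structured connectivity and how silencing one module interrupts the path from input to output.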