Transformer-based deep learning models like GPT-3 have received a great deal of attention in the machine learning world. These models excel at understanding semantic relationships, and they have contributed to large improvements in Microsoft Bing’s search experience. However, they can fail to capture more nuanced relationships between query and document terms that go beyond pure semantics.

A team of Microsoft researchers developed a neural network with 135 billion parameters, the largest “universal” AI model the company has running in production. The large number of parameters makes it one of the most sophisticated AI models publicly detailed to date. For comparison, OpenAI’s GPT-3 natural language processing model has 175 billion parameters and is among the largest neural networks built so far.

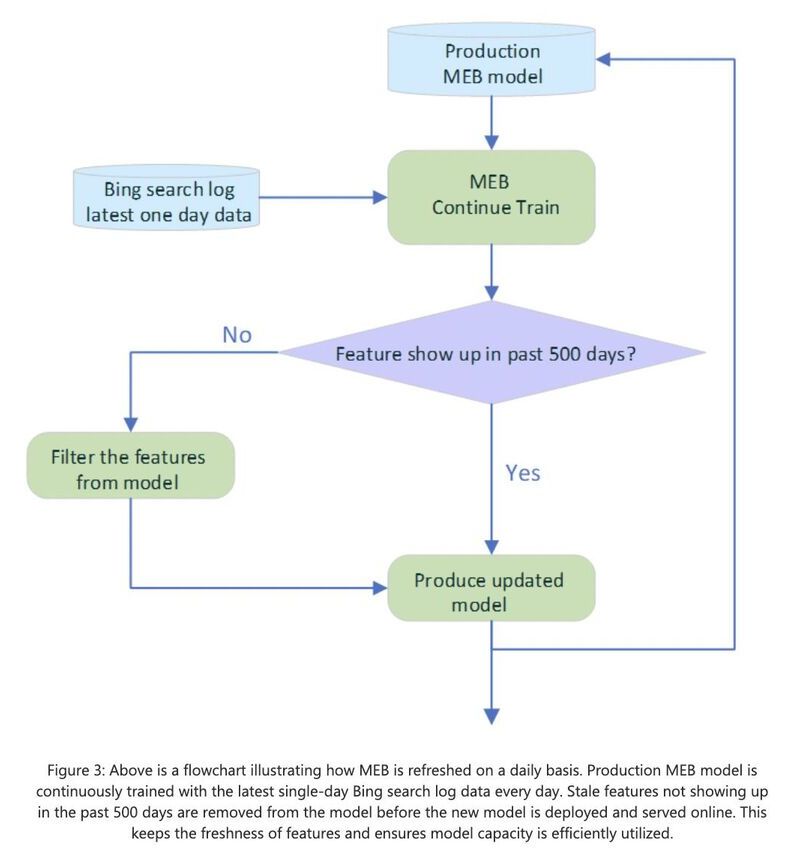

Microsoft researchers are calling their latest AI project MEB (Make Every feature Binary). The 135-billion-parameter model is built to analyze the queries that Bing users enter. It does not perform the task entirely on its own; instead, it works alongside a set of other machine learning algorithms to help identify the most relevant pages from around the web.