A team of researchers at Stanford University, working with a colleague at the Chinese Academy of Sciences, has built an AI-based filtering system that removes noise from seismic sensor data in urban areas. In their paper published in the journal Science Advances, the group describes how they trained their application and tested it against real data from a prior seismic event.

To provide advance warning when an earthquake is detected, scientists have placed seismometers in earthquake-prone areas, including urban areas where quakes do the most damage and harm or kill the most people. But seismologists have found it difficult to separate seismic data related to natural ground movement from data related to city life. They note that human activity in cities, such as road and rail traffic, produces a lot of seismic noise. In this new effort, the researchers developed a deep learning application that determines which seismic data is natural and which is man-made, and filters out the man-made portion.

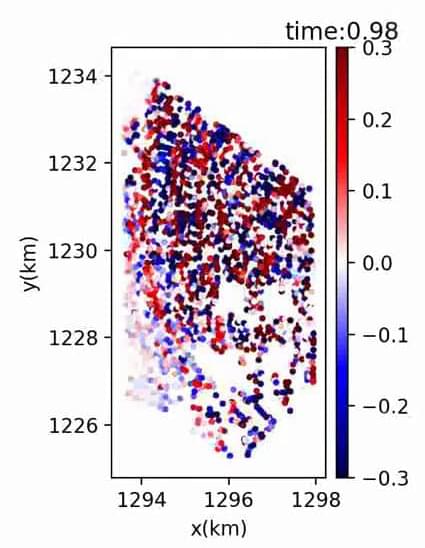

The researchers call their new application UrbanDenoiser. It was built using deep learning and trained on 80,000 samples of urban seismic noise along with 33,751 samples of recorded natural seismic activity. To see how well it worked, the team applied their filtering system to seismic data recorded in Long Beach, California. They found it improved the signal-to-noise ratio, the level of desired signal relative to background noise, by approximately 15 decibels. Satisfied with the results, they used UrbanDenoiser to analyze data from an earthquake that struck a nearby area in 2014. They found the application was able to detect four times as much data as the sensors yielded without the filtering.
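
To put the figure of approximately 15 decibels in perspective, the short sketch below converts a decibel gain into plain ratios using the standard decibel definition. It is only an illustration: the article does not say how the team computed their signal-to-noise figure, and the function names (`db_to_power_ratio`, `db_to_amplitude_ratio`, `snr_db`) are hypothetical helpers, not part of UrbanDenoiser.

```python
import math


def db_to_power_ratio(db: float) -> float:
    """Convert a gain in decibels to a ratio of powers."""
    return 10 ** (db / 10)


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a gain in decibels to a ratio of amplitudes."""
    return 10 ** (db / 20)


def rms(samples: list[float]) -> float:
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def snr_db(signal: list[float], noise: list[float]) -> float:
    """Signal-to-noise ratio in decibels, from RMS amplitudes.

    Assumption: signal and noise are available as separate sample
    windows, which is a simplification for illustration only.
    """
    return 20 * math.log10(rms(signal) / rms(noise))


if __name__ == "__main__":
    gain_db = 15.0  # approximate improvement reported in the article
    print(f"power ratio:     {db_to_power_ratio(gain_db):.1f}x")
    print(f"amplitude ratio: {db_to_amplitude_ratio(gain_db):.1f}x")
```

Under this definition, a 15 dB gain corresponds to roughly a 32-fold improvement in signal power relative to noise, or about a 5.6-fold improvement in amplitude.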