The table also shows the average normalized rank of transfer learning approaches. Hyperparameter transfer learning uses evaluation data from past HPO tasks in order to warmstart the current HPO task, which can result in significant speed-ups in practice.
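As a rough illustration of the metric, the sketch below computes an average normalized rank under one common convention: methods are ranked per benchmark (lower score is better), ranks are scaled to [0, 1], and the result is averaged over benchmarks. This convention, the function names, and the toy numbers are assumptions made for illustration; they are not taken from the table or from Syne Tune's code.

```python
from typing import Dict, List


def normalized_ranks(scores: Dict[str, float]) -> Dict[str, float]:
    """Rank methods on one benchmark (lower score is better), scaled to [0, 1].

    Ties are broken by insertion order, which is a simplification.
    """
    ordered = sorted(scores, key=scores.get)
    denom = max(len(ordered) - 1, 1)  # avoid division by zero for a single method
    return {method: position / denom for position, method in enumerate(ordered)}


def average_normalized_rank(per_benchmark: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each method's normalized rank over all benchmarks."""
    methods = per_benchmark[0].keys()
    ranks = [normalized_ranks(scores) for scores in per_benchmark]
    return {m: sum(r[m] for r in ranks) / len(ranks) for m in methods}


# Toy validation errors for three hypothetical methods on two benchmarks.
results = [
    {"method_a": 0.30, "method_b": 0.25, "method_c": 0.20},
    {"method_a": 0.12, "method_b": 0.14, "method_c": 0.10},
]
avg_rank = average_normalized_rank(results)
```

A value of 0 means the method was best on every benchmark, and 1 means it was always worst.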

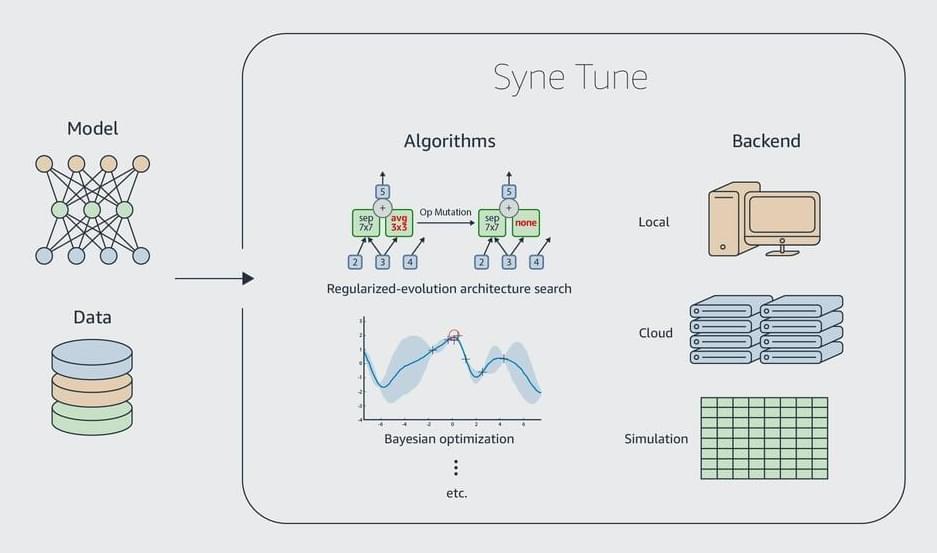

Syne Tune supports transfer-learning-based HPO via an abstraction that maps a scheduler and transfer learning data to a warmstarted instance of that scheduler. We consider the bounding-box and quantile-based variants of ASHA, referred to as ASHA-BB and ASHA-CTS, respectively. We also consider a zero-shot approach (ZS), which greedily selects hyperparameter configurations that complement previously considered ones, based on historical performance; and RUSH, which warmstarts ASHA with the best configurations found for previous tasks. As expected, we find that transfer learning approaches accelerate HPO.
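To make the idea of warmstarting from past evaluations concrete, the following minimal sketch derives two pieces of transfer information from historical data: the best configuration of each past task (the kind of seed a RUSH-style warmstart could use) and the bounding box spanned by those configurations (the kind of restricted search space a bounding-box approach could use). The data layout, the function names, and the toy values are hypothetical and do not reflect Syne Tune's actual API.

```python
from typing import Dict, List, Tuple

Config = Dict[str, float]
# Past evaluations of one task: (configuration, validation error), lower is better.
TaskEvaluations = List[Tuple[Config, float]]


def best_config_per_task(history: Dict[str, TaskEvaluations]) -> List[Config]:
    """Return the lowest-error configuration of each past task."""
    return [min(evals, key=lambda pair: pair[1])[0] for evals in history.values()]


def bounding_box(best_configs: List[Config]) -> Dict[str, Tuple[float, float]]:
    """Smallest axis-aligned box containing the best configurations of all tasks."""
    names = best_configs[0].keys()
    return {
        name: (min(c[name] for c in best_configs), max(c[name] for c in best_configs))
        for name in names
    }


# Toy history for two hypothetical past tasks.
history = {
    "task_a": [({"lr": 0.1, "wd": 0.01}, 0.30), ({"lr": 0.01, "wd": 0.001}, 0.20)],
    "task_b": [({"lr": 0.05, "wd": 0.0}, 0.15), ({"lr": 0.5, "wd": 0.1}, 0.40)],
}

initial_configs = best_config_per_task(history)  # candidates to evaluate first
search_box = bounding_box(initial_configs)       # reduced range per hyperparameter
```

A scheduler for the new task could then evaluate `initial_configs` first, or sample only inside `search_box`, instead of exploring the full search space from scratch.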

Our experiments show that Syne Tune makes research on automated machine learning more efficient, reliable, and trustworthy. By making simulation on tabulated benchmarks a first-class citizen, it makes hyperparameter optimization accessible to researchers without massive computation budgets. By supporting advanced use cases, such as hyperparameter transfer learning, it helps solve HPO problems more effectively in practice.