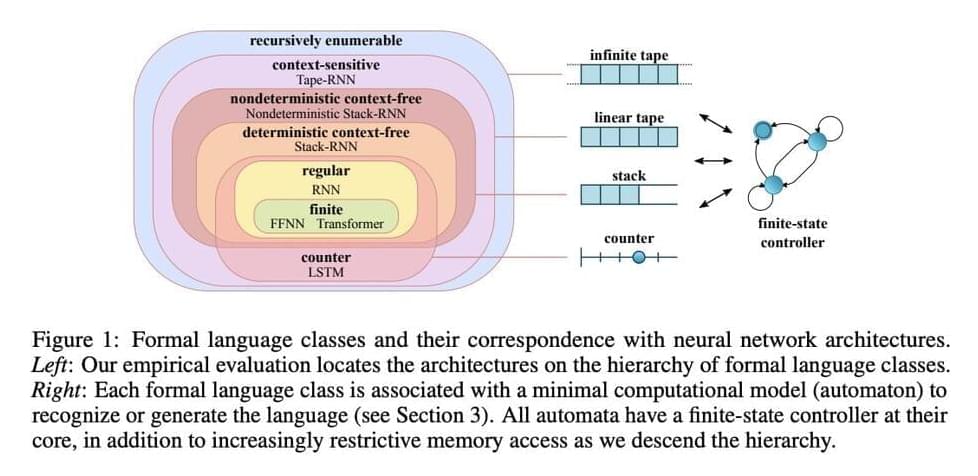

A DeepMind research group conducted a comprehensive generalization study on neural network architectures in the paper ‘Neural Networks and the Chomsky Hierarchy’, which investigates whether insights from the theory of computation and the Chomsky hierarchy can predict the actual limitations of neural network generalization.

Developing powerful machine learning models requires accurate generalization to out-of-distribution inputs, yet how and why neural networks generalize on algorithmic sequence-prediction tasks remains unclear.

The research group performed a thorough generalization study of more than 2000 individual models across 16 tasks, covering cutting-edge neural network architectures as well as memory-augmented neural networks. The battery of sequence-prediction tasks spans all tiers of the Chomsky hierarchy that can be evaluated practically with finite-time computation.
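To make the idea of out-of-distribution generalization on a sequence-prediction task concrete, here is a minimal sketch of a length-generalization split. It is illustrative only: parity checking is used because it is a standard example of a regular-language task, and the function names, dataset sizes, and length ranges are assumptions for illustration, not details taken from the article.

```python
import random


def parity_example(length, rng):
    """Return a random bit sequence and its label (1 if it has an odd number of 1s)."""
    bits = [rng.randint(0, 1) for _ in range(length)]
    return bits, sum(bits) % 2


def make_split(num_examples, min_len, max_len, seed):
    """Build a dataset whose sequence lengths are drawn uniformly from [min_len, max_len]."""
    rng = random.Random(seed)
    return [
        parity_example(rng.randint(min_len, max_len), rng)
        for _ in range(num_examples)
    ]


# Assumed length ranges: train on short sequences, test on strictly longer ones,
# so every test input lies outside the training distribution.
train_set = make_split(num_examples=1000, min_len=1, max_len=40, seed=0)
test_set = make_split(num_examples=1000, min_len=41, max_len=500, seed=1)
```

A model that has learned the underlying rule, rather than statistics of short inputs, should keep its accuracy on `test_set` even though no sequence of that length appeared in `train_set`.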