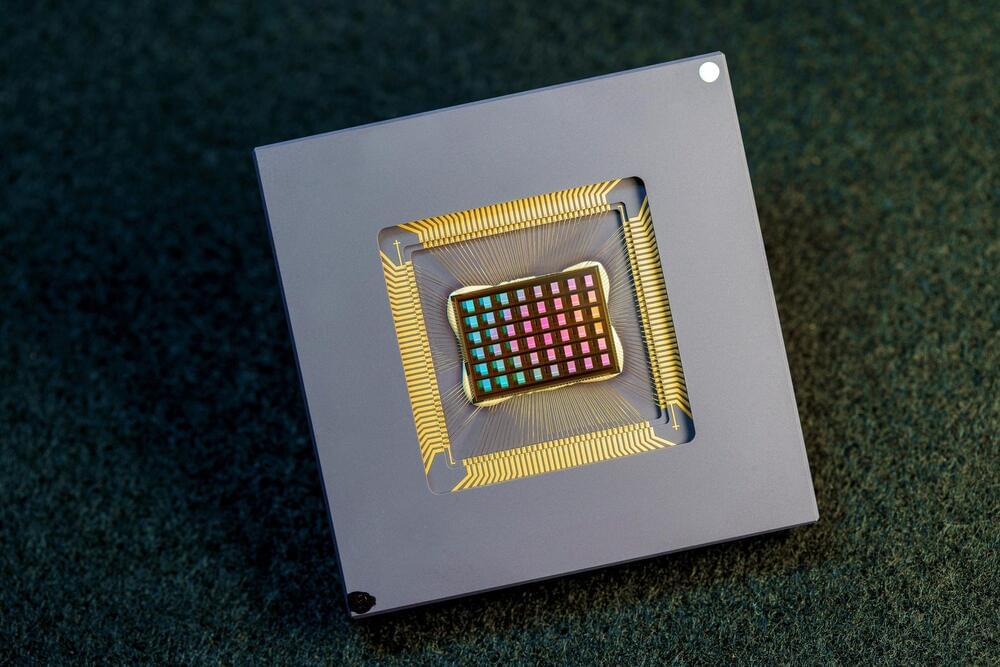

An international team of researchers has designed and built NeuRRAM, a new chip that runs computations directly in memory and can run a wide variety of AI applications. What sets it apart is that it does all this at a fraction of the energy consumed by general-purpose AI computing platforms.

The NeuRRAM neuromorphic chip brings AI a step closer to running on a broad range of edge devices, disconnected from the cloud. Such devices could perform sophisticated cognitive tasks anywhere and at any time, without relying on a network connection to a centralized server. Potential applications touch many facets of daily life, from smartwatches, VR headsets, and smart earbuds to smart sensors in factories and rovers for space exploration.

Not only is the NeuRRAM chip twice as energy efficient as state-of-the-art “compute-in-memory” chips, an innovative class of hybrid chips that run computations in memory, but it also delivers results that are just as accurate as those of conventional digital chips. Conventional AI platforms, by contrast, are much bulkier and are typically constrained to large data servers operating in the cloud.