However, AI functionality on these tiny edge devices is limited by the energy a battery can supply, so improving energy efficiency is crucial. In today’s AI chips, data processing and data storage happen in separate places: a compute unit and a memory unit. Moving data back and forth between these units consumes most of the energy during AI processing, so reducing data movement is the key to addressing the energy issue.

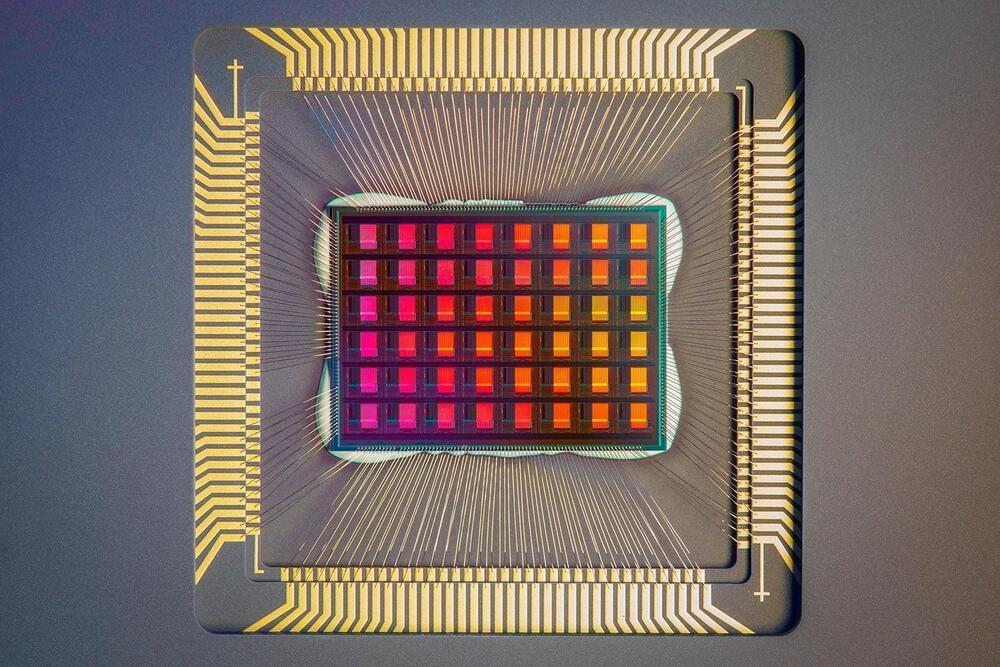

Stanford University engineers have come up with a potential solution: a novel resistive random-access memory (RRAM) chip that does the AI processing within the memory itself, eliminating the separation between the compute and memory units. Their “compute-in-memory” (CIM) chip, called NeuRRAM, is about the size of a fingertip and does more work on limited battery power than current chips can.
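To illustrate what “processing within the memory” means, here is a minimal Python sketch of an idealized RRAM crossbar, a common textbook picture of compute-in-memory. Neural-network weights are stored as cell conductances, inputs are applied as row voltages, and each column wire collects a current equal to a weighted sum, so the multiply-accumulate happens where the weights already sit. This is an assumption-laden toy, not a model of NeuRRAM’s actual circuitry: it assumes ideal linear devices and ignores wire resistance, noise and the analog-to-digital conversion a real chip needs. The function name `crossbar_mvm` is hypothetical.

```python
def crossbar_mvm(conductances, voltages):
    """Return the current collected on each column of an idealized crossbar.

    Hypothetical toy model (not NeuRRAM's design): ideal linear cells,
    no wire resistance, no noise, no ADC quantization.

    conductances[i][j] is the conductance of the memory cell at row i,
    column j (it plays the role of a stored weight); voltages[i] is the
    input voltage applied to row i.
    """
    n_rows = len(voltages)
    if len(conductances) != n_rows:
        raise ValueError("need one row of conductances per input voltage")
    n_cols = len(conductances[0])
    if any(len(row) != n_cols for row in conductances):
        raise ValueError("all conductance rows must have the same length")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law: each cell passes current I = V * G.
            # Kirchhoff's current law: currents on a shared column wire add.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


if __name__ == "__main__":
    weights = [[1, 2],
               [3, 4]]
    inputs = [1, 2]
    print(crossbar_mvm(weights, inputs))
```

For example, `crossbar_mvm([[1, 2], [3, 4]], [1, 2])` returns `[7.0, 10.0]`, the same result as an ordinary vector-matrix product, but in a physical array it would be obtained in one analog step without shuttling the weights out of memory.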

“Having those calculations done on the chip instead of sending information to and from the cloud could enable faster, more secure, cheaper, and more scalable AI going into the future, and give more people access to AI power,” said H.-S. Philip Wong, the Willard R. and Inez Kerr Bell Professor in the School of Engineering.