Early last year, our research team from the Visual Computing Group introduced Swin Transformer, a Transformer-based general-purpose computer vision architecture. It was the first to beat convolutional neural networks on COCO object detection, an important vision benchmark, and it did so by a large margin. Convolutional neural networks (CNNs) have long been the architecture of choice for key computer vision tasks such as classifying images and detecting objects within them. Swin Transformer offers an alternative. By leveraging the Transformer architecture’s adaptive computing capability, Swin can achieve higher accuracy. More importantly, Swin Transformer provides an opportunity to unify the architectures used in computer vision and natural language processing (NLP). In NLP, the Transformer has been the dominant architecture for years, and its ability to be scaled up has benefited the field.

So far, Swin Transformer has shown early signs of its potential as a strong backbone architecture for a variety of computer vision problems. It powers the top entries on many important vision benchmarks, such as COCO object detection, ADE20K semantic segmentation, and CelebA-HQ image generation. It has also been well received by the computer vision research community, winning the Marr Prize for best paper at the 2021 International Conference on Computer Vision (ICCV). Together with works such as CSWin, Focal Transformer, and CvT, which also come from teams within Microsoft, Swin is helping to demonstrate that the Transformer architecture is a viable option for many vision challenges. However, we believe there’s much work ahead, and we’re on an adventurous journey to explore the full potential of Swin Transformer.

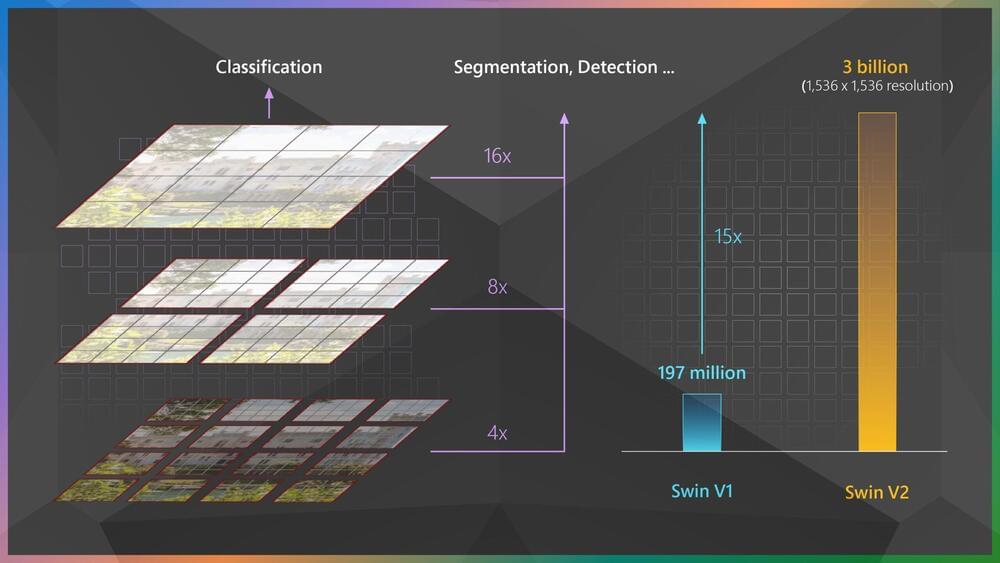

In the past few years, one of the most important discoveries in NLP has been that scaling up model capacity can continually push the state of the art on various NLP tasks. The larger the model, the better it adapts to new tasks with very little or no training data. Can the same be achieved in computer vision, and if so, how?