One of the promising technologies being developed for next-generation augmented/virtual reality (AR/VR) systems is the holographic image display, which uses coherent illumination to emulate the 3D optical waves representing, for example, the objects within a scene. Such displays can potentially simplify the optical setup of a wearable device, leading to compact and lightweight form factors.

An ideal AR/VR experience, however, requires relatively high-resolution images to be formed within a large field-of-view to match the resolution and viewing angles of the human eye. The capabilities of holographic image projection systems are restricted mainly by the limited number of independently controllable pixels in existing image projectors and spatial light modulators.

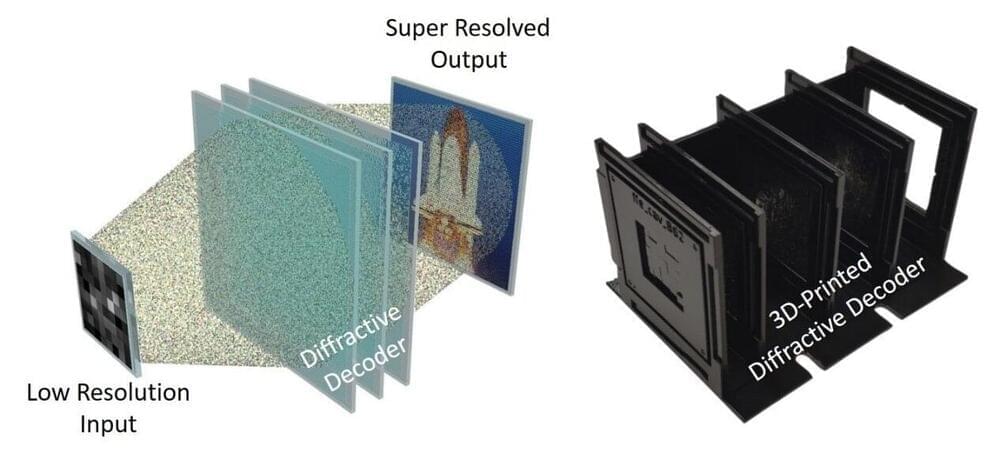

A recent study published in Science Advances reported a deep learning-designed transmissive material that can project super-resolved images using low-resolution image displays. In their paper titled “Super-resolution image display using diffractive decoders,” UCLA researchers led by Professor Aydogan Ozcan used deep learning to spatially engineer transmissive diffractive layers at the wavelength scale, creating a material-based physical image decoder that achieves super-resolution image projection as light is transmitted through its layers.