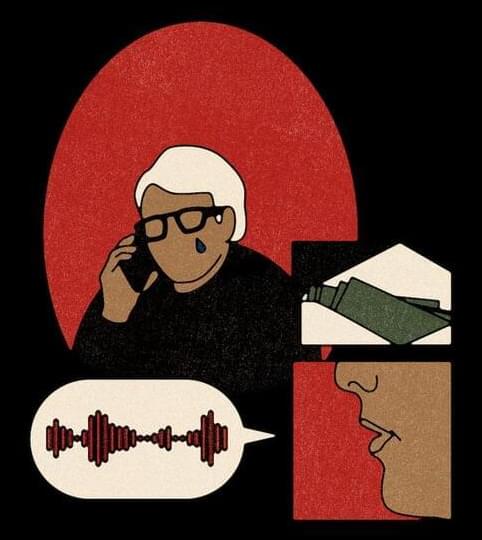

As impersonation scams in the United States rise, Card’s ordeal is indicative of a troubling trend. Technology is making it easier and cheaper for bad actors to mimic voices, convincing people, often the elderly, that their loved ones are in distress. In 2022, impostor scams were the second most popular racket in America, with more than 36,000 reports of people being swindled by those pretending to be friends and family, according to data from the Federal Trade Commission. More than 5,100 of those incidents happened over the phone, accounting for over $11 million in losses, FTC officials said.

Advancements in artificial intelligence have added a terrifying new layer, allowing bad actors to replicate a voice with just an audio sample of a few sentences. Powered by AI, a slew of cheap online tools can turn an audio file into a replica of a voice, letting a swindler make it “speak” whatever they type.

Experts say federal regulators, law enforcement and the courts are ill-equipped to rein in the burgeoning scam. Most victims have few leads to identify the perpetrator, and it’s difficult for the police to trace calls and funds from scammers operating across the world. And there’s little legal precedent for courts to hold the companies that make the tools accountable for their use.