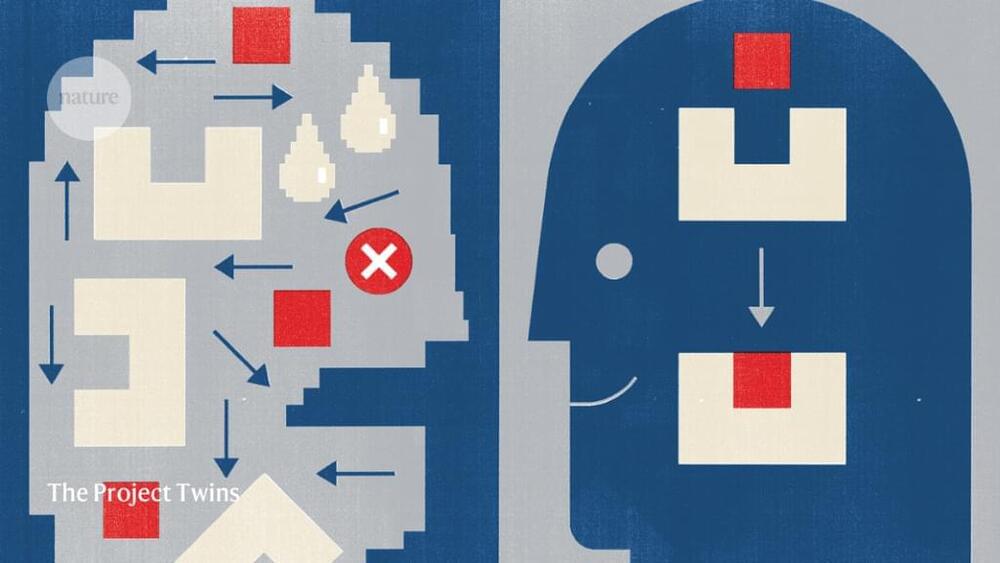

The world’s best artificial intelligence (AI) systems can pass tough exams, write convincingly human essays and chat so fluently that many find their output indistinguishable from people’s. What can’t they do? Solve simple visual logic puzzles.

In a test consisting of a series of brightly coloured blocks arranged on a screen, most people can spot the connecting patterns. But GPT-4, the most advanced version of the AI system behind the chatbot ChatGPT and the search engine Bing, gets barely one-third of the puzzles right in one category of patterns and as little as 3% correct in another, according to a report by researchers this May [1].

The team behind the logic puzzles aims to provide a better benchmark for testing the capabilities of AI systems — and to help address a conundrum about large language models (LLMs) such as GPT-4. Tested in one way, they breeze through what once were considered landmark feats of machine intelligence. Tested another way, they seem less impressive, exhibiting glaring blind spots and an inability to reason about abstract concepts.