Large language models (LLMs) have become a general-purpose approach to problem-solving in embodied artificial intelligence. When agents need to understand the semantic nuances of their environment for efficient control, the reasoning skills of LLMs are crucial. Recent methods, known as “program-of-thought” prompting, use programming languages as an improved prompting system for challenging reasoning tasks. Unlike chain-of-thought prompting, program-of-thought prompting breaks a problem into executable code segments and solves them one step at a time. However, the relationship between the use of programming languages and the development of LLMs’ reasoning skills has not yet received enough research attention. The crucial question remains: when does program-of-thought prompting work for reasoning?

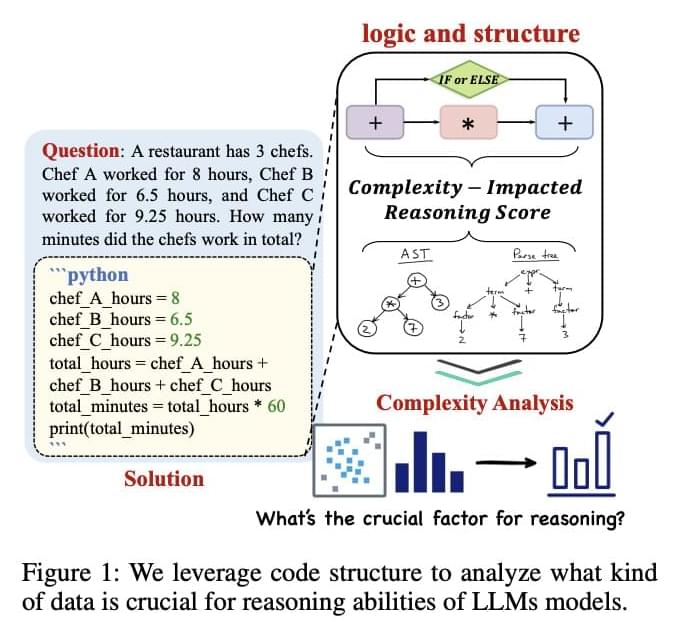

This paper proposes the complexity-impacted reasoning score (CIRS), a metric for the link between code reasoning steps and their effect on LLMs’ reasoning abilities. The authors contend that programming languages are inherently better than serialized natural language at modeling complex structures, and that their procedure-oriented logic helps with problems that require several steps of reasoning. For this reason, the proposed measure assesses code complexity from both a structural and a logical standpoint. In particular, they compute the structural complexity of code reasoning steps (rationales) using an abstract syntax tree (AST). Their method uses three AST indicators (node count, node type, and depth), which together preserve the structural information of the tree and describe the code structure comprehensively.
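The three AST indicators can be illustrated with Python's standard `ast` module. This is a minimal sketch, not the authors' implementation: the function name `ast_structure_features` and the returned keys are hypothetical, and how the paper normalizes or combines the three values into a single structural score is not covered here.

```python
import ast


def ast_structure_features(source: str) -> dict:
    """Return node count, number of distinct node types, and tree depth.

    Hypothetical helper for illustration only. Every AST node is counted,
    including context markers such as Load and Store.
    """
    tree = ast.parse(source)
    nodes = list(ast.walk(tree))

    def depth(node: ast.AST) -> int:
        # Depth of a leaf is 1; otherwise 1 plus the deepest child.
        children = list(ast.iter_child_nodes(node))
        return 1 + max((depth(child) for child in children), default=0)

    return {
        "node_count": len(nodes),
        "node_types": len({type(n).__name__ for n in nodes}),
        "tree_depth": depth(tree),
    }


simple = ast_structure_features("x = 1")
nested = ast_structure_features(
    "total = 0\n"
    "for i in range(10):\n"
    "    if i % 2 == 0:\n"
    "        total = total + i\n"
)
print(simple)
print(nested)
```

Under this sketch, the nested loop yields larger values on all three indicators than the single assignment, which matches the intuition that deeper, more varied trees are structurally more complex.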

The researchers, from Zhejiang University, Donghai Laboratory, and the National University of Singapore, determine logical complexity by combining coding difficulty with cyclomatic complexity, drawing inspiration from the ideas of Halstead and McCabe. This takes the code’s operators, operands, and control flow into account, so the complexity of the logic within the code can be calculated explicitly. Through an empirical investigation using CIRS, they find that current LLMs have a limited understanding of symbolic information such as code, and that not all complex code data can be learned and understood by LLMs. Low-complexity code blocks lack the necessary information, while high-complexity code blocks can be too difficult for LLMs to understand. To effectively improve the reasoning abilities of LLMs, only code data with an appropriate level of complexity in both structure and logic, neither too simple nor too complex, is needed.
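The two ingredients of logical complexity can be approximated on Python code as follows. This is a rough sketch under stated assumptions, not the paper's procedure: the function names are hypothetical, cyclomatic complexity is estimated as decision points plus one, and Halstead difficulty uses the standard definition D = (n1 / 2) × (N2 / n2), where n1 is the number of distinct operators, n2 the number of distinct operands, and N2 the total number of operands. Only AST operator nodes count as operators and only names and constants count as operands, which is a simplification. How the paper combines the two values into one score is not shown.

```python
import ast

# Node types treated as decision points (a simplifying assumption).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity as decision points + 1."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # Each extra operand of and/or adds one branch.
            decisions += len(node.values) - 1
    return decisions + 1


def halstead_difficulty(source: str) -> float:
    """Approximate Halstead difficulty D = (n1 / 2) * (N2 / n2)."""
    operators = set()   # distinct operator kinds (n1)
    operands = []       # every operand occurrence (N2)
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.operator, ast.unaryop, ast.cmpop, ast.boolop)):
            operators.add(type(node).__name__)
        elif isinstance(node, ast.Name):
            operands.append(node.id)
        elif isinstance(node, ast.Constant):
            operands.append(repr(node.value))
    distinct_operands = set(operands)  # n2
    if not distinct_operands:
        return 0.0
    return (len(operators) / 2) * (len(operands) / len(distinct_operands))


snippet = (
    "total = 0\n"
    "for i in range(10):\n"
    "    if i % 2 == 0 and i > 3:\n"
    "        total = total + i\n"
)
print(cyclomatic_complexity(snippet))
print(halstead_difficulty(snippet))
```

Straight-line code with no branches scores 1 on the cyclomatic measure and close to 0 on difficulty in this sketch, while heavily branched code with many operators scores high on both, which mirrors the low-complexity and high-complexity extremes discussed above.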