Large Language Models (LLMs) are exceptionally demanding on both CPU and memory, but Apple is said to be experimenting with storing them on flash storage, likely to make them easily accessible on multiple devices. The technology giant also wishes to make LLMs ubiquitous across its iPhone and Mac lineup and is exploring ways to make this possible.

Storing LLMs on flash memory has been difficult; Apple aims to fix this on machines with limited capacity

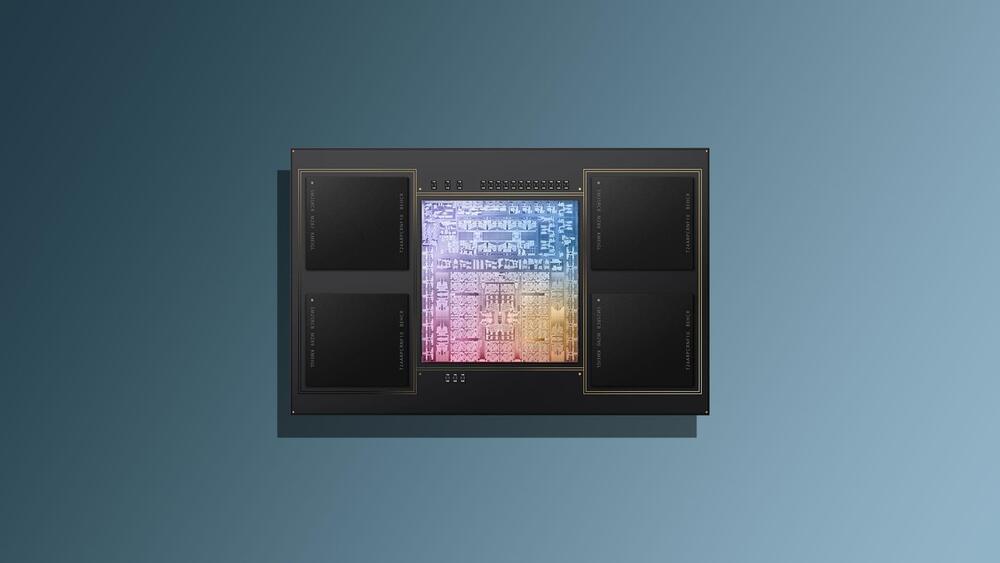

Under typical conditions, Large Language Models require AI accelerators and a large DRAM capacity to hold the model. As reported by TechPowerUp, Apple is working to bring the same technology to devices with limited memory capacity, and has published a new paper describing its approach. iPhones have limited memory too, so Apple researchers have developed a technique that uses flash chips to store the AI model’s data.
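To make the general idea concrete, here is a minimal Python sketch of keeping model data on storage and pulling only the needed pieces into memory. It is not Apple's method, whose details are not covered here; the file layout, the matrix size, and the `write_weights` and `load_rows` helpers are all hypothetical and chosen purely for illustration.

```python
import mmap
import os
import tempfile
from array import array

ROWS, COLS = 1024, 256            # hypothetical weight matrix shape
ITEM = array("f").itemsize        # bytes per single-precision float

def write_weights(path):
    """Write a dummy weight matrix to storage, one row at a time."""
    with open(path, "wb") as f:
        for r in range(ROWS):
            # Every value in row r is simply r, so rows are easy to verify.
            array("f", [float(r)] * COLS).tofile(f)

def load_rows(path, row_ids):
    """Copy only the requested rows from the file into memory."""
    rows = {}
    with open(path, "rb") as f, \
         mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        for r in row_ids:
            start = r * COLS * ITEM
            row = array("f")
            # Slicing the map reads just this byte range from the file.
            row.frombytes(mm[start:start + COLS * ITEM])
            rows[r] = row
    return rows

path = os.path.join(tempfile.mkdtemp(), "weights.bin")
write_weights(path)
needed = load_rows(path, [3, 17, 512])
print(len(needed), len(needed[3]))
```

Here the full matrix lives in the file, and only three of its 1,024 rows are ever copied into RAM. The trade-off is that each access to storage is slower than an access to DRAM, which is why a scheme like this depends on reading as little as possible.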