In machine learning, increasingly large networks with ever more parameters are being trained, and training them has become prohibitively expensive. Although this approach has been successful, it is still poorly understood why overparameterized models are necessary, even as the cost of training them continues to rise exponentially.

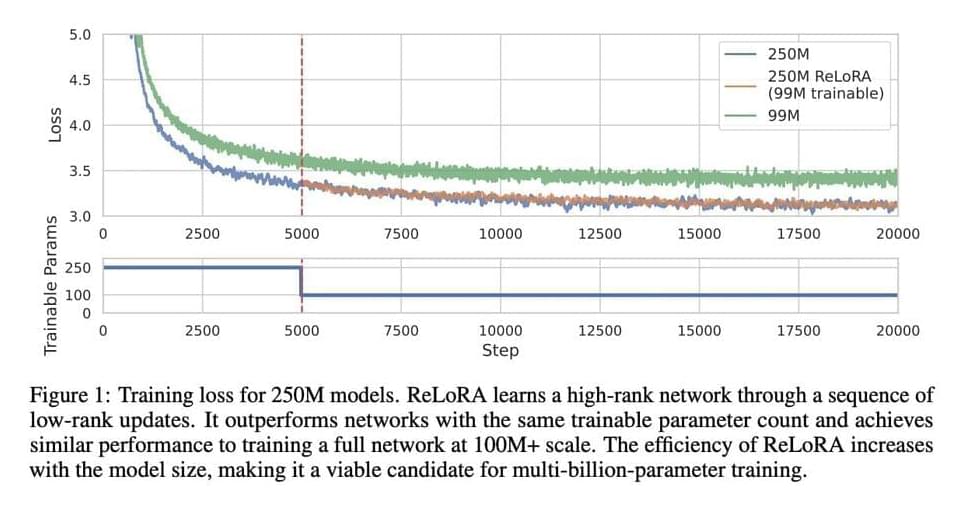

A team of researchers from the University of Massachusetts Lowell, EleutherAI, and Amazon developed ReLoRA, a method that uses low-rank updates to train high-rank networks. Although each individual update is low-rank, ReLoRA accomplishes a high-rank update overall and delivers performance comparable to conventional neural network training.
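To make the idea of a high-rank update built from low-rank pieces concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the function name `merged_low_rank_updates`, the matrix sizes, and the use of random factors in place of trained ones are all assumptions chosen for illustration. It relies only on the linear-algebra fact that a sum of several independent rank-`r` matrices can have rank well above `r`.

```python
import numpy as np

def merged_low_rank_updates(dim, rank, num_updates, seed=0):
    """Sum `num_updates` independent low-rank matrices B @ A.

    Hypothetical helper: random factors stand in for trained ones.
    """
    rng = np.random.default_rng(seed)
    total = np.zeros((dim, dim))
    for _ in range(num_updates):
        B = rng.standard_normal((dim, rank))  # dim x rank factor
        A = rng.standard_normal((rank, dim))  # rank x dim factor
        total += B @ A                        # each term has rank <= `rank`
    return total

single = merged_low_rank_updates(dim=64, rank=4, num_updates=1)
merged = merged_low_rank_updates(dim=64, rank=4, num_updates=8)

single_rank = np.linalg.matrix_rank(single)
merged_rank = np.linalg.matrix_rank(merged)
print("rank of one low-rank update:", single_rank)
print("rank of eight merged updates:", merged_rank)
```

A single update is limited to rank 4 in this setup, while the sum of eight can reach rank 32, which is the sense in which a sequence of low-rank updates can add up to a high-rank change.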

Scaling laws show a strong power-law dependence between network size and performance across different modalities, which supports overparameterization and resource-intensive neural networks. The Lottery Ticket Hypothesis offers an alternative perspective, suggesting that overparameterization can be minimized. Low-rank fine-tuning methods, such as LoRA and Compacter, were developed to address the limitations of low-rank matrix factorization approaches.
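For readers unfamiliar with low-rank fine-tuning, the following is a rough sketch of a LoRA-style layer in NumPy, not the reference implementation. The helper name `lora_forward` and the layer sizes are assumptions made for illustration. The frozen weight `W` is left untouched and only the two small factors `A` and `B` would be trained, so the number of trainable parameters drops sharply.

```python
import numpy as np

def lora_forward(x, W, A, B):
    """Apply a linear layer whose weight is W plus a low-rank correction B @ A.

    Hypothetical helper for illustration; W is frozen, A and B are trainable.
    """
    return x @ (W + B @ A).T

# Illustrative sizes (assumptions, not taken from the article).
d_in, d_out, rank = 1024, 1024, 8
rng = np.random.default_rng(0)

W = rng.standard_normal((d_out, d_in))        # frozen pretrained weight
A = rng.standard_normal((rank, d_in)) * 0.01  # small random init
B = np.zeros((d_out, rank))                   # zero init: adapter starts as a no-op

x = rng.standard_normal((2, d_in))            # batch of two inputs
y = lora_forward(x, W, A, B)

full_params = W.size
lora_params = A.size + B.size
print("trainable parameters, full fine-tuning:", full_params)
print("trainable parameters, low-rank adapter:", lora_params)
```

Because `B` starts at zero, the adapted layer initially computes exactly the same output as the frozen layer, and training only moves the low-rank correction.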