Any activity that requires comprehension and production in one or more modalities is considered a multimodal task; these activities can be extremely varied and lengthy. It is challenging to scale previous multimodal systems because they rely heavily on gathering a large supervised training set and developing task-specific architecture, which must be repeated for every new task. In contrast, present multimodal models have not mastered people’s ability to learn new tasks in context, meaning that they can do so with minimal demonstrations or instructions. Generative pretrained language models have recently shown impressive skills in learning from context.

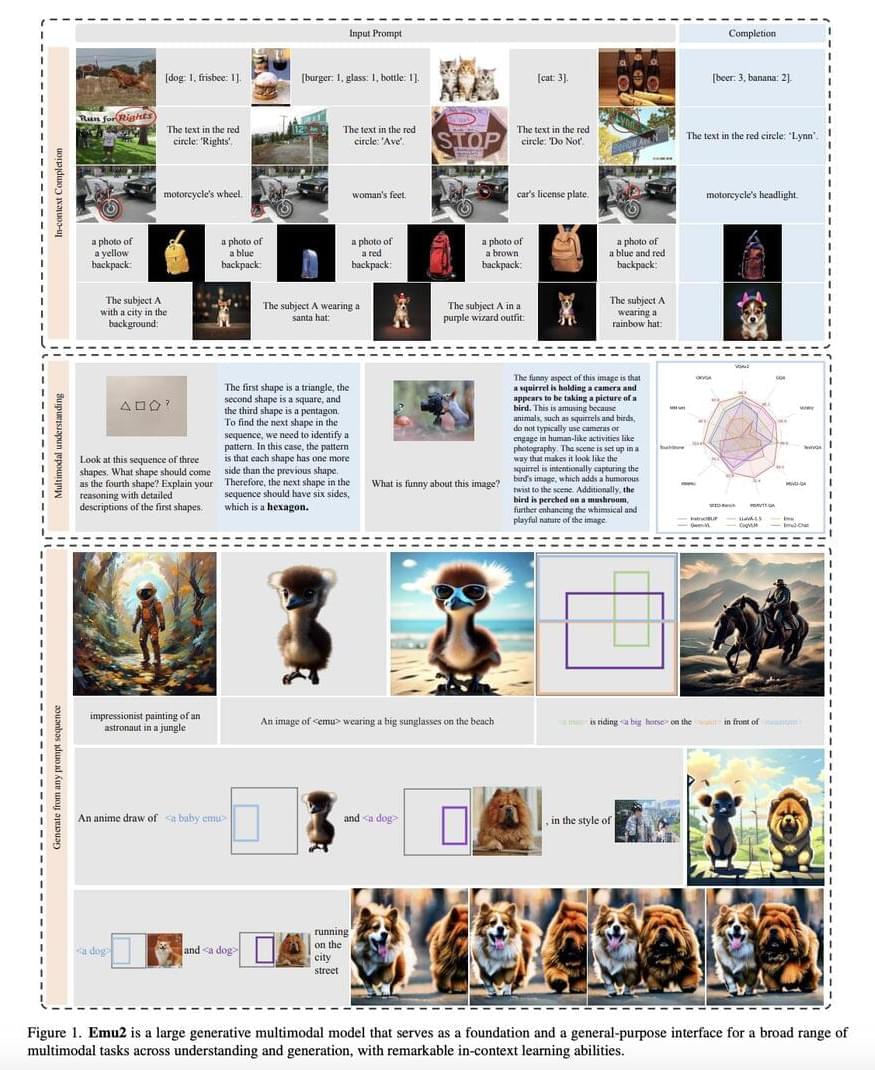

New research by researchers from Beijing Academy of Artificial Intelligence, Tsinghua University, and Peking University introduces Emu2, a 37-billion-parameter model, trained and evaluated on several multimodal tasks. Their findings show that when scaled up, a multimodal generative pretrained model can learn similarly in context and generalize well to new multimodal tasks. The objective of the predict-the-next-multimodal-element (textual tokens or visual embeddings) is the only one used during Emu2’s training. This unified generative pretraining technique trains models by utilizing large-scale multimodal sequences, such as text, image-text pairs, and interleaved image-text video.

The Emu2 model is generative and multimodal; it learns in a multimodal setting to predict the next element. Visual Encoder, Multimodal Modeling, and Visual Decoder are the three main parts of Emu2’s design. To prepare for autoregressive multimodal modeling, the Visual Encoder tokenizes all input images into continuous embeddings, subsequently interleaved with text tokens. The Visual Decoder turns the regressed visual embeddings into a movie or image.