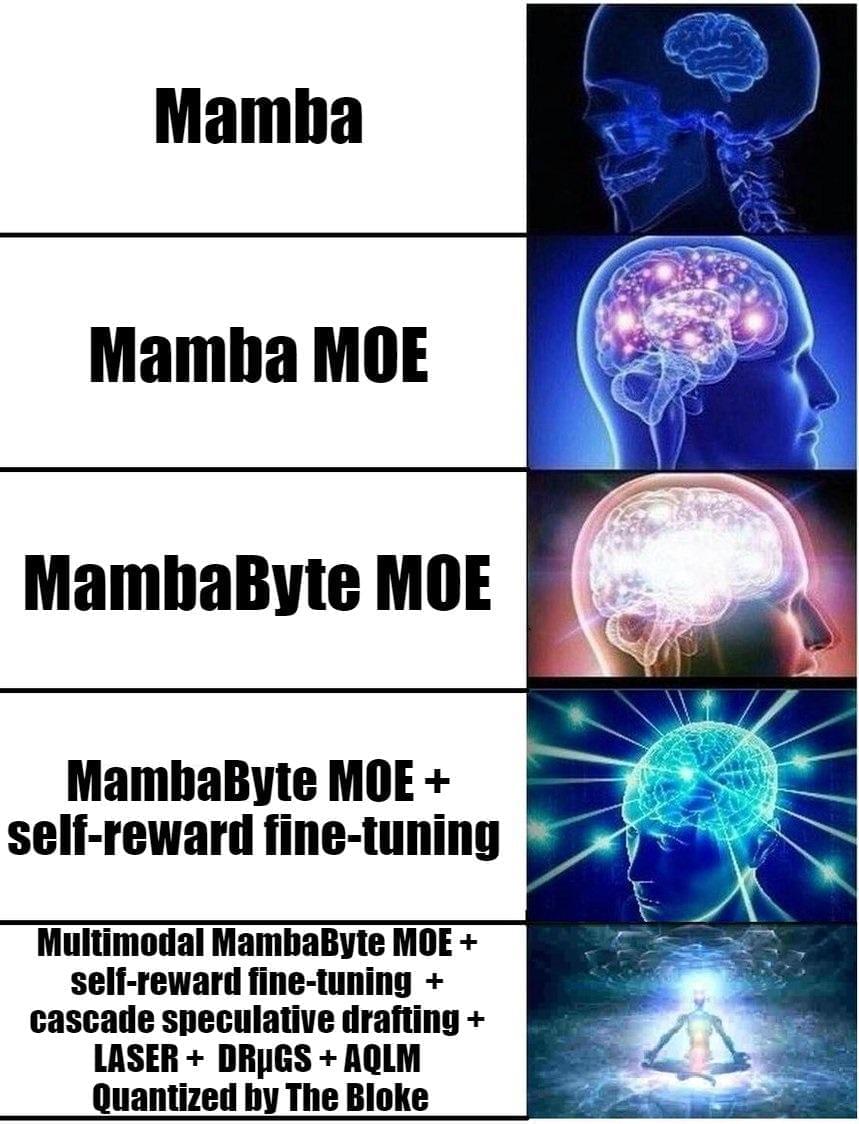

In the fast-moving field of Artificial Intelligence (AI), the progression from one foundation model to the next has marked a significant shift. A growing series of models and techniques, including Mamba, Mamba MoE, MambaByte, and more recent approaches such as Cascade, Layer-Selective Rank Reduction (LASER), and Additive Quantization for Language Models (AQLM), has pushed model capability and efficiency to new levels. The famous ‘Big Brain’ meme captures this progression succinctly, humorously illustrating the rise from ordinary competence to extraordinary brilliance as one delves into the intricacies of each approach.

Mamba is a linear-time sequence model that stands out for its fast inference. Foundation models are predominantly built on the Transformer architecture because of its effective attention mechanism. However, Transformers become inefficient on long sequences, since the cost of self-attention grows quadratically with sequence length. In contrast to conventional attention-based Transformer architectures, Mamba's authors built on structured State Space Models (SSMs) to address this inefficiency on long sequences.
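To make the linear-time idea concrete, here is a minimal sketch of a plain discrete state space recurrence in pure Python. It is not Mamba's actual implementation: it assumes a diagonal, time-invariant state matrix and omits the input-dependent (selective) parameters and hardware-aware scan that Mamba adds. The function name `ssm_scan` and the parameters `a`, `b`, and `c` are illustrative only.

```python
from typing import List, Sequence


def ssm_scan(
    x: Sequence[float],
    a: Sequence[float],
    b: Sequence[float],
    c: Sequence[float],
) -> List[float]:
    """Run a diagonal linear state space recurrence over a 1-D input.

    h_t = a * h_{t-1} + b * x_t   (elementwise over the state dimension)
    y_t = sum(c * h_t)

    Assumption: a, b, c are fixed per-state coefficients (hypothetical
    names); Mamba instead makes such parameters depend on the input.
    """
    state = [0.0] * len(a)
    outputs: List[float] = []
    for x_t in x:  # a single pass, so work grows linearly with len(x)
        # Update every state channel from its previous value and the input.
        state = [a_i * h_i + b_i * x_t for a_i, h_i, b_i in zip(a, state, b)]
        # Read out one scalar per time step from the current state.
        outputs.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return outputs


if __name__ == "__main__":
    # An impulse input decays geometrically through the state.
    print(ssm_scan([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[2.0]))
```

Each step touches only the fixed-size state, so processing a sequence of length n takes work proportional to n, whereas full self-attention compares every position with every other position.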