GaLore.

Memory-efficient LLM training by gradient low-rank projection.

V/@animaanandkumar.

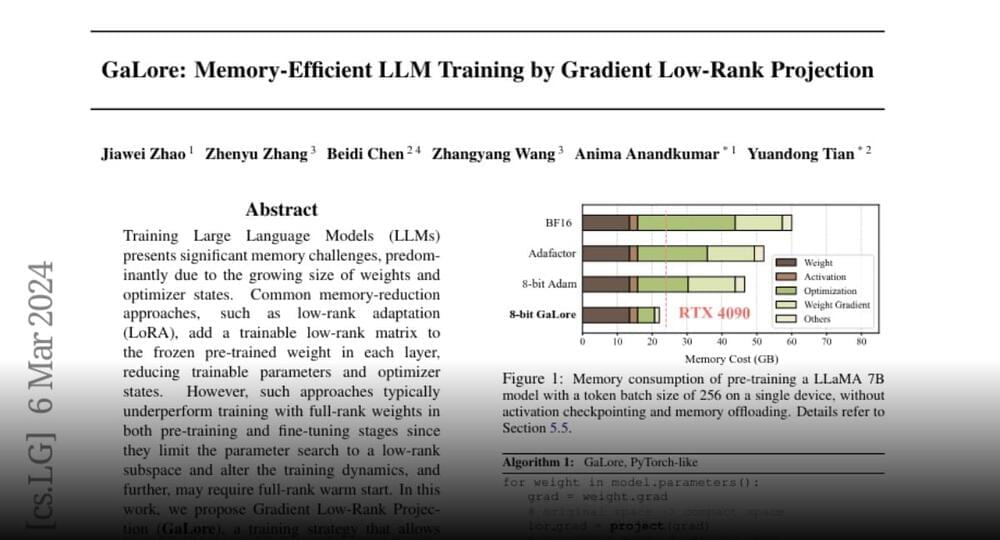

For the first time, we show that the Llama 7B LLM can be trained on a single consumer-grade GPU (RTX 4090) with only 24GB memory.

Join the discussion on this paper page.