Meta announces MA-LMM

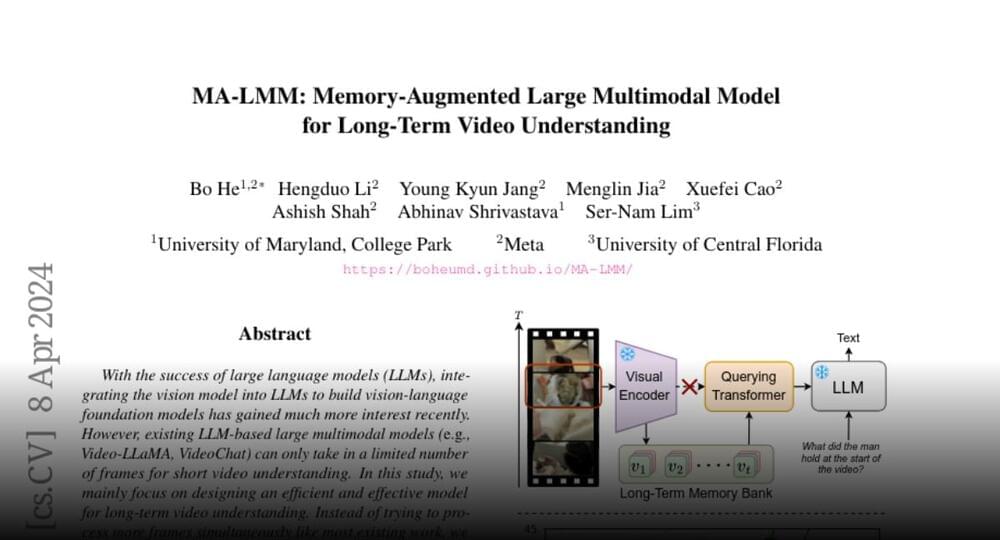

Memory-augmented large multimodal model for long-term video understanding.

With the success of large language models (#LLMs), integrating the vision model into LLMs to build vision-language #foundation models has gained much more interest…

Join the discussion on this paper page.