Wearable displacement sensors, which are attached to the human body to detect movements in real time and convert them into electrical signals, are being actively studied. However, research on stretchable displacement sensors has been held back by limitations such as low stretchability and complex manufacturing processes.

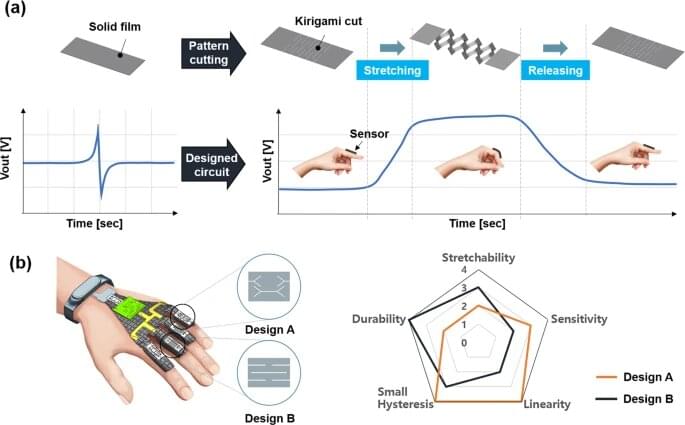

A displacement sensor that is easy to manufacture and offers both high sensitivity and high stretchability could be attached to the human body to capture large movements of joints or fingers for use in applications such as AR and VR. A research team led by Sung-Hoon Ahn, professor of mechanical engineering at Seoul National University, has developed a piezoelectric strain sensor with high sensitivity and high stretchability based on kirigami-style cut patterns.

In this research, the team manufactured a stretchable piezoelectric displacement sensor by applying a kirigami structure to a film-type piezoelectric material, then evaluated its performance. The sensing characteristics varied with the kirigami pattern, and the sensor showed higher sensitivity and stretchability than existing technologies. Using the developed sensor, the team produced wireless haptic gloves that work with VR technology and successfully played a piano with them.