Optical technologies, which leverage the behavior and properties of light, underpin many existing technological tools, most notably fiber-optic communication systems that enable high-speed communication between devices over both long and short distances. Optical signals have a high information capacity and can be transmitted over long distances.

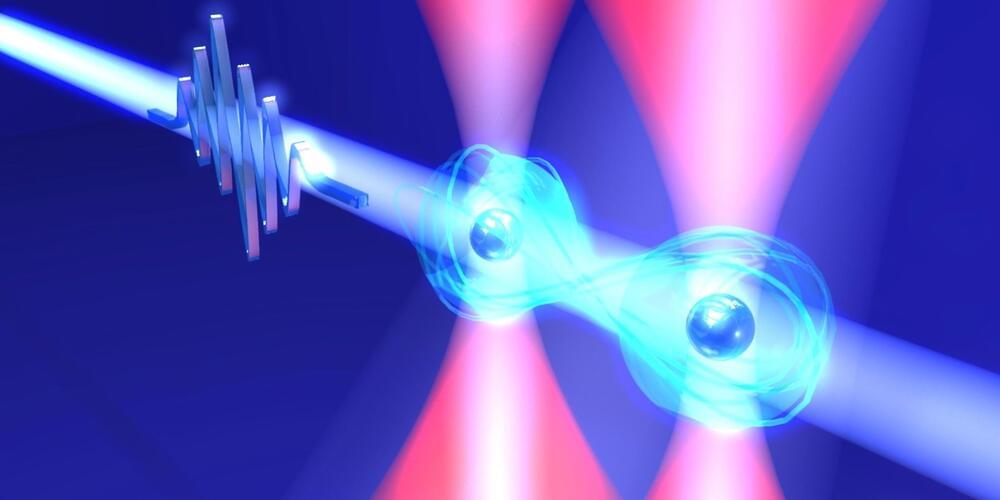

Researchers at the California Institute of Technology (Caltech) have recently developed a new device that could help overcome some of the limitations of existing optical systems. The device, introduced in a paper published in Nature Photonics, is based on lithium niobate and can switch ultrashort light pulses using an extremely low optical pulse energy of only tens of femtojoules.

“Unlike electronics, optics still lacks efficiency in required components for computing and signal processing, which has been a major barrier for unlocking the potentials of optics for ultrafast and efficient computing schemes,” Alireza Marandi, lead researcher for the study, told Phys.org. “In the past few decades, substantial efforts have been dedicated to developing all-optical switches that could address this challenge, but most of the energy-efficient designs suffered from slow switching times, mainly because they either used high-Q resonators or carrier-based nonlinearities.”