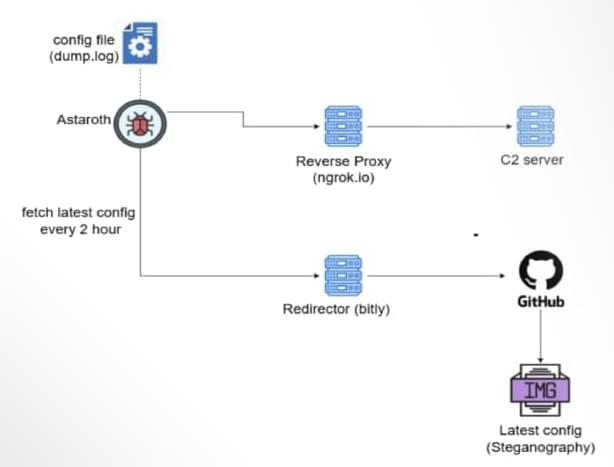

Cybersecurity researchers are calling attention to a new campaign that delivers the Astaroth banking trojan and uses GitHub as the backbone of its operations to stay resilient in the face of infrastructure takedowns.

“Instead of relying solely on traditional command-and-control (C2) servers that can be taken down, these attackers are leveraging GitHub repositories to host malware configurations,” McAfee Labs researchers Harshil Patel and Prabudh Chakravorty said in a report.

“When law enforcement or security researchers shut down their C2 infrastructure, Astaroth simply pulls fresh configurations from GitHub and keeps running.”