Homer Simpson on NASA and Bart Simpson on the Book of Five Rings and the Noda Secret!

Homer: Son, it has been said that Kaizen is “good change.”

Bart: Dad, good change? Do you mean the throttle?

Homer: Son, what do you mean by throttle?

Bart: Dad, the gas pedal that went lunatic!

Homer: Son, lunatic how?

Bart: Dad, the gas pedal that went out of control, harming the automobile and the driver and passengers in said automobile.

Homer: Son, that is impossible, as Kaizen, through its doppelgängers, (a) the Toyota Production System (TPS) and (b) the “…Thinking People System…”, was in place to preclude what you suggest.

Bart: Dad, I don’t suggest anything, as the victims and casualties are in the news, in the courts, and in cemeteries.

Homer: Son, really? I didn’t know that. Why do you think this happened?

Bart: Dad, the gas pedal is a subsystem to which huge technical complexity was added, layer after layer, device after device, until the technical complexity exceeded the total knowledge of Toyota worldwide.

Homer: Son, oh my big “G.” So Drew says that?

Bart: Dad, yes, he does!

Homer: Son, and beyond Drew and Andy, who else supports that?

Bart: Dad, well, the flaw was so complex that it was lucidly identified and elucidated by NASA, and never by JAXA.

Homer: Son, JAXA? Is that the detergent ambiword the Illuminati play around with?

Bart: Dad, no, no, no! JAXA is not a Procter & Gamble detergent but Japan’s NASA.

Homer: Son, and what happened to the instituted “good change” pertaining to the murderous gas pedal?

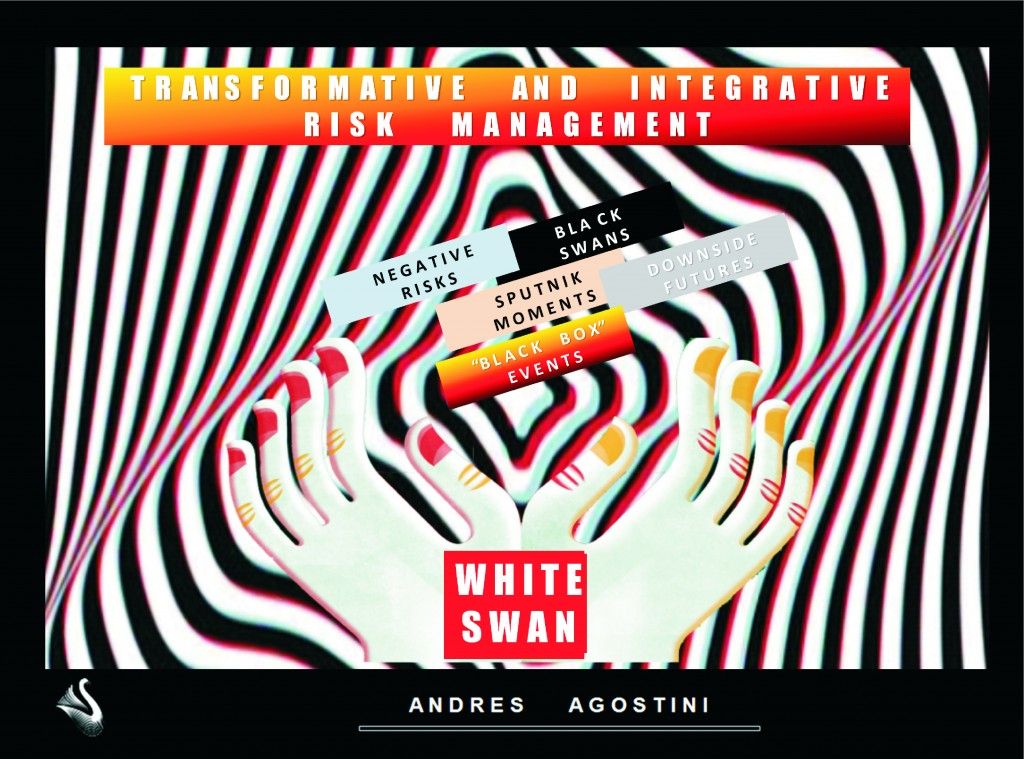

Bart: Dad, you see, however tough your Quality Assurance methodology may be, neither Kaizen nor TPS is instrumented and operationalized with the Systems Approach and the non-theological applied Omniscience perspective, which is what brings about White Swan “Transformative and Integrative” Best Practices!

Homer: Son, are you suggesting that this carmaker is myopic in manufacturing?

Bart: Dad, to a great extent that carmaker does beautifully, but as the 360-degree flow of processes and contents is not thoroughly pursued, they unknowingly and unfailingly give birth to Black Swans, Black Swans that show up frequently and out of the blue.

Homer: Son, what does the Australian black rara avis have to do with this?

Bart: Dad, you got it all wrong again. By Black Swan I mean the incessant fostering of the frequent impact of the dramatic highly improbable (ISBN: 978-0812973815 and at http://en.wikipedia.org/wiki/Black_swan_theory).

Homer: Son, is there a Structural Counterpoint to this stupid Black Swan stuff and to Suboptimal Kaizen and TPS? Please, son, tell me whether there is a fundamental solution to such simpletonness.

Bart: Dad, yes, there is, but I will not tell you the Secret: the new name with which Toyota’s Production Director, Mr. Noda, kindly baptized Andy. THE COUNTERPOINT-plus, so to speak, is: “… The White Swan’s Beyond Eureka and Sputnik Moments: How To Fundamentally Cope With Corporate Litmus Tests and With The Impact of the Dramatic Highly Improbable And Succeed and Prevail Through Transformative and Integrative Risk Management! …” (ASIN: B00KMY0DLK and at http://amzn.to/1zi1RDY)

Homer: Son, where did the Noda Secret take place and why?

Bart: Dad, at a long meeting between Andy and Toyota’s chairman, CEO, CFO, and Director of Production, in which Andy gave a lengthy and highly detailed explanation of the technical shortcomings he found in both Kaizen and TPS.

Homer: Son, oh my God! And the Nippon Honor got bruised in there?

Bart: Dad, yes, the Director of Production wanted to assassinate Andy, but the chairman and the CEO remained calm and tranquil.

Homer: Son, what was the final outcome of said business dealing?

Bart: Dad, well, bruised egos and all, they contracted Andy so that he could institute his own Transformative and Integrative Risk Management, never implementing Kaizen or TPS.

Homer: Son, don’t they also do Lean and Mean (ISBN: 978-1572302525), Lean Manufacturing, Agile, Extreme Project Management, Six Sigma, and the like?

Bart: Dad, yes, they do, but they fail frequently anyway!

Homer: Son, why? Why? Why?

Bart: Dad, to that question, Andy relentlessly argues that they DO NOT observe a Womb-to-Tomb Management Prescription by His Excellency George W. Rutler, S.T.D. (Doctor of Sacred Theology).

Homer: Son, can you boil the aforementioned Management Prescription by said Doctor of Sacred Theology down to a couple of words?

Bart: Dad, yes, I readily can, as George W. Rutler, S.T.D., observes verbatim, “… we [and they and everyone else on Earth] need a great Dose of Reality [most urgently]…”

Homer: Son, the Creator has spoken, for there are, in all truth, so many Ivy League and Oxbridge postdocs who are both myopic and narrow-minded in a world that abjectly rejects the must-do of staying forever up to date.

Bart: Dad, touché!

Homer: Son, do Toyota and other global corporations of great repute from the Far East, say, embrace Servant Leadership (ISBN: 978-0761513698) to further underpin their collective efforts toward Quality Assurance and Continuous Process Improvement?

Bart: Dad, yes, and they immeasurably overestimate the WHOLE FASHIONABLE FAD about Leadership, even though the remarkable Harvard University leadership professor Barbara Kellerman, PhD, among a zillion others, has indicated in “…The End of Leadership…” (ISBN: 978-0062069160) that leadership has terrifyingly and UNIVERSALLY failed because of a rampant lack of ETHICS and an absence of LUCRATIVE BUSINESS OUTCOMES. How can you Kaizen something, that is, “…change it for the better…”, if you violate ethics daily and your end results are ineffectual, ludicrous, and madly in love with bankruptcy and decay?

Homer: Son, and what about (a) the “5S” (http://en.wikipedia.org/wiki/5S_%28methodology%29) and (b) the “5 Whys” (http://en.wikipedia.org/wiki/5_Whys)?

Bart: Dad, with the great advent of exponential technologies (http://en.wikipedia.org/wiki/Exponential_Technology) since the fall of the Iron Curtain, and with the loss of “…corporate decorum,…”, they simply DO NOT SUFFICE, and a must-do rebirth, overhaul, and re-engineering APPROACH seems necessary to fix them structurally. All of this while, across the entire Earth, a FORCEFUL ZEITGEIST flows and overflows, characterized by elites propelling destroyed à-la-ganters “…ethics…” and imposing their anti-values in order to make their status quo fatter and more obese, not Lean!

Homer: Son, but in Fukushima, managers there did their very best, didn’t they?

Bart: Dad, no, they didn’t! Those were un-coped-with black swans.

Homer: Son, why not?

Bart: Dad, Andy argues that organizations from the Fukushima part of the world ARE RISK AVERSE AND ARE UTTERLY AGAINST BEST-IN-CLASS WESTERN MODALITIES of Risk Management (chiefly those practices from the West’s western-most region in the Northern Hemisphere). Andy asserts that he has observed this far too frequently, not only while working with Mitsubishi Motors and Toyota Motors, but also while working closely with the INSURANCE COMPANY called Tokio Marine Group (http://www.tokiomarinehd.com/en/group/index.html).

Homer: Son, and what’s wrong with hating risks before and after they cause devastation?

Bart: Dad, those sages from the Far East are nice, but they concentrate only on the “…Line of Production…”, the “…Supply Chain…”, the “…Workshop Benches…”, the “…Other Primary, Secondary and Tertiary Functions of the Core Business…”, and the inner-most environment of the Factory, the Head Office, or the Throughputting Façades, EXPONENTIALLY IGNORING AND HENCE FAILING TO MANAGE MANY OTHER WASTES, DEFECTS, RISKS, THREATS, BLACK SWANS, BLACK-BOX EVENTS, DOWNSIDE FUTURES, SPUTNIKS, KNOWN UNKNOWNS, AND UNKNOWN UNKNOWNS STEMMING FROM THE OUTER-MOST ENVIRONMENT.

Homer: Son, so they love their façades because they feel untouchable by the external environment and the outer-most external environment?

Bart: Dad, touché!

Homer: Son, so you mean to say that the seamless integration of Continuous Process Improvement, Kaizen, the Toyota Production System, Agile, Lean, Lean Production, Six Sigma, Extreme Project Management, Servant Leadership, and so forth is NOT ENOUGH TO COUNTERMEASURE MANY OTHER WASTES, DEFECTS, RISKS, THREATS, BLACK SWANS, BLACK-BOX EVENTS, DOWNSIDE FUTURES, SPUTNIKS, KNOWN UNKNOWNS, AND UNKNOWN UNKNOWNS STEMMING FROM THE OUTER-MOST ENVIRONMENT?

Bart: Dad, Andy, in his White Swan book and other publications, has indefatigably argued that Flawness must be a hugely studied science and that issues must be dealt with preemptively, before they happen, through the systems approach and with the applied omniscience perspective in due place, IN ORDER TO AVOID, to give just one meager example, “… AUTO RECALLS SURPASS 60 MILLION IN 2014, NEARLY TWICE THE PREVIOUS U.S. RECORD …” (http://bit.ly/1Clgyap). ANDY INSISTS AND INSISTS THAT A POTENTIAL DISRUPTION IS A POTENTIAL DISRUPTION WHEREVER IT COMES FROM, AND THAT SUCH DISRUPTIONS CAN BE FUNDAMENTALLY SOLVED AND PROFITED FROM, EARLY, AS PER HIS WORDS, NUMBERS, FACTS, AND STATS.

Homer: Son, under his Disruption Potential, does Andy’s White Swan idea include, say, the disasters at Fukushima and at Sony Corporation, to cite just two instances?

Bart: Dad, a quick answer is an IRONCLAD YES. THE LONGER ANSWER INCLUDES THE FOLLOWING CASES, WHOSE WHITE SWAN TRANSFORMATIVE AND INTEGRATIVE RISK MANAGEMENT WAS NEVER EVER INSTITUTED:

EXTREMELY SHORT LIST BY BART BEGINS NOW:

(#1 of #14)_ Takata air bags (http://read.bi/1GLoul9)

(#2 of #14)_ Mazda Recalls 100,000 Cars for Defect in Tire Pressure Sensor.

(#3 of #14)_ Toyota Gas Pedal

(#4 of #14)_ General Motors Co. ignition switches

(#5 of #14)_ GM alone has recalled nearly 27 million cars and trucks in the U.S. in 2014, a record for any single automaker. Defective GM ignition switches in small cars have been linked to at least 42 deaths and 58 injuries.

(#6 of #14)_ Honda Motor Co., the third-largest Japanese automaker, has recalled 5.4 million vehicles to replace Takata air bags.

(#7 of #14)_ Fiat Chrysler Automobiles NV said Dec. 19 that it would accede to an NHTSA request and expand an existing air-bag recall. That will add 2.89 million vehicles to the recall total for the U.S. when reflected in the government database.

(#8 of #14)_ “…It’s a landmark year; it’s the start of a new era,…” said Neil Steinkamp, a managing director at Stout Risius Ross who studies warranty and recall issues. With the focus on more and quicker recalls, 2014 will probably signify a period of elevated safety fixes, Steinkamp said. The average number of recalled vehicles per year from 2004 through last year was 16.1 million, according to NHTSA data.

(#9 of #14)_ Virgin Galactic Crash (http://on.wsj.com/1sO9FWK)

(#10 of #14)_ NASA’s Antares rocket explosion (http://ti.me/1tfXndg)

(#11 of #14)_ Even airliners weaponized and flown into skyscrapers and the Pentagon (http://bit.ly/JsvuKR)

(#12 of #14)_ Sony “Rogue State”-sponsored Cyberhack (http://nyti.ms/1wPlRLX)

(#13 of #14)_ Boston Marathon bombings (http://bit.ly/1m8zZqx)

(#14 of #14)_ Suzuki Motor to recall 453,000 mini vehicles in Japan (http://bit.ly/1Cr0Zev)

THE LONG LIST IS WITHIN THE WHITE SWAN BOOK AT: http://amzn.to/1AvY2tK

EXTREMELY SHORT LIST BY BART ENDS NOW.

Homer: Son, why are there so many tragedies?

Bart: Dad, mostly because DoD, NASA, Virgin Galactic, Sony, Toyota, Mitsubishi, Mazda, Honda, GM, Takata, and Fiat-Chrysler think that their corporate theaters of operations can be TOTALLY CLEAR AND FREE OF DISRUPTION POTENTIAL BY ONLY AND SOLELY APPLYING CONTINUOUS PROCESS IMPROVEMENT, QUALITY ASSURANCE, KAIZEN, THE TOYOTA PRODUCTION SYSTEM, PROCESS REENGINEERING, etc. In each corporate theater of operations within the global marketplace, every corporation is waging war to be the world’s marketplace #1, with the utter purpose of not falling into Chapter Seven (http://bit.ly/1AvSjnM), that is, outright bankruptcy.

Homer: Son, why does Apple manufacture iPhones in Japan with a U.S. name and domicile?

Bart: Dad, because, after all is said and done, Japanese and German manufacturing is the world’s least worst, except for the quality assurance used in manufacturing U.S., French, and Israeli weapons. “Weapons” is a fancy word for “…tools to carry on with applied politics through other means…!…”

Homer: Son, how do you make the case against the defects and shortcomings in Japanese approaches, not only to manufacturing but also to managing the corporate theater of operations from a Womb-to-Tomb stance?

Bart: Dad, I greatly value Japanese execs and sages, but they focus only on throughputting (•) the Known Inputs into Desirable Outputs inside their premises, without considering the Non-Existential and Existential Risks of the External Environment at large (outside their industrial façade), as we do in the White Swan’s Transformative and Integrative Risk Management Services.

(•) Throughputting is the ING-form of “throughput,” used here in the exact sense of the Latin Modus Operandi (MO). In this usage, to speak of the modus operandi of X is exactly identical to throughputting X.

Yes, they Kaizen within and beyond the Assembly Line, from there to Human Resources and many other administrative facilities and operations. However, in Transformative and Integrative Risk Management, we consider and implement, as a major sub-chapter, every possible and most up-to-date Quality Assurance and Continuous Improvement tool, most of the time to a “Shock and Awe” practical level, for the sake of sustainable corporate lucre.

Briefly, that is what I can add on this point.

Homer: Son, are you sure you are not with the Discreet and Secretive Scottish Rite?

Bart: No, thank you, Dad.

Homer: Son, what do Suzuki, General Electric, and Toyota have in common?

Bart: Dad, as for the downsides, Toyota is best known for the “…gas pedal…” error, built by “…throwing and throwing…” layers and layers of Knowledge Complexity (that is, heavy and undue involvement with the unknown Science of Complexity) onto a single sub-subsystem, the gas pedal. Many American and Canadian lives were lost in the process.

The scientific forensics, in order to be acceptable to the U.S. Congress, were performed and solved by NASA. Toyota could have resorted to Japan’s NASA, JAXA (Japan Aerospace Exploration Agency), but only to the despair of U.S. congressmen.

As for General Electric’s disadvantages, there is the massive disruption at the Fukushima Daiichi Nuclear Power Plant, which was jointly built and jointly managed by General Electric, Boise, and Tokyo Electric Power Company (TEPCO).

Now we have the recall by Suzuki. Suzuki Motor Corp., as per Reuters, on Thursday (September 19, 2014) issued a recall of 453,225 mini vehicles in Japan to fix a defect in the blower fan motor of the air-conditioning unit that has resulted in three fires so far.

I had vast experience with Kaizen and the Toyota Production System even before Andy became extremely knowledgeable about two of his most salient clients, Toyota Motors and Mitsubishi Motors.

FIRST. If you add complexity to your subsystems without fully knowing the upsides and downsides, the above will happen, at least to an important degree.

SECOND. These disruptions also happen when there is Low Morale, Low Morals with Flawed Ethics, or both. When there is SOLEMNITY in the corporate theater of operations, you immediately get a nice spinoff: High Morale, Optimal Morality, and Fundamental Ethics.

THIRD. In my personal opinion, Kaizen, the Toyota Production System (TPS), and Lean Six Sigma need to incorporate other problem-solving methodologies, of the kind favored by the Fortune-100 Victors of the West.

When Andy carefully, and in a most detailed and sequential fashion, proved to Toyota’s Chairman, CEO, and Production Director (Mr. Noda) that TPS was limited, constrained, and blind when it came to Managing Risks, both from within the Assembly Line and from outside the Factory Façade, they got furious and Mr. Noda started crying like a little child.

However, the Patrician Patriarch in the Chair stayed extremely calm and relaxed and issued a directive to contract Andy to implement his (a) Beyond-Kaizen Method and (b) Beyond-TPS Method, and rightly so, as every measurable outcome (numerical goals and narrative objectives) was not merely achieved but surpassed in the audited actuality.

Homer: Son, you really love to work harder than a workaholic, don’t you?

Bart: Dad, you are 10% right. In fact, I just carry on with my pastimes. However, seeing me from the outside, enjoying my wicked-problem-solving pastimes, clients and colleagues say that I look more like an indefatigable Extraterrestrial Tesla Device (http://bit.ly/1iLbOOo).

Homer: Son, what does Sony (Global) Corporation, operating across the totality of the Globe, know about Crisis Management (http://bit.ly/1JFFlYQ)?

Bart: Dad, exactly NOTHING! Nor does Sony know that, as it operates multinationally, it is entrenched in realpolitik, world geopolitics, world history, world geography, world culture, and ubiquitous world connectivity! SONY, LIKE TOYOTA, MITSUBISHI, SUZUKI, MAZDA, HONDA AND, AMONG MANY OTHERS, TOKIO MARINE (Insurance), KNOWS ZERO ABOUT EXTREMELY-HOLISTIC, BEYOND-INSURANCE RISK MANAGEMENT, EXACTLY AS PER THE DICTUMS OF WHITE SWAN “…TRANSFORMATIVE AND INTEGRATIVE RISK MANAGEMENT…”

Homer: Son, so I deduce from what you say that the Nippons believe, as a closed and proud society, that their management style is untouchable and infinitely better than any SERIOUS MANAGEMENT (PROBLEM-SOLVING) IN THE WEST? Please also tell me a bit about the famous Noda Story.

Bart: Dad, EXACTLY. They proudly think of themselves as best-in-class worldwide, and to some extent and in some areas, yes, they in fact are. ABOUT THE FACTUAL NODA STORY? Here is the real-life story, extremely summarized. I made a highly granular executive presentation to Toyota’s Board of Directors, including Mr. Noda (the Production Director). With the utmost minuteness, step by step, sub-step by sub-step, I outright proved to Toyota’s Chairman, CEO, Production Director, CFO, and others on the Board that using Kaizen to Manage Risks Holistically, as per the Western state-of-the-art understanding, was beyond ineffectual and inconsequential.

Of course, owing to extreme Japanese nationalism and single-mindedness, Mr. Noda assumed that I was too stupid to know anything substantive about Kaizen, the Toyota Production System, and the American Professors who taught them their “stuff” through long consultative years.

When I first knocked on the Toyota front-office door, I had spent twenty (20) years studying every advancement in business, management, and industry, clearly acknowledging every upside and every downside. In fact, I was first given a full-scope indoctrination in Japanese methodologies (ISBN: 978-0075543329 and ISBN: 978-0915299140) by Royal Dutch Shell.

Through Shell, I was also thoroughly trained in Mr. William E. Conway’s “… Right Way to Manage …” (ISBN: 978-0963146458). Through them, we studied quality assurance at the U.S. Navy, Los Alamos Lab, and Hitachi. Thereafter, a doctor of science taught me what Quality, Reliability, Safety, and Security meant for NASA. In a congruent, coherent, and cohesive way, you will find those and other proprietary items within the White Swan “Transformative and Integrative Risk Management.”

At Shell, as with Mr. Jiddu Krishnamurti, there is no foolish regionalism but frictionless globalization and globalization smartification, thus embracing any useful approach, regardless of geography, history, race, ethnicity, or anything else, AS LONG AS IT FURTHER UNDERPINS THE GLOBAL STRATEGIC BOTTOM LINE, PERIOD!

I was heavily researching not just the advancements of Toyota and others from Japan’s Corporate Miracle of the 1980s, but absolutely everything regarding countermeasures against any form of disruption (including its many synonyms), direct or indirect, both in the West and in the Far East.

As Japan was top-notch and nobody in the West was doing anything meritorious (as per Noda’s schema), he found it stupid, time-wasting, and unprofitable even to consider the methodologies, including those of NASA and way beyond, that I was ruthlessly researching, nation by nation, industry by industry. Ergo, as my amazing father and Napoleon Bonaparte stated, “… I only have one counsel for you: be a master …”, and I followed it, to the strategic surprise (Sputnik Moment) of Toyota, Noda, and Mitsubishi Motors.

Mr. Noda was extremely infuriated with me, but, in spite of him, the Chairman hired me and carried on with emotional evenness, that of a Wise and Sage Patriarch. Mr. Noda gave me a positive nickname that I will not release at this or any other time.

I will comment in due time on other considerations pertaining to Kaizen and its evolution way beyond it.

Homer: Son, who is Taiichi?

Bart: Dad, do you mean Mr. Taiichi Ohno?

Homer: Son, yes, tell me about him!

Bart: Dad, I will give you an info capsule. Taiichi Ohno (1912–1990) was a Japanese businessman and is considered to be the father of the Toyota Production System, which became Lean Manufacturing in the U.S. The book The Perfect Engine (ISBN: 978-0743203814) contains the following excerpt:

“ … When Taiichi Ohno visited the Ford Motor plant at River Rouge in the early fifties, he was truly humbled. Ford Motor’s quality and productivity were several times better than Toyota’s. The operational lead time, or total elapsed time for converting raw materials into a Model T, was only three days. Mr. Ohno worked diligently over the next twenty years to develop a version of Ford’s miracle that came to be known as the Toyota Production System …”

Homer: Son, being from the Far East, and besides Lean Manufacturing, do they observe and copy Sun Tzu’s Art of War?

Bart: Dad, no, they don’t. Remember, they are a tough small island nation that besieged and ruled over parts of China, a huge country, that is to say, China, Germany’s client number 1 worldwide. IN FACT, THEY OBSERVE AGILE AND LEAN MANUFACTURING AND THEIR OWN 1645 BOOK OF FIVE RINGS (HTTP://BIT.LY/1ZAI0PG), BY MEANS OF WHICH IT IS FORCEFULLY MANDATED TO «INVESTIGATE AFFAIRS THOROUGHLY THROUGH» PRACTICE RATHER THAN TRYING TO LEARN THEM BY MERELY READING.

Homer: Bart, that sounds very tough, doesn’t it?

Bart: Dad, in fact, it is harshly tough, without fail.

Homer: Bart, do they watch the waves or the currents underneath?

Bart: Dad, they watch the waves, and both the currents underneath and the undercurrents closer to the seabed!

Homer: Bart, can you please explain the differences between Currents and Undercurrents?

Bart: Dad, I was reading The Economist and a notion came to my mind.

We have all heard the Chinese adage:

“… Don’t look at the waves but the currents underneath …”

Currents are “…Dynamic Driving Forces…” that eject sequences of so-called “…Trends…”, while Undercurrents are Counter-“…Dynamic Driving Forces…” that eject sequences of so-called “…Counter-Trends…”

Given the onset and in-progress Disruptional Singularity (a term coined by the signatory at http://amzn.to/1wfx4At), and using the terms “current” and “undercurrent” as linguistic wildcards, we have several CONCURRENT Global Currents and Worldly Undercurrents going on around this Globe.

In extremely-holistic (beyond-insurance) risk management, we always know that small risks and medium-size risks and even large risks end up compounding together into devastation if we stay like innocent bystanders.

And as they compound, they make the Disruptional Singularity a reality.
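Bart’s point that small and medium-size risks compound can be made concrete with elementary probability. The sketch below is a minimal illustration, not a method taken from the White Swan book: it assumes the risks are independent and that each has a known yearly probability of materializing, and the ten 5% risks are hypothetical figures, not data from the source. The helper name `prob_at_least_one` is likewise hypothetical. It computes the chance that at least one risk strikes as one minus the product of the individual “nothing happens” probabilities.

```python
from math import prod


def prob_at_least_one(probabilities: list[float]) -> float:
    """Chance that at least one of several independent risks materializes.

    Assumes independence: P(none occurs) is the product of (1 - p_i),
    so P(at least one occurs) is 1 minus that product.
    """
    return 1 - prod(1 - p for p in probabilities)


# Hypothetical yearly probabilities: ten "small" risks of 5% each.
small_risks = [0.05] * 10
print(f"{prob_at_least_one(small_risks):.3f}")  # prints 0.401
```

Ten individually small 5% risks already give roughly a 40% chance that at least one materializes in a given year. If the risks are correlated, this independence formula no longer applies and a different model is needed.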

Considering Media and Political Agendas, which one is the “Current,” Ebola or ISIS?

Considering Media and Political Agendas, which one is the “Undercurrent,” ISIS or Ukraine?

And there are also Forefronts and Foregrounds, such as massive global sovereign indebtedness, nation-state-sponsored cyberattacks, and currency wars waged with reserve currencies by the Central Bankers of the world’s most important economies.

Proper jobs and good employment will never come back, as industrial investors prefer investing in bots and superautomation over humans. For the sake of their share values and dividends, they will frequently make pacts with Satan with the utter purpose of becoming even richer. You see, they need to donate, together with their wives, about 25 million to the fight against Ebola to underpin their own P.R. agendas.

We have our concentration GLUED to the Waves, and not the Currents, Undercurrents, Counter-Currents, and Counter-Undercurrents underneath!

Currents are Dynamic Driving Forces that reshape this as-of-now Present (Continuum) and near-term Future.

Undercurrents are Counter-“Dynamic Driving Forces” that reshape this as-of-now Present (Continuum) and near-term future.

And so on and on. Every Force has a Counter-Force.

In order not to get your mind brainwashed, socially engineered, or brain-controlled, you are going to have to REFLECT HARD AND SUBTLY and UNCONDITIONALLY AUDIT all those currents and counter-currents underneath.

Homer: Bart, are you sure you are not the ruling mastermind of the Priory of Sion (http://bit.ly/16Hlppe)? THEN, TO KAIZEN OR NOT TO KAIZEN, TO FUKUSHIMA OR NOT TO FUKUSHIMA?

Bart: Dad, again and again and again.

SHAKESPEARE: TO KAIZEN OR NOT TO KAIZEN, TO FUKUSHIMA OR NOT TO FUKUSHIMA?

I have worked extensively with Toyota and Mitsubishi Motors, as I have with many Western corporations of global scale, including Royal Dutch Shell and ExxonMobil. I also developed my own proprietary problem-solving methodology, called Transformative and Integrative Risk Management, to manage every risk, in advance and otherwise, from both the Internal Environment (even duly assuring Quality Control and Continuous Improvement along with womb-to-tomb Corporate Strategy) and the External Environment.

When it comes to eliminating operational waste and flaws in products and manufacturing processes, and then improving them endlessly, the accomplished Japanese are the Monarchy of the Galaxy. But while they apply heavy Quality Assurance to Nuclear Plants like Fukushima, they never preemptively think of the flaws and threats stemming from the external environment, including the external environment outside the façade of the plant, factory, or whatever installation.

It is as if the Japanese were always fixated on Henry Ford’s Assembly Line, ignoring the company’s other crucial assets, both tangible and intangible.

Under Transformative and Integrative Risk Management, every form of advanced Quality Assurance and Continuous Improvement is as CRUCIAL as any internal or external threat, regardless of whether it stems from the manufacturing process or NOT, including so-called Black-Swan events.

Japanese managers and engineers are hugely remarkable and respected people. However, I need to operate Womb-to-Tomb, from early on.

To better understand the idea put forward, you need to read the White Swan.

Homer: Bart, should I understand that Transformative and Integrative Risk Management has well-established operational sub-chapters for both Continuous Improvement and Quality Assurance?

Bart: Dad, yes, you are right. But the scale, magnitude, and granularity of detail incessantly considered by Transformative and Integrative Risk Management is mind-boggling for top Doctors in Rocket Science, and for you!

Homer: Bart, really? Why is that? Can you elaborate on a hugely ignored, ‘scientific knowledge’-laden common sense (not the outright-foolish so-called “common sense” of Thomas Paine) that is forgotten and ignored by the corporate theaters of operation within the Top-10 of the Fortune 500?

Bart: Dad, well, the corporate theaters of operation within the Top-10 of the Fortune 500 constantly and continuously ignore some extremely useful, for-lucre methods from Los Alamos National Laboratory (http://www.lanl.gov/) and Lawrence Livermore National Laboratory (https://www.llnl.gov/).

I will give you a concrete example. The savants at Procter & Gamble asked Los Alamos National Laboratory for a Process Engineering (Quality Assurance) Method, powered by the gray matter, genes, and synapses of Los Alamos National Laboratory scientists, but always dovetailed and customized to the needs, requirements, and specifications of Procter & Gamble and its rampantly victorious corporate theater of operations.

Homer: Bart, hugely interesting! What else? Is that your best?

Bart: Dad, no, no, that is not my best. Regarding all-encompassing quality assurance approaches, I have spoken of others whose standards are as high or even higher, which are also available in and observed by White Swan “Transformative and Integrative Risk Management,” such as those of the world-class Military Spheres of Influence.

TO THIS END, I COULD ELABORATE A LITTLE.

QUALITY ASSURANCE IN MILITARY AERONAUTIC INDUSTRIES! THIS IS A WORLDWIDE REAL-LIFE PRIORITIZED (TOP-DOWN) BRIEF LIST OF MILITARY ACHIEVEMENTS CONCERNING QUALITY ASSURANCE. ENSUING:

THE BEST Quality Assurance In Military Aeronautic Equipment Is That Of American Manufacturer(s).

THE SECOND Best Quality Assurance In Military Aeronautic Equipment Is That Of European Manufacturer(s).

THE THIRD Best Quality Assurance In Military Aeronautic Equipment Is That Of Israeli Manufacturer(s).

THE FOURTH Best Quality Assurance In Military Aeronautic Equipment Is That Of Russian Manufacturer(s).

THE FIFTH Best Quality Assurance In Military Aeronautic Equipment Is That Of Chinese Manufacturer(s).

THE SIXTH Best Quality Assurance In Military Aeronautic Equipment Is That Of Iranian Manufacturer(s).

NB_1: The writeup is based on evidence. Lack of information does not allow the inclusion of Japan and India and their immeasurable achievements.

NB_2: However: Remember That Dedicated People Learn Fast And Change (UPGRADE) To Warp Speed!

Homer: Bart, what else do the Nippons and the Fortune-500 Top-10s fail to comprehend as a hardcore scientific truism, while we are experiencing a light-speed, multi-eon age, way beyond millennials, exponential technologies, and other current societal, demographic, and economic imperatives?

Bart: Dad, that can be stated simply. I will tell you some things that Kaizen, Toyota Production Systems, Agile, Lean Manufacturing, Continuous Improvement, Juran’s Prescription, Six Sigma, Los Alamos’ Process Engineering, and many other approaches lack, without forgetting that applied Systems Reliability allowed America to upgrade its technological hotbeds when it made a quantum leap from Henry Ford’s knowledge base to moonshotting the Apollo Program into the Moon landing!

Homer: Bart, what are those considerations in the preceding paragraph that almost all Nippons and Fortune-500 Top-10s are failing to consider?

Bart: Dad, for instance, Paul Valéry (1932) wrote,

“… All the notions we thought solid, all the values of civilized life, all that made for stability in international relations, all that made for regularity in the economy … in a word, all that tended happily to limit the uncertainty of the morrow, all that gave nations and individuals some confidence in the morrow … all this seems badly compromised. I have consulted all augurs I could find, of every species, and I have heard only vague words, contradictory prophecies, curiously feeble assurances. Never has humanity combined so much power with so much disorder, so much anxiety with so many playthings, so much knowledge with so much uncertainty …”

AND:

Charles Dickens (1859) wrote,

“ …It was the best of times, it was the worst of times … it was the spring of hope, it was the winter of despair …”

AS WELL:

COMMENTARY BY BART: As per my evidence-based research and perpetual verification in real time, the only Singularity there will be is not the Technological Singularity but what I call the Disruptional Singularity. However, if every citizen of the world gets his or her act together as soon as possible, there is hope for a better world later on.

IF YOU THINK THIS IS FLAWED, SEE THE MASSIVE SECRET REPORTS OF THE N.I.C. (NATIONAL INTELLIGENCE COUNCIL, AT http://1.usa.gov/1gloStR) TO THE INCUMBENT OF THE WHITE HOUSE.

THIS TOO:

Prof. Michio Kaku, Ph.D. indicates:

“… By the end of the twentieth century, science had reached the end of an era, unlocking the secrets of the atom, unraveling the molecule of life, and creating the electronic computer. With these three fundamental discoveries, triggered by the quantum revolution, the DNA revolution, and the computer revolution, the basic laws of matter, life, and computation were, in the main, finally solved .… That epic phase of science is now drawing to a close; one era is ending and another is only beginning .… The next era of science promises to be an even deeper, more thoroughgoing, more penetrating one than the last .… Clearly, we are on the threshold of yet another revolution. HUMAN KNOWLEDGE IS DOUBLING EVERY TEN YEARS [AS PER THE 1998 STANDARDS]. In the past decade, more scientific knowledge has been created than in all of human history. COMPUTER POWER IS DOUBLING EVERY EIGHTEEN MONTHS. THE INTERNET IS DOUBLING EVERY YEAR. THE NUMBER OF DNA SEQUENCES WE CAN ANALYZE IS DOUBLING EVERY TWO YEARS. Almost daily, the headlines herald new advances in computers, telecommunications, biotechnology, and space exploration. In the wake of this technological upheaval, entire industries and lifestyles are being overturned, only to give rise to entirely new ones. But these rapid, bewildering changes are not just quantitative. They mark the birth pangs of a new era .… FROM NOW TO THE YEAR 2020, SCIENTISTS FORESEE AN EXPLOSION IN SCIENTIFIC ACTIVITY SUCH AS THE WORLD HAS NEVER SEEN BEFORE. IN TWO KEY TECHNOLOGIES, COMPUTER POWER AND THE DNA SEQUENCING, WE WILL SEE ENTIRE INDUSTRIES RISE AND FALL ON THE BASIS OF BREATHTAKING SCIENTIFIC ADVANCES. SINCE THE 1950S, THE POWER OF OUR COMPUTERS HAS ADVANCED BY A FACTOR OF ROUGHLY TEN BILLION. 
IN FACT, BECAUSE BOTH COMPUTER POWER AND DNA SEQUENCING DOUBLE ROUGHLY EVERY TWO YEARS, ONE CAN COMPUTE THE ROUGH TIME FRAME OVER WHICH MANY SCIENTIFIC BREAKTHROUGHS WILL TAKE PLACE .… BY 2020, MICROPROCESSORS WILL LIKELY BE AS CHEAP AND PLENTIFUL AS SCRAP PAPER, SCATTERED BY THE MILLIONS INTO THE ENVIRONMENT, ALLOWING US TO PLACE INTELLIGENT SYSTEMS EVERYWHERE. THIS WILL CHANGE EVERYTHING AROUND US, INCLUDING THE NATURE OF COMMERCE, THE WEALTH OF NATIONS, AND THE WAY WE COMMUNICATE, WORK, PLAY, AND LIVE…” [171]

AND AS WELL:

Nanotechnology and life by Ray Kurzweil (as of May 2009)!

“…Nanotechnology is a broad concept; it simply refers to technology where the key features are measured in a small number of nanometers. A NANOMETER IS THE DIAMETER OF FIVE CARBON ATOMS SO IT’S VERY CLOSE TO THE MOLECULAR LEVEL AND WE ALREADY HAVE NEW MATERIALS AND DEVICES THAT HAVE BEEN MANUFACTURED AT THE NANOSCALE. IN FACT, IN CHIPS TODAY, THE KEY FEATURES ARE 50 OR 60 NANOMETERS, SO THAT IS ALREADY NANOTECHNOLOGY. The true promise of nanotechnology is that ultimately we’ll be able to create devices that are manufactured at the molecular level by putting together molecular fragments in new combinations, so I can send you an information file and a desktop nanofactory will assemble molecules according to the definition in the file and create a physical object. So I can e-mail you a pair of trousers or a module to build housing or a solar panel, and WE’LL BE ABLE TO CREATE JUST ABOUT ANYTHING WE NEED IN THE PHYSICAL WORLD FROM INFORMATION FILES WITH VERY INEXPENSIVE INPUT MATERIALS. You can… I mean, just a few years ago if I wanted to send you a movie or a book or a recorded album, I would send you a FedEx package; now I can e-mail you an attachment and you can create a movie or a book from that. In the future, I’ll be able to e-mail you a blouse or a meal. So, that’s the promise of nanotechnology. Another promise is to be able to create devices that are the size of blood cells, and by the way biology is an example of nanotechnology: the key features of biology are at the molecular level. SO, THAT’S ACTUALLY THE EXISTENCE PROOF THAT NANOTECHNOLOGY IS FEASIBLE, BUT BIOLOGY IS BASED ON A LIMITED SET OF MATERIALS. EVERYTHING IS BUILT OUT OF PROTEINS AND THAT’S A LIMITED CLASS OF SUBSTANCES. WITH NANOTECHNOLOGY WE CAN CREATE THINGS THAT ARE FAR MORE DURABLE AND FAR MORE POWERFUL. 
One scientist designed a robotic red blood cell that is a thousand times more powerful than the biological version, so if you were to replace a portion of your biological red blood cells with these respirocytes, the robotic versions, you could do an Olympic sprint for 15 minutes without taking a breath or sit at the bottom of your pool for 4 hours. If I were to say someday you’ll have millions or even billions of these nanobots, nano-robots, blood-cell-size devices going through your body and keeping you healthy from inside, you might think, well, that sounds awfully futuristic. I’d point out there are already 50 experiments in animals doing exactly that with the first generation of nano-engineered blood-cell-size devices. One scientist cured type 1 diabetes in rats with a blood-cell-size device: seven-nanometer pores let insulin out in a controlled fashion. At MIT, there’s a blood-cell-size device that can detect and destroy cancer cells in the bloodstream. These are early experiments but KEEP IN MIND THAT BECAUSE OF THE EXPONENTIAL PROGRESSION OF THIS TECHNOLOGY, THESE TECHNOLOGIES WILL BE A BILLION TIMES MORE POWERFUL IN 25 YEARS AND YOU GET SOME IDEA WHAT WILL BE FEASIBLE …” [199]
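Both quotes above rest on doubling arithmetic: a quantity that doubles every T years grows by a factor of 2^(t/T) over t years, so the doubling time implied by a total factor F over t years is T = t / log2(F). The minimal Python sketch below checks the quoted figures against each other. The helper names are hypothetical, and the 50-year span assumed for Kaku’s “since the 1950s” is an illustrative assumption, not a figure from the quote.

```python
import math


def doubling_time(factor: float, years: float) -> float:
    """Years per doubling implied by growth of `factor` over `years`."""
    # factor = 2 ** (years / T)  =>  T = years / log2(factor)
    return years / math.log2(factor)


def growth_factor(doubling_years: float, years: float) -> float:
    """Total growth after `years` if the quantity doubles every `doubling_years`."""
    return 2 ** (years / doubling_years)


# Kaku: computer power up "by a factor of roughly ten billion" since the 1950s.
# Assumption (hypothetical): about 50 years elapsed.
kaku_T = doubling_time(1e10, 50)

# Kurzweil: "a billion times more powerful in 25 years".
kurzweil_T = doubling_time(1e9, 25)

# Kaku's "doubling every eighteen months", compounded over the same 25 years.
eighteen_month_factor = growth_factor(1.5, 25)

if __name__ == "__main__":
    print(f"Kaku, implied doubling time:     {kaku_T:.2f} years")
    print(f"Kurzweil, implied doubling time: {kurzweil_T:.2f} years")
    print(f"18-month doubling over 25 years: x{eighteen_month_factor:,.0f}")
```

Under that assumption, a ten-billionfold gain over 50 years works out to roughly 1.5 years per doubling, consistent with Kaku’s “eighteen months.” Kurzweil’s billionfold in 25 years implies a faster pace, a doubling roughly every 10 months, whereas eighteen-month doubling would yield only about a hundred-thousandfold over the same 25 years.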

Homer: Bart, do ISO standards suffice?

Bart: Dad, the provisions of the International Organization for Standardization (http://www.iso.org/iso/home.html) are a good starting point, but they are not sufficient for Google X, DARPA, NASA, plain-vanilla Google, Amazon and other ground-breaking organizations that frequently obsolete New Frontiers. Nonetheless, ISO standards are considered, but they do not intellectually castrate today’s winners-take-it-all!

Homer: Bart, what is updatedness? Does it affect Kaizen, TPS, Lean Manufacturing and the like?

Bart: Dad, your question demands that you memorize a Maxim for Life! Here it is:

“… Everything is somewhat related to everything else…” The “…somewhat…” is there to underline that, within the “…fabrics…” of the Cosmos’ Dark Matter and Dark Energy, everything is connected in discrete modes.

SO, YOU ASK HOW TO UNDERSTAND REAL-WORLD ZEITGEIST AND UPDATEDNESS, FLUIDLY?

TO THIS UTTER PURPOSE:

Austrian-American Peter Ferdinand Drucker (ISBN: 978–0060851149) strongly argues, as I have verified in my 33 years of continual evidence-based research, that people’s schemas (understandings of the world), belief systems, worldviews, most cherished notions and truisms, and Weltanschauung carry, on median and on average (as per 1990s standards), twenty (20) years of obsolescence per person.

The factual proof available to me, Bart, strongly indicates that such obsolescence has widened exponentially over time, as technological breakthroughs and scientific discoveries have become more prevalent and obvious.

Details to this end are found in the White Swan book.

WE NOW HAVE, FOR INSTANCE:

1.- A Russian Submarine in the sovereign waters of Sweden, along with some deep military provocations in other areas of the former Warsaw Pact. France is asking Germany for an emergency loan of 80 billion Euros.

2.- A great potential not just for an Ebola epidemic, but for a pandemic. Ebola is decimating some African regions.

3.- ISIS (the so-called Islamic State), with the potential to reach the Indian Ocean and Eurabia.

4.- Israel/Palestine Conflict.

5.- Ukraine’s loss of Crimea.

6.- Russia wanting to remind the world that it is a Galactic Superpower, not only in perilous Eastern Europe but in Europe in general and the World at large. Some reports argue that Russia will have a military presence in the Seven Seas, including military bases in the Arctic and the Antarctic. Remember?

7.- Cold War II.

8.- Arms Race II.

9.- A Space-Age War to Conquer Outer Space, with novel players such as India.

10.- The Global Existential Risk of the Weather and the Environment.

11.- The Universal Deflation of the Advanced Economies of the Planet.

12.- The Universal Surplus of Corrupted Politicians and Universal Lack of Employment.

13.- If we get lucky, we can also get into M.A.D. WWIII.

14.- And so forth.

N.B.: Dad, if you think that the geopolitical tensions in the South China Sea do not affect the business operations of for-lucre multinationals around the world, I must tell you that any corporate warriors who think so, Fortune 500, Sony Corporation or otherwise, have ludicrously planned and executed Strategies and Strategizing.

Then, Dad, the right question is, How can we fundamentally Kaizen the thirteen items above?

Homer: Bart, all of what you tell me sounds most complicated, son!

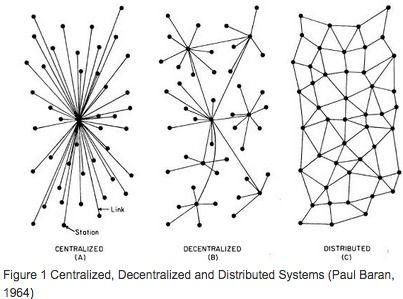

Bart: Dad, it is not complicated, but most complex. I will try to explain why with a message to all MANAGERS, regardless of their Professional Lines of Practice and the Core Business of their respective firms.

CAMBRIDGE UNIVERSITY’S SIR MARTIN REES, PH.D. (BRITISH ASTRONOMER ROYAL), FORCEFULLY SUGGESTS THAT MANAGERS BECOME PRACTICALLY AND PRAGMATICALLY FLUENT AND COGNIZANT IN THE “…SCIENCE OF COMPLEXITY…” (ISBN: 978–0671872342) AND, TO FURTHER ILLUSTRATE THE CHALLENGES, OPPORTUNITIES, BENEFITS AND OTHER DEVELOPMENTS, HE WROTE:

“… Science is emphatically not, as some have claimed, approaching its end; it is surging ahead at an accelerating rate. We are still flummoxed about the bedrock nature of physical reality, and the complexities of life, the brain, and the cosmos. New discoveries, illuminating all these mysteries, will engender benign applications; but will also pose NEW ETHICAL DILEMMAS AND BRING NEW HAZARDS …. BUT THERE IS A DARK SIDE: NEW SCIENCE CAN HAVE UNINTENDED CONSEQUENCES; IT EMPOWERS INDIVIDUALS TO PERPETRATE ACTS OF MEGATERROR; EVEN INNOCENT ERRORS COULD BE CATASTROPHIC. THE ‘DOWNSIDE’ FROM TWENTY-FIRST CENTURY TECHNOLOGY COULD BE GRAVER AND MORE INTRACTABLE THAN THE THREAT OF NUCLEAR DEVASTATION THAT WE HAVE FACED FOR DECADES. AND HUMAN-INDUCED PRESSURES ON THE GLOBAL ENVIRONMENT MAY ENGENDER HIGHER RISKS THAN THE AGE-OLD HAZARDS OF EARTHQUAKES, ERUPTIONS, AND ASTEROID IMPACTS …”

Homer: Bart, it seems to me that the External Environment has been immeasurably underestimated by these Kaizen-centric and ‘Six Sigma’-focused corporations. It is now clear to me that the external threats are not abstract, but formidably dangerous to the totality of “…business as usual…”, which is run AS IF for-lucre affairs were carried on only within the indoors of the Internal Environment.

So, Bart, you have upped White Swan “…Transformative and Integrative Risk Management…” (http://amzn.to/1wUhrDq) so much that it supersedes and rules, by a nonlinear order of explosive magnitude, functions such as Systems Quality Assurance, Systems Reliability, Systems Safety and Systems Security, among other amenities that Andy keeps as his own Fort Knox proprietary secret.

I CAN SEE THAT “…TRANSFORMATIVE AND INTEGRATIVE RISK MANAGEMENT…” (PROBLEM-SOLVING METHODOLOGY) HAS TAKEN BALKANIZED FUNCTIONS, REFINED, UPDATED AND UPGRADED THEM, AND BROUGHT THEM INTO A SEAMLESS MONOLITHIC INTEGRATION, ACTING AS THE MILKY WAY’S BERLUSCONI TO GUARANTEE SUSTAINED BUSINESS SUCCESS. HAVING ACKNOWLEDGED THAT, DOES “…TRANSFORMATIVE AND INTEGRATIVE RISK MANAGEMENT…” TAKE OVER OTHER FUNCTIONS?

Bart: Dad, I see that you are beginning to understand. “…TRANSFORMATIVE AND INTEGRATIVE RISK MANAGEMENT…” also takes charge, from Alpha to Omega, of the entire planned and executed Corporate Strategy, directly reporting to the CEO, Chairman, and Board of Directors.

Homer: Bart, what type of C-level executives are we going to need to institute the preceding?

Bart: Dad, Infinitely beyond the Black-Belt Senseis.

IN ALL SPECIFICITY, SEE THE FOLLOWING:

In any management undertaking today, and given the universal volatility and rampant and uninterrupted rate of change, one must think and operate in a fluid womb-to-tomb mode.

The manager must think and operate holistically, both systematically and systemically, at all times.

In the twenty-first century, what must top managers be in order to succeed?

The manager must also be: i) Multidimensional, ii) Interdisciplinary, iii) Cross-disciplinary (or Interdisciplinary), iv) Multifaceted, v) Cross-functional, vi) Multifarious, vii) Multi-specializing, viii) Multimodal, ix) Cross-Referential, x) Multitasking, xi) Cross-pollinating, xii) Cross-fertilizing, xiii) Cross-sectional and xiv) Longitudinal.

THAT IS, THE MANAGER MUST NOW BE AN EXPERT STATE-OF-THE-ART IN-ALL-PRACTICALITY (A) GENERALIST AND (B) ERUDITE.

An expert state-of-the-art in-all-practicality generalist and erudite INCLUDES, as Mr. Roger Brown, CEO of SAIC (Science Applications International Corporation), observed of my notion in 1999:

(I.-) A Knowledgist,

(II.-) A Champion, and

(III.-) A Braingainer.

ALSO:

THIS EXPERT STATE-OF-THE-ART IN-ALL-PRACTICALITY GENERALIST AND ERUDITE MUST ALSO CONJOINTLY INCLUDE A STRONG BONDING TO:

(A.-) Science,

(B.-) Punditry,

(C.-) Nerdiness,

(D.-) Wizardry (“… Any sufficiently advanced technology is indistinguishable from magic…”, Arthur C. Clarke).

ERGO, THIS IS THE NEWEST SPECIALIST AND SPECIALIZATION.

Homer: What is therefore your own definition for Kaizen, Bart?

Bart: Dad, this is it:

“… Kaizen, Japanese for “improvement” or “change for the best”, refers to philosophy or practices that focus upon continuous improvement of processes in manufacturing, engineering, business management or any process. It has been applied in healthcare, [.…] psychotherapy, [.…] life-coaching, government, banking, and other industries. When used in the business sense and applied to the workplace, kaizen refers to activities that continually improve all functions, and involves all employees from the CEO to the assembly line workers. It also applies to processes, such as purchasing and logistics, that cross organizational boundaries into the supply chain. [.…] By improving standardized activities and processes, kaizen aims to eliminate waste (see lean manufacturing). Kaizen was first implemented in several Japanese businesses after the Second World War, influenced in part by American business and quality management teachers who visited the country. It has since spread throughout the world [.…] and is now being implemented in environments outside of business and productivity …”

Homer: And how is Six Sigma defined, Bart?

Bart: Dad, this is it:

“ … Six Sigma is a set of techniques and tools for process improvement. It was developed by Motorola in 1986, coinciding with the Japanese asset price bubble which is reflected in its terminology [.…] Jack Welch made it central to his business strategy at General Electric in 1995. Today, it is used in many industrial sectors …. Six Sigma seeks to improve the quality of process outputs by identifying and removing the causes of defects (errors) and minimizing variability in manufacturing and business processes. It uses a set of quality management methods, including statistical methods, and creates a special infrastructure of people within the organization (“Champions”, “Black Belts”, “Green Belts”, “Yellow Belts”, etc.) who are experts in these methods. Each Six Sigma project carried out within an organization follows a defined sequence of steps and has quantified value targets, for example: reduce process cycle time, reduce pollution, reduce costs, increase customer satisfaction, and increase profits. These are also core to principles of Total Quality Management (TQM) as described by Peter Drucker and Tom Peters (particularly in his book “In Search of Excellence” in which he refers to the Motorola six sigma principles) …”

Homer: Why does Leadership Specialist and Harvard University Prof. Barbara Kellerman, PhD, author of The End of Leadership (ISBN: 978–0062069160), speak so strongly against leadership, Bart?

Bart: Dad, while in a society there is Universal Drunk Sodom and Universal Cocained Gomorrah, you will never have REAL MORALITY, by any rational measure. AND IF YOU DO NOT HAVE OPTIMAL MORALITY IN PLACE, THERE ARE TWO THINGS THAT YOU WILL NEVER HAVE.

FIRST, YOU WILL NEVER HAVE AN OPTIMAL AND FUNCTIONAL ETHICAL STANDING TO CONDUCT BUSINESS CORRECTLY AND IN A FISCALLY SOUND MANNER.

SECOND, NEITHER MANAGERS, NOR EMPLOYEES, NOR THEIR RESPECTIVE ORGANIZATIONS WILL EVER ACHIEVE THE NECESSARY FUNCTIONAL OUTCOMES TO NURTURE AND SUSTAIN THE FINANCIALS OF THE ORGANIZATION IN QUESTION.

“FIRST” AND “SECOND” ARE ALL OVER THE PLACE GLOBALLY, IN THE PRIVATE SECTOR, THE PUBLIC SECTOR AND THE NGO SECTOR.

Homer: So, to the best of their abilities, how wide an angle does the Grand View of BlackBelts cover in tackling real-world operational and non-operational problems, Bart?

Bart: Dad, my evidence-based research shows a mean of about 270 degrees among top-performing BlackBelts.

Homer: Having said that, Bart, what do those top-performing BlackBelts need to recover the missing, remaining 90 degrees?

Bart: Dad, what it takes is to understand and prepare for the following excerpt from the forthcoming White Swan book. Please see it here!

IT LITERALLY GOES LIKE THIS:

1.-) “…HUMAN KNOWLEDGE IS DOUBLING EVERY TEN YEARS [AS PER THE 1998 STANDARDS]…” [226]

2.-) “…COMPUTER POWER IS DOUBLING EVERY EIGHTEEN MONTHS. THE INTERNET IS DOUBLING EVERY YEAR. THE NUMBER OF DNA SEQUENCES WE CAN ANALYZE IS DOUBLING EVERY TWO YEARS…” [226]

3.-) “… BEGINNING WITH THE AMOUNT OF KNOWLEDGE IN THE KNOWN WORLD AT THE TIME OF CHRIST, STUDIES HAVE ESTIMATED THAT THE FIRST DOUBLING OF THAT KNOWLEDGE TOOK PLACE ABOUT 1700 A.D. THE SECOND DOUBLING OCCURRED AROUND THE YEAR 1900. IT IS ESTIMATED TODAY THAT THE WORLD’S KNOWLEDGE BASE WILL DOUBLE AGAIN BY 2010 AND AGAIN AFTER THAT BY 2013 …” [226]

4.-) “…KNOWLEDGE IS DOUBLING BY EVERY FOURTEEN MONTHS…” [226]

5.-) “…MORE THAN THE DOUBLING OF COMPUTATIONAL POWER [IS TAKING PLACE] EVERY YEAR…” [226]

6.-) “…The flattening of the world is going to be hugely disruptive to both traditional and developed societies. The weak will fall further behind faster. The traditional will feel the force of modernization much more profoundly. The new will get turned into old quicker. The developed will be challenged by the underdeveloped much more profoundly. I worry, because so much political stability is built on economic stability, and economic stability is not going to be a feature of the flat world. Add it all up and you can see that the disruptions are going to come faster and harder. No one is immune: not me, not you, not Microsoft. WE ARE ENTERING AN ERA OF CREATIVE DESTRUCTION ON STEROIDS. Dealing with flatism is going to be a challenge of a whole new dimension even if your country has a strategy. But if you don’t have a strategy at all, well, again, you’ve been warned…” [226]

END OF THE INFORMATION CITED BY BART.
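Item 3 gives dates for successive doublings of knowledge, so the interval between doublings and the implied average annual growth rate, r = 2^(1/Δt) − 1, follow directly. The Python sketch below does that arithmetic as a rough consistency check. Treating “the time of Christ” as year 1 is an assumption made only so the first interval has a start date, and the helper names are hypothetical; 2013 is the quote’s own forecast, not a measurement.

```python
# Estimated knowledge-doubling dates quoted in item 3.
# Assumption (hypothetical): "the time of Christ" is taken as year 1.
DOUBLING_YEARS = [1, 1700, 1900, 2010, 2013]


def annual_rate(interval_years: float) -> float:
    """Average yearly growth rate that doubles a quantity in `interval_years`."""
    return 2 ** (1 / interval_years) - 1


def doubling_intervals(years: list[int]) -> list[tuple[int, int, int]]:
    """(start, end, length) for each consecutive pair of doubling dates."""
    return [(a, b, b - a) for a, b in zip(years, years[1:])]


if __name__ == "__main__":
    for start, end, gap in doubling_intervals(DOUBLING_YEARS):
        print(f"{start:>4} -> {end}: {gap:>4} years, ~{annual_rate(gap):.2%} per year")
    # Item 4's "every fourteen months", expressed the same way.
    print(f"14-month doubling: ~{annual_rate(14 / 12):.1%} per year")
```

The intervals shrink from about 1,700 years to 200, 110 and finally 3 years, that is, from roughly 0.04% a year to about 26% a year; the fourteen-month figure in item 4 corresponds to about 81% a year. These are only arithmetic consequences of the quoted estimates, not independent measurements.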

Homer: Don’t you find the solutions to this age esoteric, Bart?

Bart: Dad, no, and it is funny that you mention it. I once met a Scots executive who told me that the beyond-insurance risk management methodology of DARPA (Defense Advanced Research Projects Agency), NASA, Boeing, Lockheed Martin (L.M.’s motto: “… What’s impossible today won’t be tomorrow …”) and the Royal Dutch/Shell Group, which have practiced the Systems Approach with the Non-Theological Applied Omniscience Problem-Solving Method since the late 1970s, was “…esoteric….” I still treasure his aesthetic e-mail.

TO THE DISMAY AND DISCOMFORT OF SAID SCOTS EXECUTIVE, LET US SEE THE FOLLOWING:

Most-Honorable Mr. Willy Brandt (18 December 1913 – 8 October 1992), a German politician and Chancellor of West Germany:

“…Those who adhere to the past won’t be able to cope with the future…”

Homer: Bart, can you tell me one (1) thing BlackBelts and Senseis are missing in full-scope Quality Assurance and Continuous Improvement in the twenty-first century?

Bart: Dad, certainly! I will give you six (6) examples below. In each example, BlackBelts and Senseis have to do SOMETHING EXTRA, way beyond out-of-the-box foolishness.

BY WAY OF EXAMPLE:

If you are into the Toyota Production System and you are the Sony Corporation, you ALSO need to know a lot of Miyamoto Musashi’s The Book of Five Rings (ISBN: 978–1935785972).

If you are into Continuous Process Improvement and you are General Electric, you ALSO need to know a lot of Niccolo Machiavelli’s The Prince (ISBN-13: 978–0226500447).

If you are into Juran’s Prescription and you are Jack Ma’s (http://bit.ly/1Ds2Mla) Alibaba Group (http://bit.ly/1uzDRIv), you ALSO need to know a lot of Sun Tzu’s The Art Of War (ISBN: 978–1454911869).

If you are into Six Sigma and you are Motorola, you ALSO need to know a lot of Stefan Zweig’s Joseph Fouché: Portrait of a Politician (ISBN: 978–1165614356).

If you are into Kaizen and you are Bayerische Motoren Werke AG (BMW) or Mercedes-Benz, you ALSO need to know a lot of Frederick the Great on the Art of War (ISBN: 978–0306809088).

If you are into Lean Manufacturing and you are DARPA or NASA, you ALSO need to know a lot of Napoleon’s Art of War (ISBN: 978–1566196956).

Homer: Bart, Really? I did not know that!

Bart: Dad, they are not, and never will be, as open as I am. In the final analysis, they will be instituting the Extra Miles above.

Homer: Son, How did you get to learn about the subject matter here?

Bart: Dad, the problem with you is that you forget it all. When I was 25, I was fully introduced to and indoctrinated in worldwide Royal Dutch Shell’s scenario-planning methodology by Maraven, Shell’s nationalized company. That introduction and indoctrination came through the kind deeds and executive decision of Maraven President Dr. Carlos Castillo and the high-ranking strategic planners under his command. Maraven’s view and application of scenario-planning methodology, as well as my training, included all the theoretical and practical quality-assurance and continuous-improvement prescriptions of Dr. W. Edwards Deming, Dr. Joseph Juran, Mr. Bill Conway, the U.S. Navy, Kaizen and Hitachi. With serious quality assurance and continuous improvement, one must exercise (as in scenario planning) great foresight and learning in advance in order to eliminate forthcoming “defects” or “flaws,” while leaving leeway to counter unknowables. All of my Maraven training included all of the preceding. Maraven was a full-scope petroleum company created and acculturated by Shell and later placed under the absolute tutelage and management control of state-owned PDVSA (Citgo’s parent company). Maraven was fluently applying scenario-planning methodology exactly as it was conceived, designed and practiced by Pierre Wack, an unconventional French oil executive who was the first to develop the use of scenario planning in the private sector, at Royal Dutch Shell’s London headquarters in the 1970s. Wack is the father of scenario planning in the private sector. For more details on this noted Guru, go to the Economist at http://www.economist.com/node/12000502 .

Homer: Son, where is the repository of this Frenchman’s work?

Bart: Dad, Wack’s public writings are meager and remain under Shell’s intellectual copyright. Wack’s methodological heir was the Honorable Mr. Peter Schwartz, a former Shell executive, the former Chairman of Global Business Network (www.gbn.com) and author of the groundbreaking book on scenario planning: “…The Art of the Long View: Planning for the Future in an Uncertain World…” (ISBN-13: 978–1863160995). Wack and Schwartz are the greatest proponents of 3-scenario analysis. In my case, I institute “…hazard-scenario planning…”, and the number of plausible and implausible “outlooks” (scenarios) I output is limited only by the budget designated by the Client. Let me explain what “output” means in Systems Engineering. You have the Systems Transformational “Box,” throughputting (marshaling) “…known inputs…” into “…desirable outputs…” (outcomes). BUT, FOR A LONG TIME, UNTIL 1999, I HAD A NAGGING QUESTION: WHERE DOES THE EXACT AND MOST UNDERLYING GENESIS OF SCENARIO PLANNING REALLY COME FROM? When I study contemporary authors, I always wonder if I can find the actual “root” philosopher, scientist or thought leader who first brought about an idea, notion, maxim, theory or approach. For instance, great American self-help author Napoleon Hill got his first name after Napoleon Bonaparte and his success tenets from him. I research the works of the originals first, and then I might check out the works of the contemporary versions afterwards.

Homer: Son, and what about the U.S. Military-Industrial Complex’s involvement with this Dr. Strangelove thinking thing?

Bart: Dad, COMING BACK TO THE MAIN SUBJECT MATTER, I finally found that scenario planning was developed in the U.S. during the 1950s, as great existential challenges threatened the country’s National Security doctrine as a direct result of the Cold War (1947–1991). In consequence, I knew the exact, primordial, in-all-truth root and understood that DoD (1947–present), DARPA (1958–present), NASA (1958–present), the Military-Industrial Complex (1961–present) and other agencies and private contractors (including Skunkworks ones) were the founding fathers of advanced scenario-planning methodology. Many scientists and engineers worked extremely hard at it, including NASA’s Dr. Wernher von Braun (http://en.wikipedia.org/wiki/Wernher_von_Braun) and the plethora of elite scientists collaborating with him. But the gravest inflection point came with the Sputnik Crisis (also known as the “Sputnik Moment”). From this point onward, one finds the salient research of RAND Corporation’s polymath futurist Herman Kahn (February 15, 1922 – July 7, 1983). To give you an idea of Kahn’s intellectual mind-set, please check these clear-eyed assertions by him: “…I’m against ignorance…I am against the whole cliché of the moment…I’m against fashionable thinking…I’m against sloppy, emotional thinking…” (http://www.brainyquote.com/quotes/authors/h/herman_kahn.html). United States Secretary of Defense Donald Rumsfeld argued, “…Reports that say that something hasn’t happened are always interesting to me, because as we know, there are known knowns; there are things we know that we know. There are known unknowns; that is to say, there are things that we now know we don’t know. 
But there are also unknown unknowns – there are things we do not know we don’t know…” But many years before Rumsfeld’s “… unknown unknowns…”, Kahn was the ultimate pioneer in confronting “…the unthinkable…” This work by Kahn is one of the pillars of Transformative and Integrative Risk Management and The Future of Scientific Management, Today! He offered both a theoretical body and a practical mode of approaching it. The most important institutions of the world, for-profit or not, are engaged in stern discernment of the “unthinkable,” beginning with those of us in advanced beyond-insurance risk management and strategy. Wikipedia’s article (http://en.wikipedia.org/wiki/Herman_Kahn) on the “…unthinkable…” polymath futurist is extremely educational. An excerpt of this article indicates, “…Herman Kahn was a founder of the Hudson Institute and one of the preeminent futurists of the latter part of the twentieth century. He originally came to prominence as a military strategist and systems theorist while employed at the RAND Corporation. He became known for analyzing the likely consequences of nuclear war and recommending ways to improve survivability, making him an inspiration for the title character of Stanley Kubrick’s classic black comedy film satire Dr. Strangelove. His theories contributed to the development of the nuclear strategy of the United States.…Kahn’s major contributions were the several strategies he developed during the Cold War to contemplate ‘the unthinkable’ – namely, nuclear warfare – by using applications of game theory. (Most notably, Kahn is often cited as the father of scenario planning.) During the mid-1950s, the Eisenhower administration’s prevailing nuclear strategy had been one of ‘massive retaliation’, enunciated by Secretary of State John Foster Dulles. 
According to this theory, dubbed the ‘New Look’, the Soviet Army was considerably larger than that of the United States and therefore presented a potential security threat in too many locations for the Americans to counter effectively at once. Consequently, the United States had no choice but to proclaim that its response to any Soviet aggression anywhere would be a nuclear attack…Kahn considered this theory untenable because it was crude and potentially destabilizing. He argued that New-Look theory invited nuclear attack by providing the Soviet Union with an incentive to precede any conventional localized military action somewhere in the world with a nuclear attack on U.S. bomber bases, thereby eliminating the Americans’ nuclear threat immediately and forcing the United States into the land war it sought to avoid.…In 1960, as Cold War tensions were near their peak following the Sputnik crisis and amidst talk of a widening ‘missile gap’ between the United States and the Soviet Union, Kahn published On Thermonuclear War, the title of which clearly alluded to On War, the classic 19th-century treatise by the German military strategist Carl von Clausewitz.…Kahn rested his theory upon two premises, one obvious, one highly controversial. First, nuclear war was obviously feasible, since the United States and the Soviet Union currently had massive nuclear arsenals aimed at each other. Second, like any other war, it was winnable.…Whether hundreds of millions died or ‘merely’ a few major cities were destroyed, Kahn argued, life would go on – as it had, for instance, after the Black Death in Europe during the 14th century, or in Japan after the limited nuclear attack in 1945 – contrary to the conventional, prevailing doomsday scenarios. Various outcomes might be far more horrible than anything hitherto witnessed or imagined, but some of them nonetheless could be far worse than others. 
No matter how calamitous the devastation, Kahn argued that the survivors ultimately would not ‘envy the dead’ and to believe otherwise would mean that deterrence was unnecessary in the first place. If Americans were unwilling to accept the consequences, no matter how horrifying, of a nuclear exchange, then they certainly had no business proclaiming their willingness to attack. Without an unfettered, un-ambivalent willingness to ‘push the button’, the entire array of preparations and military deployments was merely an elaborate bluff.…The bases of his work were systems theory and game theory as applied to economics and military strategy. Kahn argued that for deterrence to succeed, the Soviet Union had to be convinced that the United States had second-strike capability in order to leave the Politburo in no doubt that even a perfectly-coordinated massive attack would guarantee a measure of retaliation that would leave them devastated as well: «…At the minimum, an adequate deterrent for the United States must provide an objective basis for a Soviet calculation that would persuade them that, no matter how skillful or ingenious they were, an attack on the United States would lead to a very high risk if not certainty of large-scale destruction to Soviet civil society and military forces…».…Superficially, this reasoning resembles the older doctrine of Mutual Assured Destruction (MAD) due to John von Neumann, although Kahn was one of its vocal critics. Strong conventional forces were also a key element in Kahn’s strategic thinking, for he argued that the tension generated by relatively minor flashpoints worldwide could be dissipated without resort to the nuclear option…”

Homer: Son, do you have any closing remarks about the preceding?

Bart: You bet, Dad. Given all of the foregoing, and to close the totality of this dialogue with you, Dad, I understand and submit the following:

1.- Scenario planning, also called scenario thinking or scenario analysis, is a strategic planning method that some organizations use to make flexible long-term plans. It is largely an adaptation and generalization of classic methods used by military intelligence, government agencies and entities, NGOs, business enterprises and supranationals. Nonetheless, scenario planning as practiced by Wack and Kahn, however breathtaking, does not suffice on its own in contemporary times.

2.- Along with item "1.-", there is also the concomitant application of 2.1.- Systems Theory, 2.2.- Game Theory, 2.3.- Wargaming Theory and 2.4.- many other modes of practical strategic thinking and strategic execution. For example, Game Theory and Wargaming Theory (both under the Systems Thinking Approach) are extremely well addressed by Dr. Bruce Bueno de Mesquita. You may wish to explore his book "The Predictioneer's Game: Using the Logic of Brazen Self-Interest to See and Shape the Future" (ISBN-13: 978-0812979770). To me, Napoleon Bonaparte is the greatest Systems Thinker ever, well beyond any single military campaign. If you are hesitant about this, please ask systems engineers, physicists, other scholars and managers, as well as prominent historians. In my estimation, 80% of Bonaparte's teachings are taught in all U.S. military academies. In my own experience, the writings of NASA-beloved Leonardo da Vinci can be an over-learning device for the prepared mind.

3.- With "1.-" and "2.-" in place, there is also the application of Compound Forecasting, without ruling out large-scale computation and the transformation of both narrative and numerical data. For many reasons, I use 70% Qualitative Analytics and 30% Quantitative Analytics. Algorithms don't outsmart the biological brain yet. In 2000, before the dawn of Strong Big Data, the Science Applications International Corporation (SAIC) CEO, Mr. Roger Brown, and I experimented with obtaining an optimal computerized reading of the "success likelihood" of a major business initiative to be jointly launched by our respective interests. This was another way of "futuring," and it proved exceedingly interesting.

4.- In addition to everything posited in "1.-", "2.-" and "3.-", the concurrent implementation of other practical methodologies from 4.1.- Futures Studies and 4.2.- Foresight Research is also highly advisable.

5.- All of the numbered items above must be seamlessly integrated and instituted, through a rogue yet subtle search and recursive exploration of the totality of the whole (by holistic means). This must follow Systems Thinking with the Systemic and Systematic Applied Omniscience Perspective, as understood by both applied engineers and physicists. All stakeholders should institute all of these approaches in order to avoid disruption potentials. One of the world's best and most authoritative examples is NASA. For decades, NASA has been developing beyond-insurance risk management technologies and services for its own initiatives, projects and missions, as well as for august global corporations. In the summer of 2013, a globally operating American oil-and-gas group asked NASA to provide it with a "Space-Age Risk Management" service, as made official in a NASA press release at http://www.nasa.gov/centers/johnson/news/releases/2013/J13-014.html

Kindly remember that Risk Management is neither taking calculated risks on Wall Street nor managing "challenges" through insurance and reinsurance companies. Beyond-Insurance Risk Management is, in actuality, OPTIMAL MANAGEMENT PER SE, used to greatly exploit upsides and downsides before they happen. Beyond-Insurance Risk Management, or "…Transformative and Integrative Risk Management…", is by far much more than Beyond-"…Sarbanes–Oxley Act…" Risk Management. You see, "reinsurance" is a fancy term for "…insurance purchased by and for insurance companies…" If any insurance company, tiny or gargantuan, instituted Transformative and Integrative Risk Management, it would not need to buy any reinsurance "protection" or so-called "cover" at all.

6.- Many leading government agencies and entities, NGOs and global corporations have for many years now practiced everything above in parallel (simultaneously). This is universal practice among agencies and business enterprises in Aerospace and Defense. Institutions I have worked with that practice avant-garde "scenario planning" and Transformative and Integrative Risk Management include: Toyota, Mitsubishi, World Bank, Shell, Statoil, Total, Exxon, Mobil, PDVSA/Citgo, GE, GMAC, TNT Express, GTE, Amoco, BP, Abbott Laboratories, World Health Organization, Ernst & Young Consulting, SAIC (Science Applications International Corporation), Pak Mail, Wilpro Energy Services, Phillips Petroleum Company, DuPont, Conoco, ENI (Italy's state-owned petroleum firm), Chevron, and LDG Management (HCC Benefits).

7.- Many professional futurists, other scientists and entrepreneurs have formidable notions and ideas but lack direct experience in managing a dangerous corporate theater of operations (framework). To find out more about the relentless Scenario-Planning Methodology and Transformative and Integrative Risk Management, you are most welcome to consider the interview at http://lnkd.in/bYP2nDC (republished by the Lifeboat Foundation and Forward Metrics).

Homer: Son, a rhetorical question: how do you manage to be the top brain behind the Bilderberg Group (http://en.wikipedia.org/wiki/Bilderberg_Group )?

Homer: Bart, what else do I not know?

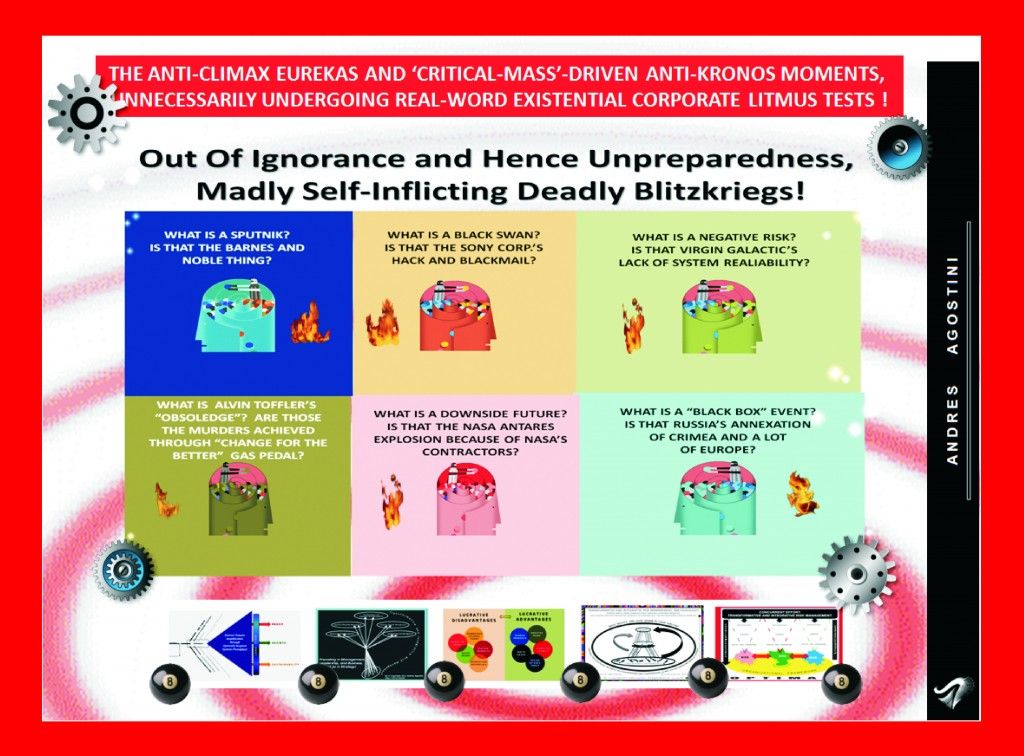

Bart: Dad, that those who directly and systematically apply Kaizen, the Toyota Production System, Lean Manufacturing, Agile, Extreme Project Management, Six Sigma, Process Engineering and the like, as well as cradle-to-grave strategists and so-called futurists, are blinded, powerless and intellectually castrated when dealing with all of the following:

A.- Black Swans,

B.- Gray Swans,

C.- Sputnik Moments,

D.- Negative Risks (the opposite of Positive Risks),

E.- Downside Futures, and

F.- Black-Box Events. PERIOD!

ABSOLUTE END.

Authored by and copyright of Mr. Andres Agostini

White Swan Book Author (Source of this Article)

http://www.LINKEDIN.com/in/andresagostini

http://www.AMAZON.com/author/agostini

http://LIFEBOAT.com/ex/bios.andres.agostini

https://www.FACEBOOK.com/agostiniandres

http://www.appearoo.com/aagostini

http://connect.FORWARDMETRICS.com/profile/1649/Andres-Agostini.html

https://www.FACEBOOK.com/amazonauthor

@AndresAgostini

@ThisSuccess

@SciCzar