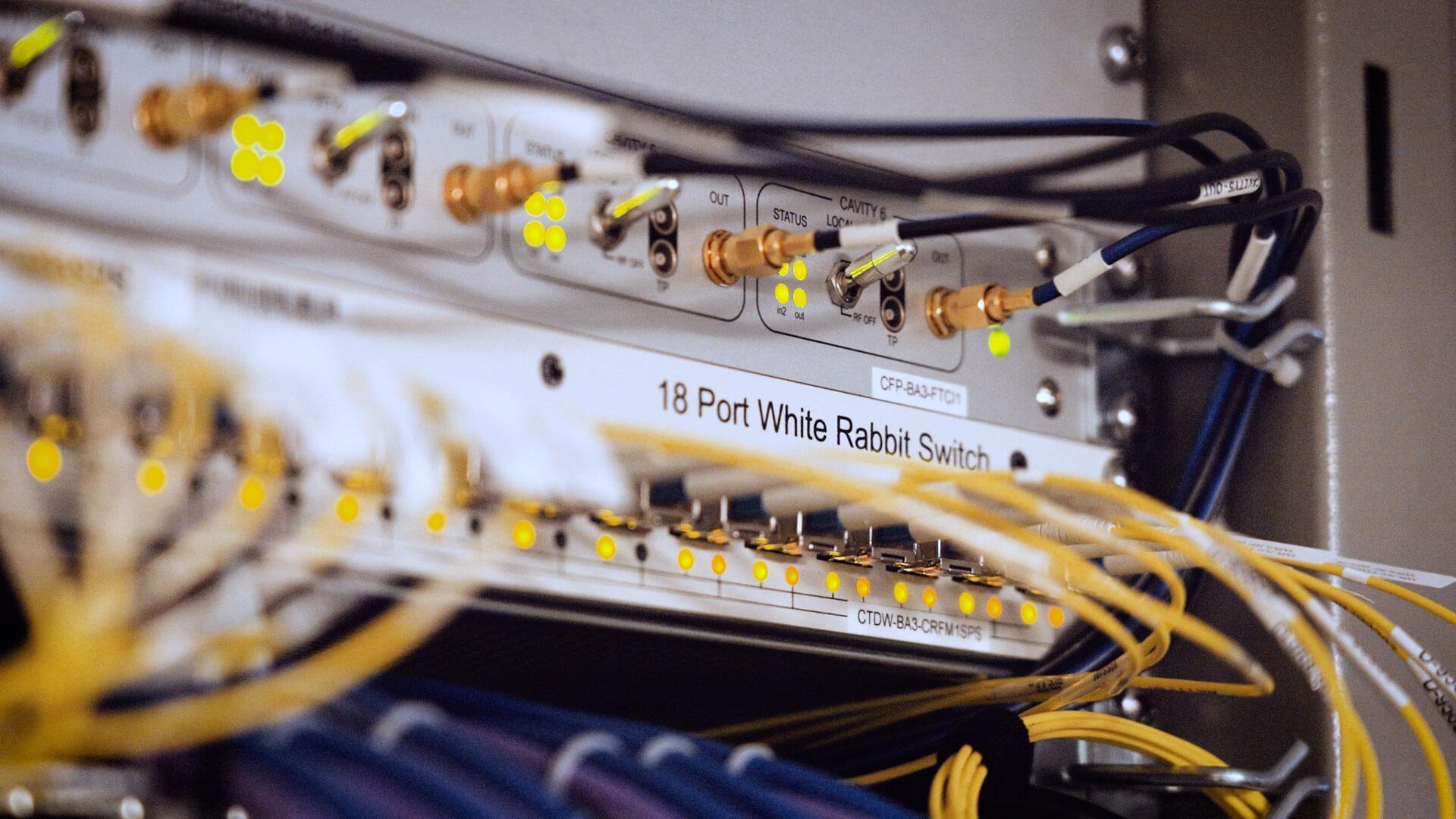

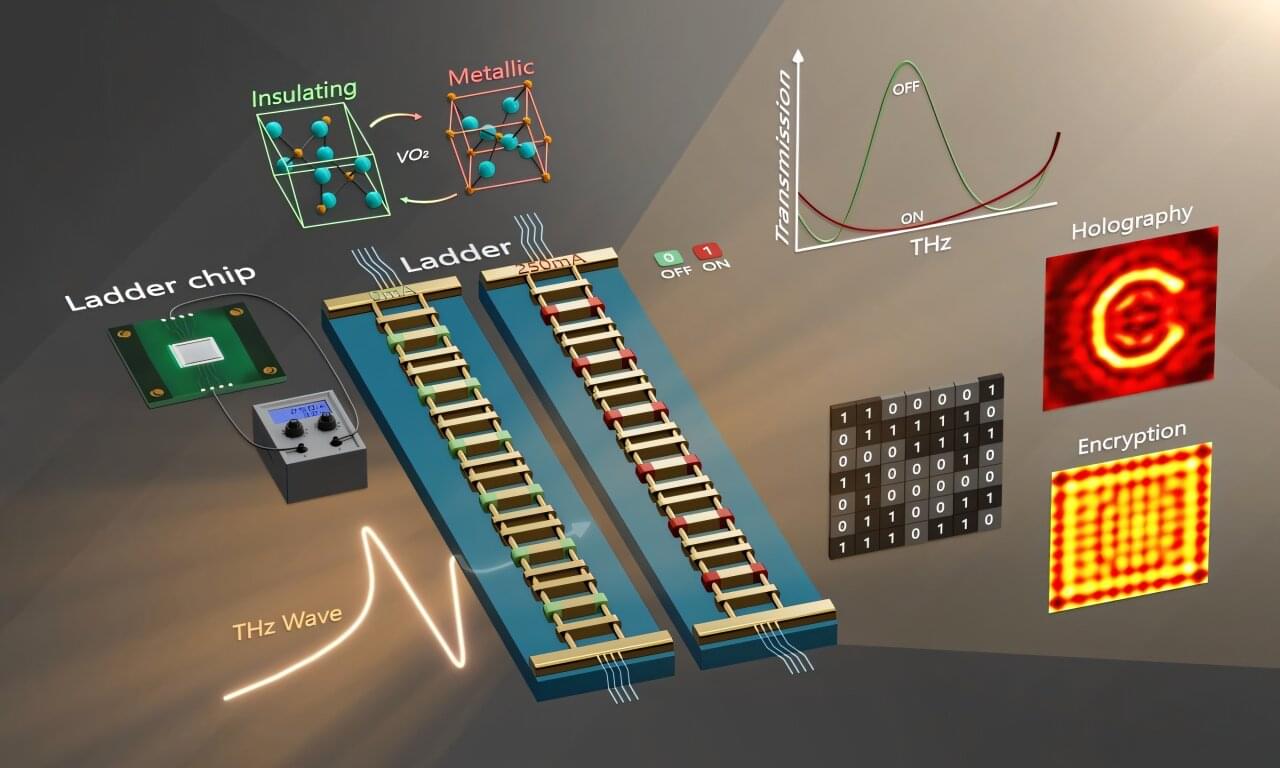

A small yet innovative experiment is taking place at CERN. Its goal is to test how the CERN-born optical timing signal—normally used in the Laboratory’s accelerators to synchronize devices with ultra-high precision—can best be sent through an optical fiber alongside a single-photon signal from a source of quantum-entangled photons. The results could pave the way for using this technique in quantum networks and quantum cryptography.

Research on quantum networks is growing rapidly worldwide. Future quantum networks could connect quantum computers and sensors without losing any quantum information. They could also enable the secure exchange of information, opening up applications across many fields.

Unlike classical networks, where information is encoded in binary bits (0s and 1s), quantum networks rely on the unique properties of quantum bits, or “qubits,” such as superposition (where a qubit can exist in a combination of 0 and 1 at the same time) and entanglement (where the states of two qubits remain correlated no matter how far apart they are).
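
For readers who want to see superposition and entanglement in concrete terms, here is a minimal Python sketch. It is a classical simulation of measurement statistics for a textbook two-qubit "Bell state", not a model of the CERN experiment. The names `BELL_STATE`, `outcome_probabilities` and `measure_both` are illustrative choices made for this sketch.

```python
import math
import random

# Amplitudes of a two-qubit state over the outcomes 00, 01, 10, 11.
# Assumed example: the Bell state (|00> + |11>) / sqrt(2), an equal
# superposition of "both qubits 0" and "both qubits 1".
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def outcome_probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(amplitude) ** 2 for amplitude in state]


def measure_both(state, rng):
    """Sample one joint measurement of both qubits in the 0/1 basis."""
    index = rng.choices(range(4), weights=outcome_probabilities(state))[0]
    return index >> 1, index & 1  # (first qubit, second qubit)


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [measure_both(BELL_STATE, rng) for _ in range(1000)]

# Each qubit on its own looks random, yet the two results always agree.
agreeing = sum(1 for first, second in samples if first == second)
print(f"{agreeing} of {len(samples)} joint measurements agree")
```

Each individual result is unpredictable, but the pair always matches. Agreement in a single measurement basis could also be produced by classical means; what sets entanglement apart is that the correlations persist across different measurement settings, which this sketch does not attempt to show.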