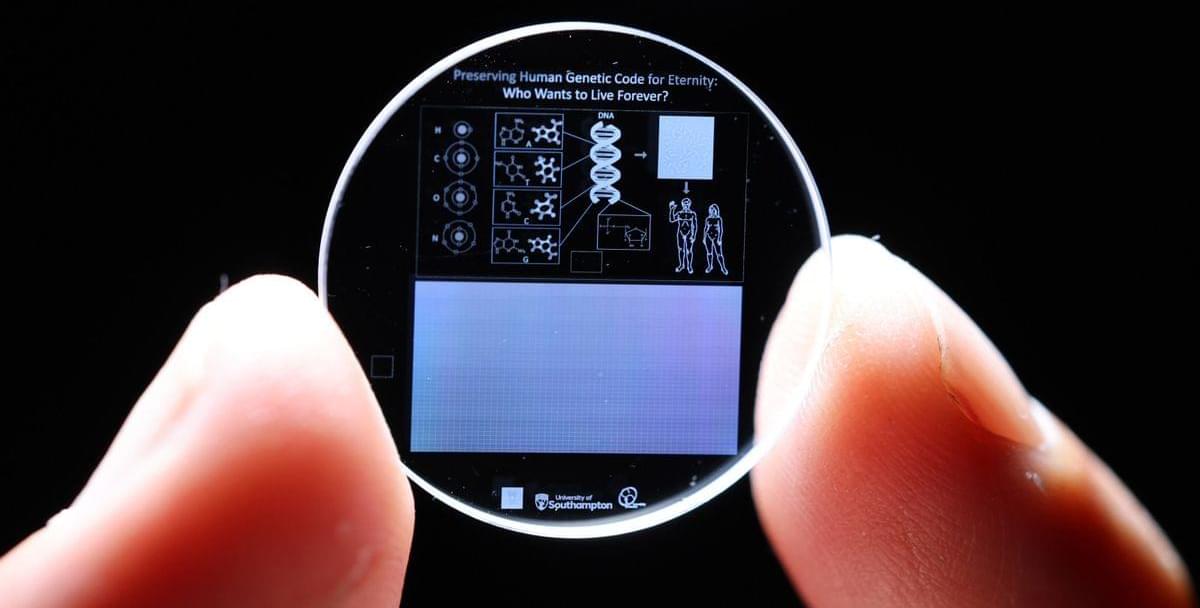

The data inscribed into the crystal is carefully annotated with universal elements like hydrogen, oxygen, carbon, and nitrogen, as well as the four DNA bases—adenine, cytosine, guanine, and thymine—that make up the genetic code. Additionally, the molecular structure of DNA and the arrangement of genes within chromosomes are depicted, offering clear instructions on how to interpret the genetic information stored within.

However, inscribing and reading 5D memory crystals requires a highly specialized skill set and advanced equipment, so those looking to re-establish the human race after an extinction event may have to rely on more traditional means.

The crystal is made from fused quartz, one of the most chemically and thermally resilient materials known on Earth. It can endure temperatures as high as 1000°C, resist direct impact forces of up to 10 tons per square centimeter, and remain unaffected by long-term exposure to cosmic radiation. The longevity and storage capacity of the 5D memory crystal earned it a Guinness World Record in 2014 for being the most durable data storage material ever created.