This is the latest Lifeboat Foundation update on our worldwide pandemic.

It is also available at https://www.facebook.com/groups/lifeboatfoundation/permalink/10158811699298455.

Key summary of this report:

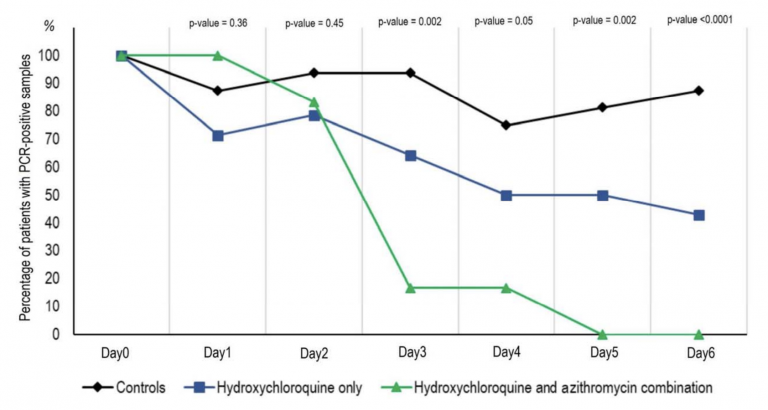

- Hydroxychloroquine, azithromycin, zinc sulfate, and massive amounts of intravenous vitamin C should reduce your chance of death by about 50% and shorten any hospital stay by about 50% as well, freeing up ventilators. This treatment should begin within 5 days of the onset of coronavirus symptoms.

- Wearing any type of mask should reduce the chance of transmitting the virus by 50%. You can reuse a mask by placing it in an oven at 170℉/77℃ for 30 minutes.

- Taking 2,000 IU per day of vitamin D should reduce your chance of infection by 50%.

- Social distancing should reduce the rate of infection by 50%.

- Ventilators are being overhyped by the media, and you should take steps to avoid being put on one, since they are associated with death rates of up to 80%.

Following the first four recommendations above should improve everyone’s chances by a factor of 16 (four reductions of about 50% each, so 2 × 2 × 2 × 2 = 16) and get this pandemic under control. (All percentages are approximate, of course.) Such recommendations would bring an end to our extreme quarantines.
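The factor of 16 comes from multiplying the four approximate 50% reductions together. Here is a minimal sketch of that arithmetic, assuming the four effects are independent and combine by simple multiplication (an assumption implied by the figure but not established here). The dictionary labels and variable names are illustrative only.

```python
from math import prod

# Approximate risk multipliers from the summary above:
# each "reduce by 50%" is treated as multiplying the risk by 0.5.
# Assumption: the four effects are independent and combine multiplicatively.
reductions = {
    "early treatment": 0.5,
    "masks": 0.5,
    "vitamin D": 0.5,
    "social distancing": 0.5,
}

remaining_risk = prod(reductions.values())  # 0.5 ** 4
improvement_factor = 1 / remaining_risk

print(f"Remaining risk: {remaining_risk:.4f}")
print(f"Improvement factor: {improvement_factor:.0f}")
```

Changing any one multiplier (say, 0.7 instead of 0.5) shows how sensitive the overall factor is to each approximate percentage.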

VENTILATORS

In an emergency, each ventilator can be shared by 2 to 8 patients. Simply sharing each ventilator between 2 patients, plus following our four recommendations, will increase the effective ventilator supply by a factor of 32 (2 × 16). Learn more at https://www.nytimes.com/2020/03/26/health/coronavirus-ventilator-sharing.html.
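The factor of 32 is the sharing ratio multiplied by the factor of 16 from the four recommendations. A rough sketch of how that figure scales across the 2-to-8 sharing range mentioned above, assuming the two effects simply multiply; the function name and its default argument are hypothetical and used only for illustration.

```python
def effective_supply_factor(patients_per_ventilator: int,
                            demand_reduction_factor: float = 16.0) -> float:
    """Return the combined increase in effective ventilator supply.

    Assumption: sharing multiplies capacity by the number of patients per
    ventilator, and the four recommendations cut demand by the given factor,
    so the two effects are multiplied together.
    """
    if not 2 <= patients_per_ventilator <= 8:
        raise ValueError("the text describes sharing by 2 to 8 patients")
    return patients_per_ventilator * demand_reduction_factor


for patients in (2, 4, 8):
    print(f"{patients} patients per ventilator: x{effective_supply_factor(patients):.0f}")
```

With 2 patients per ventilator this reproduces the factor of 32 quoted above; the other rows only show how the same arithmetic would extend to larger sharing ratios.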

Patients on ventilators are experiencing death rates as high as 80%, as discussed at https://time.com/5818547/ventilators-coronavirus. (50% is the lowest death rate being recorded anywhere.)

It is estimated that only 20–30% of coronavirus patients who are having problems breathing have the typical Acute Respiratory Distress Syndrome (ARDS) that calls for a ventilator. A CT scan will help identify such patients. Learn more at https://www.esicm.org/wp-content/uploads/2020/04/684_author-proof.pdf.

We recommend that the following be tried before a ventilator: