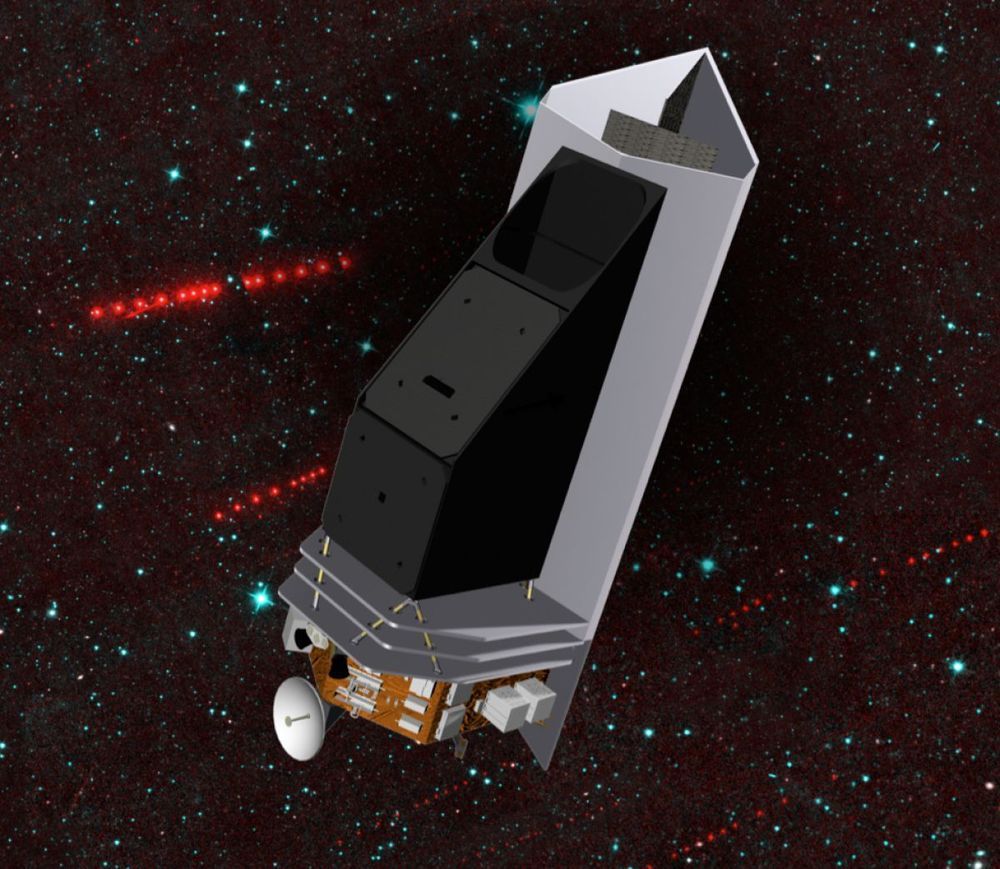

NASA is keeping a watchful eye on the mid-November close approach of a behemoth space rock that is almost half the size of Ben Nevis and hurtling towards Earth at 18,000 miles per hour.

Are we alone in the universe? It comes down to whether intelligence is a probable outcome of natural selection, or an improbable fluke. By definition, probable events occur frequently, improbable events occur rarely—or once. Our evolutionary history shows that many key adaptations—not just intelligence, but complex animals, complex cells, photosynthesis, and life itself—were unique, one-off events, and therefore highly improbable. Our evolution may have been like winning the lottery … only far less likely.

The universe is astonishingly vast. The Milky Way has more than 100 billion stars, and there are over a trillion galaxies in the visible universe, the tiny fraction of the universe we can see. Even if habitable worlds are rare, their sheer number—there are as many planets as stars, maybe more—suggests lots of life is out there. So where is everyone? This is the Fermi paradox. The universe is large, and old, with time and room for intelligence to evolve, but there’s no evidence of it.

Could intelligence simply be unlikely to evolve? Unfortunately, we can’t study extraterrestrial life to answer this question. But we can study some 4.5 billion years of Earth’s history, looking at where evolution repeats itself, or doesn’t.

Dr. Stuart Russell, a distinguished AI researcher and computer scientist at UC Berkeley, believes there is a fundamental and potentially civilization-ending shortcoming in the “standard model” of AI, which is taught (and Dr. Russell wrote the main textbook) and applied virtually everywhere. Dr. Russell’s new book, Human Compatible: Artificial Intelligence and the Problem of Control, argues that unless we re-think the building blocks of AI, the arrival of superhuman AI may become the “last event in human history.”

That may sound a bit wild-eyed, but Human Compatible is a carefully written explanation of the concepts underlying AI as well as the history of their development. If you want to understand how fast AI is developing and why the technology is so dangerous, Human Compatible is your guide, literally starting with Aristotle and closing with OpenAI Five’s Dota 2 triumph.

New interview with author and researcher Dr. Josh Mitteldorf who runs the aging research blog Aging Matters.

Dr. Josh Mitteldorf is an evolutionary biologist and a long-time contributor to the growing field of aging science. His work in this field has focused on theories of aging. He asks the basic question: why do we age and die?

Josh is the co-author of ‘Cracking the Aging Code: The New Science of Growing Old - And What It Means for Staying Young’: “A revolutionary examination of why we age, what it means for our health, and how we just might be able to fight it.”

In Cracking the Aging Code, theoretical biologist Josh Mitteldorf and award-winning writer and ecological philosopher Dorion Sagan reveal that evolution and aging are even more complex and breathtaking than we originally thought. Using meticulous multidisciplinary science, as well as reviewing the history of our understanding about evolution, this book makes the case that aging is not something that “just happens,” nor is it the result of wear and tear or a genetic inevitability. Rather, aging has a fascinating evolutionary purpose: to stabilize populations and ecosystems, which are ever-threatened by cyclic swings that can lead to extinction.

When a population grows too fast it can put itself at risk of a wholesale wipeout. Aging has evolved to help us adjust our growth in a sustainable fashion as well as prevent an ecological crisis from starvation, predation, pollution, or infection.

If you are a Lifeboat subscriber or have been reading these pages for a while, you may know why it’s called “Lifeboat”. A fundamental goal of our founder, board, writers and supporters is to sustain the environment and life in all its diversity and, if necessary (i.e. if we destroy our environment beyond repair, or face a massive incoming asteroid), to prepare for relocating. That is, to build a lifeboat, figuratively and literally.

But most of us never believed that we would face an existential crisis, except perhaps the potential for a Third World War. Yet here we are: burning the forests, killing off unspeakable numbers of species (200 each day), cooking the planet, melting the ice caps, shooting a hole in the ozone layer, and losing more land to the sea each year.

Regarding the urgent message of Greta Thunberg, I am at a loss for words. Seriously, there is not much I can add to the first video below.

Information about climate change is all around us. Everyone knows about it; most people understand that it is real and that it poses an existential threat, quite possibly in our lifetimes. In our children’s lives, it will certainly lead to war, famine, cancer, and massive loss of land, structures and money. It is already raising sea levels and killing off entire species at thousands of times the natural rate.

Yet few people, organizations or governments treat the issue with the urgency of an existential crisis. Sure, a treaty was signed, and this week Jeff Bezos committed to reducing the carbon footprint of the world’s biggest retailer. But have we moved in the right direction since the Paris Accords were signed four years ago? On the contrary, we have accelerated the pace of self-destruction.

Prof. Steve Fuller is the author of 25 books including a trilogy relating to the idea of a ‘post-’ or ‘trans-’human future, and most recently, Nietzschean Meditations: Untimely Thoughts at the Dawn of the Transhuman Age.

During this 2h 15 min interview with Steve Fuller we cover a variety of interesting topics such as: the social foundations of knowledge and our shared love of books; Transhumanism as a scientistic way of understanding who we are; the proactionary vs the precautionary principle; Pierre Teilhard de Chardin and the Omega Point; Julian and Aldous Huxley’s diverging takes on Transhumanism; David Pearce’s Hedonistic Imperative as a concept straight out of Brave New World; the concept and meaning of being human, transhuman and posthuman; humanity’s special place in the cosmos; my Socratic Test of (Artificial) Intelligence; Transhumanism as a materialist theology – i.e. religion for geeks; Elon Musk, cosmism and populating Mars; de-extinction, genetics and the sociological elements of a given species; the greatest issues that humanity is facing today; AI, the Singularity and armed conflict; morphological freedom and becoming human; longevity and the “Death is Wrong” argument; Zoltan Istvan and the Transhumanist Wager; Transhumanism as a way of entrenching rather than transcending one’s original views…

As always you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support you can write a review on iTunes, make a direct donation or become a patron on Patreon.

Nick Bostrom is a Swedish philosopher at the University of Oxford known for his work on existential risk, the anthropic principle, human enhancement ethics, superintelligence risks, and the reversal test.

This is the first Russian x-risks newsletter, which will present news about Russia and global catastrophic risks from the last 3 months.

Given its combination of high technological capabilities, poor management, high risk tolerance and attempts to catch up with the West and China in the military sphere, Russia is prone to technological catastrophes. Its rate of aviation catastrophes and car accidents is 10 times higher than that of developed countries.

Thus it seems possible that a future global catastrophe may be somehow connected with Russia. However, most of the work on global catastrophic and existential risk (x-risk) prevention and policy is happening in the West, especially in the US, UK and Sweden. Even the best policies adopted by the governments of these countries may not help if a catastrophe occurs in another country or countries.