Harmful PFAS chemicals can now be detected in many soils and bodies of water. Removing them using conventional filter techniques is costly and almost infeasible. Researchers at the Fraunhofer Institute for Interfacial Engineering and Biotechnology IGB are now successfully implementing a plasma-based technology in the AtWaPlas joint research project.

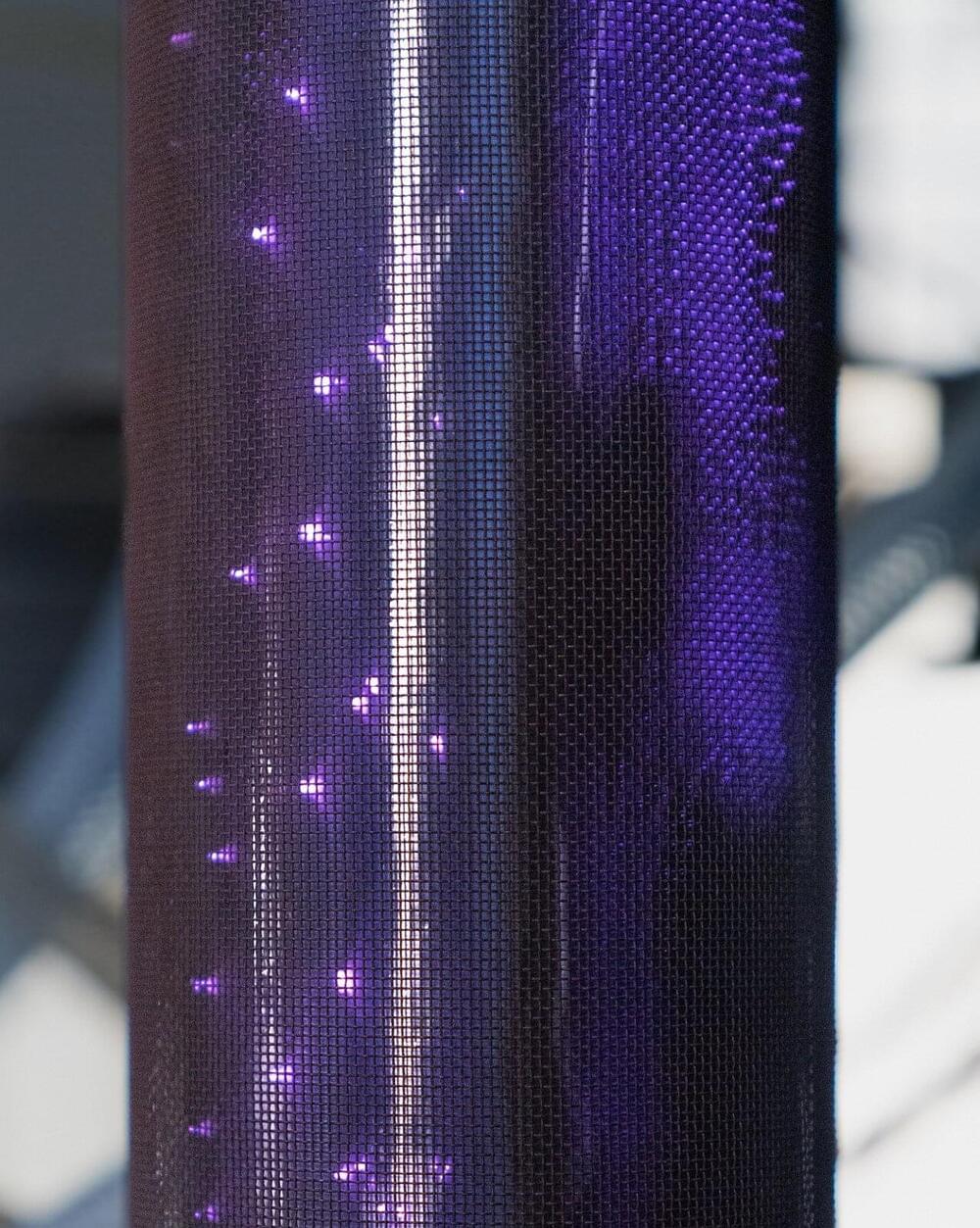

Contaminated water is fed into a combined glass and stainless steel cylinder, where it is treated with ionized gas, i.e., plasma. This breaks down the PFAS molecular chains, allowing the toxic substance to be removed at a low cost.

Per- and polyfluoroalkyl substances (PFAS) have many special properties. As they are thermally and chemically stable as well as resistant to water, grease and dirt, they can be found in a large number of everyday products: pizza boxes and baking paper are coated with them, for example, and shampoos and creams also contain PFAS. In industry, they serve as extinguishing and wetting agents, and in agriculture they are used in plant protection products.