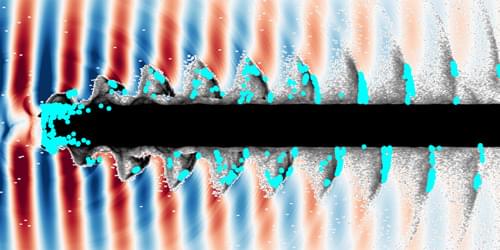

An atomically smooth surface that oscillates vertically can host drops that either bounce or hover in place, depending on the oscillation frequency.

China has launched CHIEF1300, the world’s largest centrifuge, capable of generating 300 times Earth’s gravity for research.

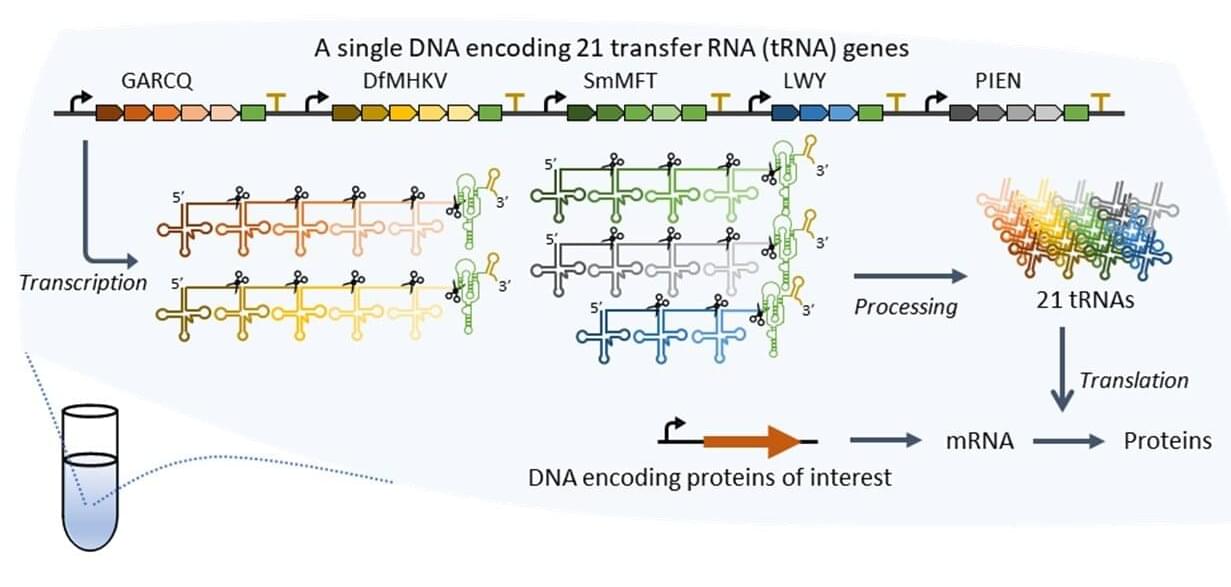

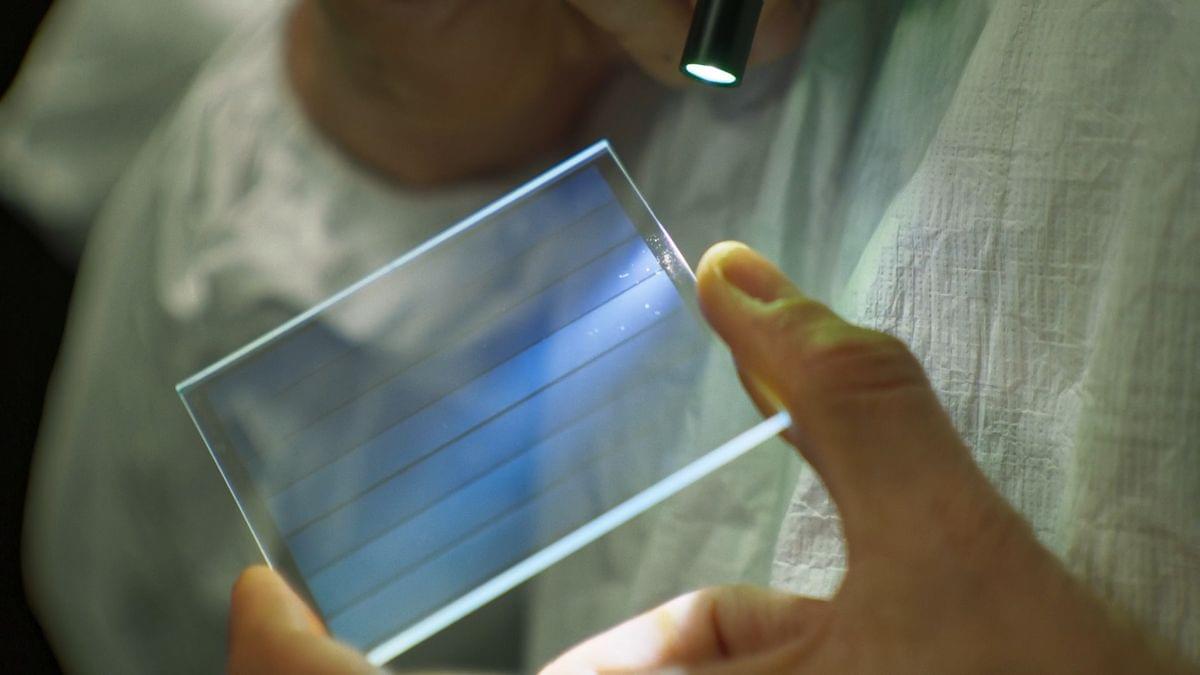

Scientists have built the first rechargeable hydride-ion battery. Hydride ions (H⁻) have drawn interest as potential charge carriers for future electrochemical devices because of their extremely low mass and high redox potential. Yet progress has been limited because no electrolyte has been able to…

A recent study found that ground shaking caused by moonquakes, not meteorite impacts, altered the terrain in the Taurus-Littrow valley, where Apollo 17 landed in 1972. The research also identified a likely source of these surface changes and, using new seismic models, evaluated the potential hazards. The results have important implications for future lunar exploration and for the development of permanent bases on the Moon.

The paper, authored by Smithsonian Senior Scientist Emeritus Thomas R. Watters and University of Maryland Associate Professor of Geology Nicholas Schmerr, was published in the journal Science Advances.