AI is shaking up the finance sector. Soon, it may be predicting financial crises before they happen. Alongside that potential, robust governance is key.

How can rapidly emerging AI develop into a trustworthy, equitable force? Proactive policies and smart governance, says Salesforce.

These initial steps ignited AI policy conversations amid accelerating innovation and technological change. Just as personal computing democratized access to the internet and to coding, fueling further technology creation, AI is the latest catalyst poised to unlock future innovations at an unprecedented pace. But with such powerful capabilities comes great responsibility: we must prioritize policies that allow us to harness AI's power while protecting against harm. To do so effectively, we must acknowledge and address the differences between enterprise and consumer AI.

Enterprise versus consumer AI

Salesforce has been actively researching and developing AI since 2014, introduced our first AI functionalities into our products in 2016, and established our office of ethical and human use of technology in 2018. Trust is our top value. That’s why our AI offerings are founded on trust, security and ethics. Like many technologies, there’s more than one use for AI. Many people are already familiar with large language models (LLMs) via consumer-facing apps like ChatGPT. Salesforce is leading the development of AI tools for businesses, and our approach differentiates between consumer-grade LLMs and what we classify as enterprise AI.

Preparation requires technical research and development, as well as adaptive, proactive governance.

Yoshua Bengio, Geoffrey Hinton, […], Andrew Yao, Dawn Song, […], Pieter Abbeel, Trevor Darrell, Yuval Noah Harari, Ya-Qin Zhang, Lan Xue, […], Shai Shalev-Shwartz, Gillian Hadfield, Jeff Clune, Tegan Maharaj, Frank Hutter, Atılım Güneş Baydin, Sheila McIlraith, Qiqi Gao, Ashwin Acharya, David Krueger, Anca Dragan, Philip Torr, Stuart Russell, Daniel Kahneman, Jan Brauner, and Sören Mindermann

Science.

Two OpenAI employees who worked on safety and governance recently resigned from the company behind ChatGPT.

Daniel Kokotajlo left last month and William Saunders departed OpenAI in February. The timing of their departures was confirmed by two people familiar with the situation. The people asked to remain anonymous in order to discuss the departures, but their identities are known to Business Insider.

Center for natural and artificial intelligence.

Chinese ambassador Chen Xu called for the high-quality development of artificial intelligence (AI), assistance in promoting children’s mental health, and protection of children’s rights while delivering a joint statement on behalf of 80 countries at the 55th session of the United Nations Human Rights Council (UNHRC) on Thursday.

Chen, China’s permanent representative to the UN Office in Geneva and other international organizations in Switzerland, said that artificial intelligence is a new field of human development and should adhere to the concept of consultation, joint construction, and shared benefits, while working together to promote the governance of artificial intelligence.

The new generation of children has become one of the main groups using and benefiting from AI technology. The joint statement emphasized the importance of children’s mental health issues.

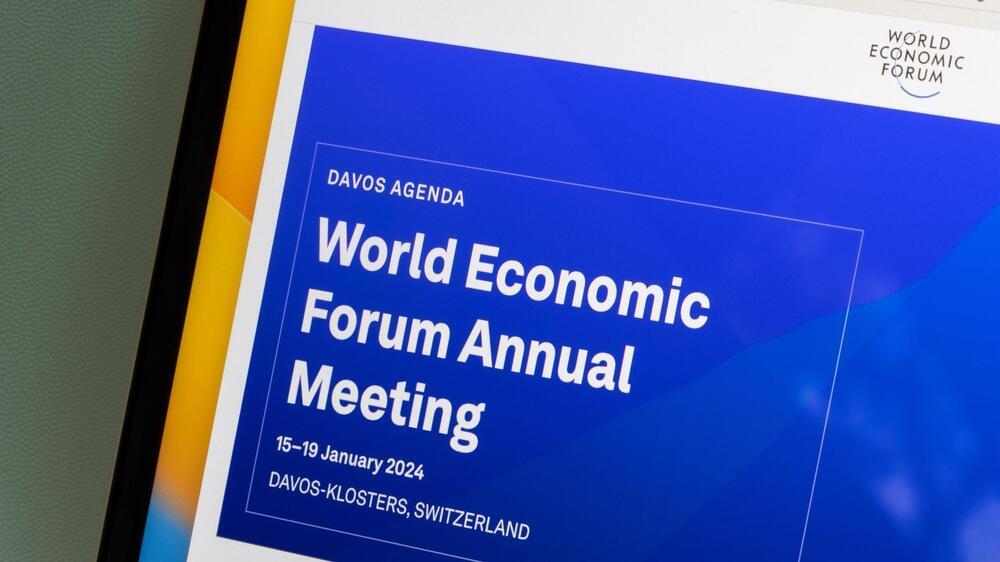

The “Beneficial AGI Summit & Unconference” is a new event organized by SingularityNET and TrueAGI in collaboration with others. The Millennium Project is one of the event's sponsors, and two of our own, Jerome Glenn, Executive Director and co-founder of The Millennium Project, and José Cordeiro, MP Board member and RIBER and Venezuela Nodes Chair, are members of its organizing committee. The Beneficial AGI Summit will take place both online and in person at the Hilton Panama in Panama City. The livestream is free; get your ticket.

The objective of the conference is to bring together leading voices in AI to take action to catalyze the emergence of beneficial AGI. Key themes of the event are: Constitution & Governance Framework, Global Brain Collective, Simulation / Gaming Environments, Scenario analysis process, and Potential scenarios (from 1 to 7).

On the first two days of the BGI Summit, Feb. 27–28, top thought leaders from around the globe will engage in comprehensive, detailed discussions of a wide range of questions regarding various approaches to AGI and their ethical, economic, psychological, political, environmental and other implications. The focus will be on discussing issues, making conceptual progress, forming collaborations, and engaging in practical actions aimed at catalyzing the emergence of beneficial AGI, based on the ideas and connections set in motion by all involved.

Just after filming this video, Sam Altman, CEO of OpenAI, published a blog post about the governance of superintelligence in which he, along with Greg Brockman and Ilya Sutskever, outlines their thinking about how the world should prepare for superintelligence. And just before filming, Geoffrey Hinton quit his job at Google so that he could voice more openly his concerns about the imminent arrival of artificial general intelligence (AGI), an AGI that could soon slip beyond our control if it became superintelligent. So the idea is moving from sci-fi speculation to plausible scenario, but how powerful will these systems be, and which concerns about super-AI are well founded? In this video I explore the ideas around superintelligence, using Nick Bostrom’s 2014 book Superintelligence and Geoffrey Hinton’s interviews as guides, to try to unpick which aspects are plausible and which are closer to speculative sci-fi. I explore the dangers, such as Eliezer Yudkowsky’s notion of a rapid ‘foom’ takeover of humanity, and also look briefly at the control problem and the alignment problem. At the end of the video I suggest how we might delay the arrival of superintelligence by withholding from the algorithms the ability to self-improve, withholding what you could call meta-level agency.

▬▬ Chapters ▬▬

00:00 - Questing for an Infinity Gauntlet
01:38 - Just human-level AGI
02:27 - Intelligence explosion
04:10 - Sparks of AGI
04:55 - Geoffrey Hinton is concerned
06:14 - What are the dangers?
10:07 - Is ‘foom’ just sci-fi?
13:07 - Implausible capabilities
14:35 - Plausible reasons for concern
15:31 - What can we do?
16:44 - Control and alignment problems
18:32 - Currently no convincing solutions
19:16 - Delay intelligence explosion
19:56 - Regulating meta-level agency

▬▬ Other videos about AI and Society ▬▬

AI wants your job | Which jobs will AI automate? | Reports by OpenAI and Goldman Sachs.


How ChatGPT Works (a non-technical explainer):