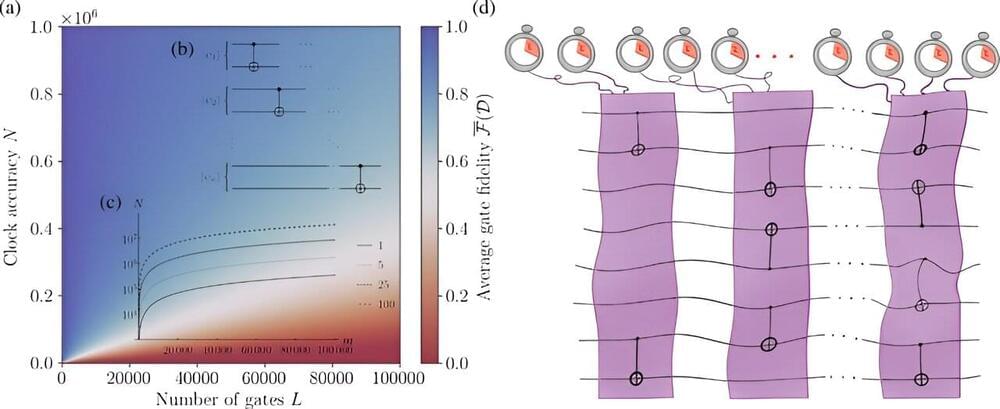

New research from a consortium of quantum physicists, led by Trinity College Dublin’s Dr. Mark Mitchison, shows that imperfect timekeeping places a fundamental limit on quantum computers and their applications. The team claims that even tiny timing errors add up to have a significant impact on any large-scale algorithm, posing another problem that must eventually be solved if quantum computers are to fulfill the lofty aspirations that society has for them.

The paper is published in the journal Physical Review Letters.

It is difficult to imagine modern life without clocks to help organize our daily schedules; with a digital clock in every person’s smartphone or watch, we take precise timekeeping for granted—although that doesn’t stop people from being late.