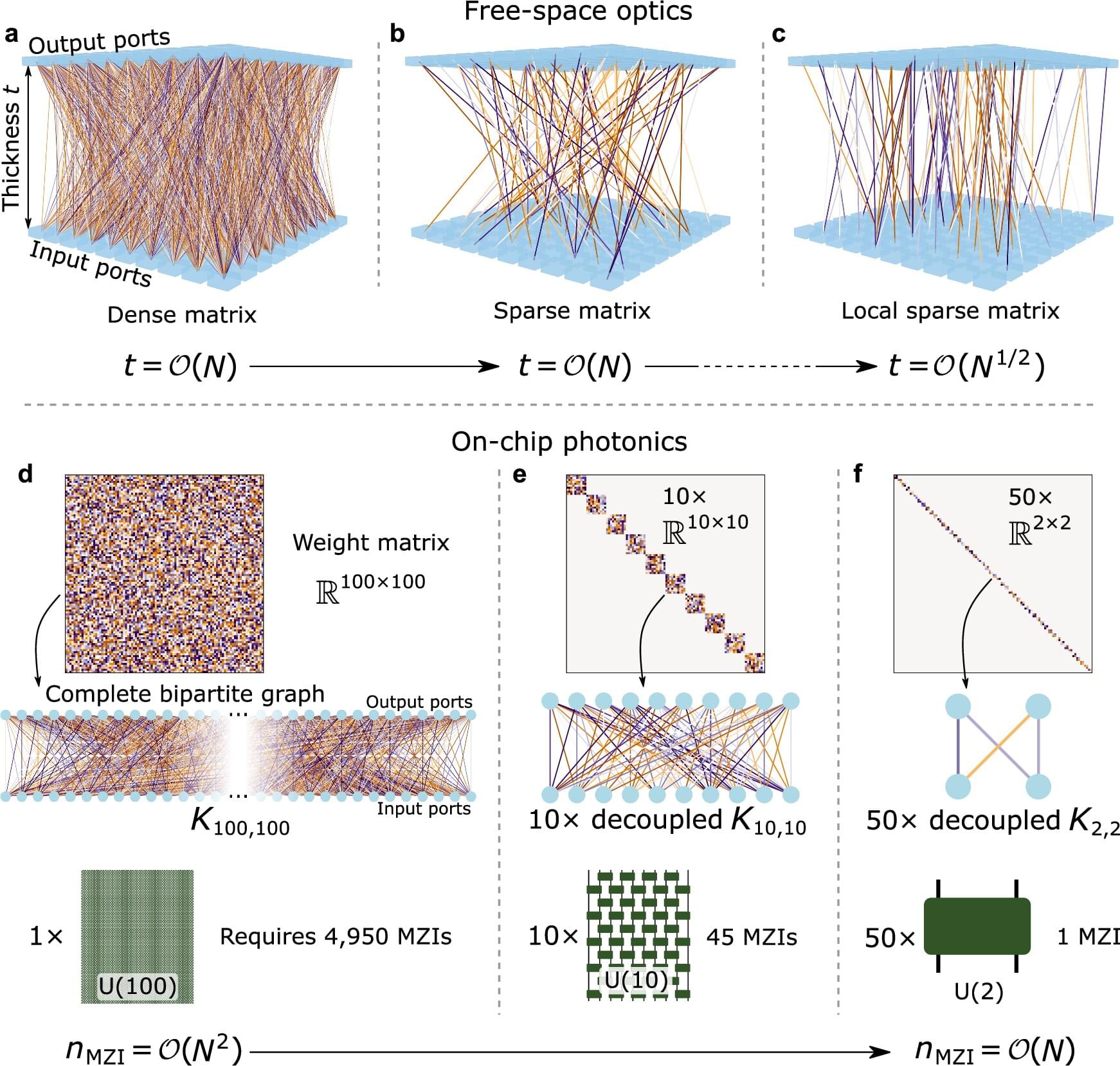

The research, published in Nature Communications, addresses one of the key challenges in engineering computers that run on light instead of electricity: making those devices small enough to be practical. Just as algorithms on digital computers require time and memory to run, light-based systems also require resources to operate, including enough physical space for light waves to propagate, interact and perform analog computation.

Lead authors Francesco Monticone, associate professor of electrical and computer engineering, and postdoctoral researcher Yandong Li, Ph.D. ’23, identified scaling laws for free-space optics and photonic circuits by analyzing how the size of these devices must grow as the tasks they perform become more complex.