To effectively tackle everyday tasks, robots should be able to detect the properties and characteristics of objects in their surroundings, so that they can grasp and manipulate them accordingly. Humans naturally achieve this using their sense of touch, and roboticists have thus been trying to provide robots with similar tactile sensing capabilities.

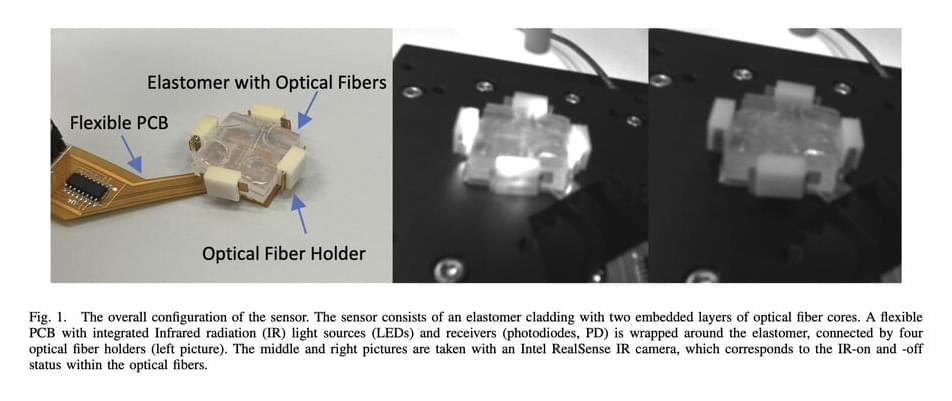

A team of researchers at the University of Hong Kong recently developed a new soft tactile sensor that could allow robots to detect different properties of the objects they are grasping. This sensor, presented in a paper pre-published on arXiv, is made up of two layers of woven optical fibers and a self-calibration algorithm.

“Although there exist many soft and conformable tactile sensors on robotic applications able to decouple the normal force and shear forces, the impact of the size of object in contact on the force calibration model has been commonly ignored,” Wentao Chen, Youcan Yan, and their colleagues wrote in their paper.
