How programmers turned the internet into a paintbrush. DALL-E 2, Midjourney, Imagen, explained.

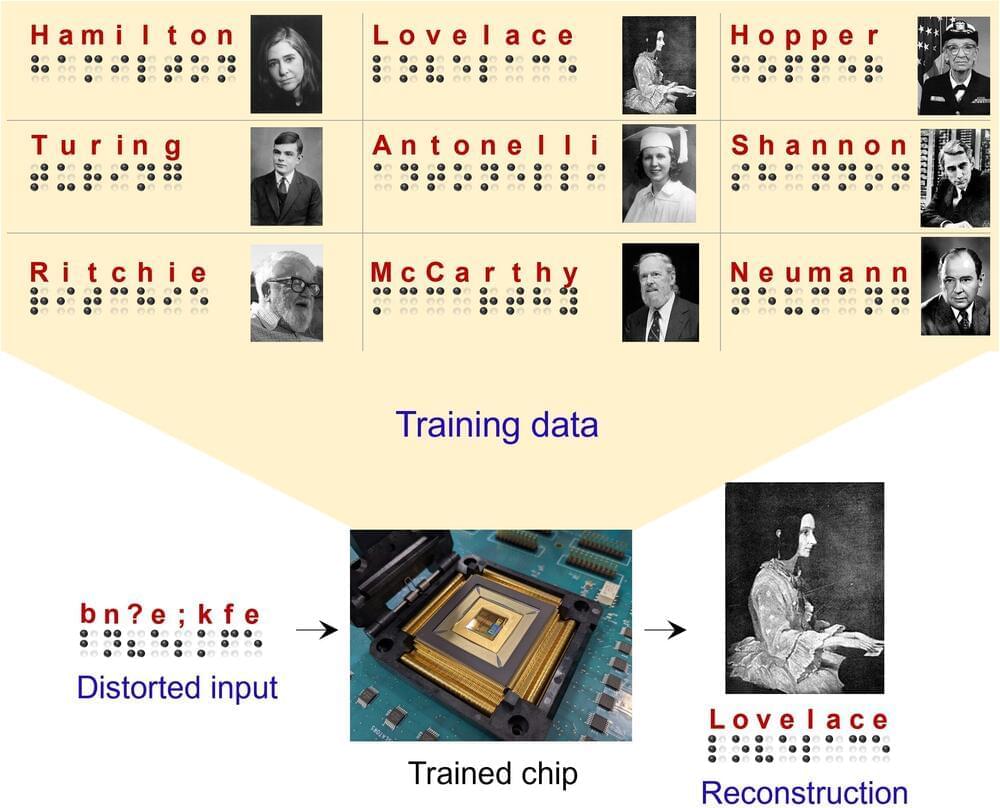

Since January 2021, advances in AI research have produced a wave of deep-learning models that can generate original images from simple text prompts, effectively extending the human imagination. Researchers at OpenAI, Google, Facebook, and elsewhere have developed text-to-image tools that they have not yet released to the public, and similar models have proliferated online in the open-source arena and at smaller companies like Midjourney.

These tools represent a massive cultural shift because they remove the requirement for technical labor from the process of image-making. Instead, they select for creative ideation, skillful use of language, and curatorial taste. The ultimate consequences are difficult to predict, but, like the invention of the camera and the digital camera after it, these algorithms herald a new, democratized form of expression that will set off another explosion in the volume of imagery humans produce. Like other automated systems trained on historical data and internet images, however, they also come with risks that have not been resolved.

The video above is a primer on how we got here, how this technology works, and some of the implications. And for an extended discussion about what this means for human artists, designers, and illustrators, check out this bonus video: https://youtu.be/sFBfrZ-N3G4

Midjourney: www.midjourney.com.