In recent years, deep learning algorithms have achieved remarkable results in a variety of fields, including artistic disciplines. Computer scientists worldwide have developed models that can create artistic works such as poems, paintings and sketches.

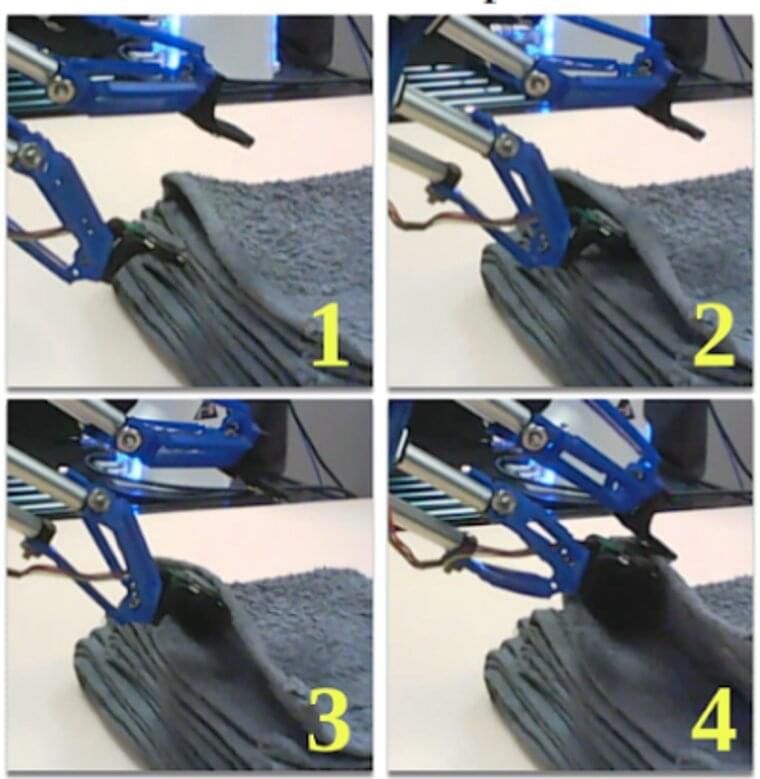

Researchers at Seoul National University have recently introduced a new deep learning framework designed to enhance the skills of a sketching robot. The framework, described in a paper presented at ICRA 2022 and pre-published on arXiv, allows a sketching robot to learn stroke-based rendering and motor control simultaneously.

“The primary motivation for our research was to make something cool with non-rule-based mechanisms such as deep learning; we thought drawing is a cool thing to show if the drawing performer is a learned robot instead of human,” Ganghun Lee, the first author of the paper, told TechXplore. “Recent deep learning techniques have shown astonishing results in the artistic area, but most of them are about generative models which yield whole pixel outcomes at once.”