A computer algorithm can use a technique called “ghost imaging” to reconstruct, from a person’s brainwaves, objects that the person cannot see themselves.

Text-to-image generation is the hot algorithmic process right now, with Craiyon (formerly DALL-E mini) and Google’s Imagen AIs unleashing tidal waves of wonderfully weird procedurally generated art synthesized from human and computer imaginations. On Tuesday, Meta revealed that it too has developed an AI image generation engine, one that it hopes will help to build immersive worlds in the Metaverse and create high digital art.

A lot of work goes into creating an image based on just the phrase, “there’s a horse in the hospital,” when using a generative AI. First the phrase itself is fed through a transformer model, a neural network that parses the words of the sentence and develops a contextual understanding of their relationship to one another. Once it gets the gist of what the user is describing, the AI will synthesize a new image using a set of GANs (generative adversarial networks).
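The “contextual understanding” step can be illustrated with scaled dot-product self-attention, the core operation inside a transformer. This is only a minimal sketch: the three-dimensional embeddings are made-up numbers, the learned query, key and value projections of a real model are replaced by the identity, and none of the names below come from any of the systems mentioned above.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    """Scaled dot-product self-attention with identity projections.

    Each token's output is a weighted average of all token vectors,
    weighted by how similar the tokens are to one another.
    """
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        # Similarity of this token to every token, scaled by sqrt(dim)
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)
        # Blend all token vectors according to the attention weights
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings, invented purely for illustration
TOKENS = ["horse", "in", "hospital"]
EMBEDDINGS = [[1.0, 0.2, 0.0], [0.1, 0.1, 0.1], [0.8, 0.0, 0.6]]

contextual, attention_weights = self_attention(EMBEDDINGS)
for token, weights in zip(TOKENS, attention_weights):
    print(token, [round(w, 3) for w in weights])
```

With these toy vectors, “horse” attends more strongly to “hospital” than to “in”, which is the kind of word-to-word relationship the paragraph above describes.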

Thanks to efforts in recent years to train ML models on increasingly expansive, high-definition image sets with well-curated text descriptions, today’s state-of-the-art AIs can create photorealistic images of almost whatever nonsense you feed them. The specific creation process differs between AIs.

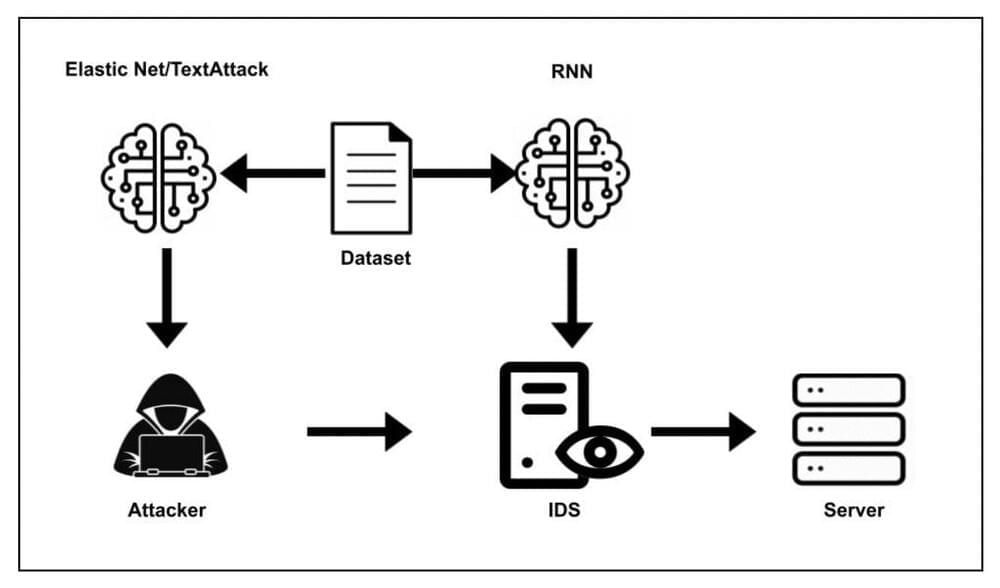

Deep learning techniques have recently proved to be highly promising for detecting cybersecurity attacks and determining their nature. Concurrently, many cybercriminals have been devising new attacks aimed at interfering with the functioning of various deep learning tools, including those for image classification and natural language processing.

Perhaps the most common among these attacks are adversarial attacks, which are designed to “fool” deep learning algorithms using data that has been modified, prompting them to classify it incorrectly. This can lead to the malfunctioning of many applications, biometric systems, and other technologies that operate through deep learning algorithms.

Several past studies have shown the effectiveness of different adversarial attacks in prompting deep neural networks (DNNs) to make unreliable and false predictions. These attacks include the Carlini & Wagner attack, the DeepFool attack, the fast gradient sign method (FGSM) and the Elastic-Net attack (ENA).
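Of the attacks listed, FGSM is the simplest to write down: it nudges every input feature by a small amount epsilon in the direction that increases the model's loss. The sketch below applies one FGSM step to a tiny logistic-regression classifier rather than a deep network. The weights, input and epsilon are hypothetical values chosen only so the effect is visible; they are not taken from any study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def fgsm(weights, bias, x, label, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(dLoss/dx).

    For binary cross-entropy on logistic regression, the gradient of
    the loss with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    gradient = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

# Hypothetical model and input, invented for illustration
WEIGHTS = [2.0, -1.5, 0.5]
BIAS = 0.1
EPSILON = 0.4
x = [0.6, 0.2, 0.4]
label = 1

x_adv = fgsm(WEIGHTS, BIAS, x, label, EPSILON)
print("clean prediction:      ", round(predict(WEIGHTS, BIAS, x), 3))
print("adversarial prediction:", round(predict(WEIGHTS, BIAS, x_adv), 3))
```

No feature moves by more than epsilon, yet the predicted class flips from 1 to 0. Against a deep network the same step would use the gradient obtained by backpropagation instead of the closed-form expression used here.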

A new computer algorithm developed by the University of Toronto’s Parham Aarabi can store and recall information strategically—just like our brains.

The associate professor in the Edward S. Rogers Sr. department of electrical and computer engineering, in the Faculty of Applied Science & Engineering, has also created an experimental tool that leverages the new algorithm to help people with memory loss.

“Most people think of AI as more robot than human,” says Aarabi, whose framework is explored in a paper being presented this week at the IEEE Engineering in Medicine and Biology Society Conference in Glasgow. “I think that needs to change.”

EPFL researchers have used swarms of drones to measure city traffic with unprecedented accuracy and precision. Algorithms are then used to identify sources of traffic jams and recommend solutions to alleviate traffic problems.

Given the wealth of modern technology available (roadside cameras, big-data algorithms, Bluetooth and RFID connections, and smartphones in every pocket), transportation engineers should be able to accurately measure and forecast city traffic. However, current tools tend to show the symptom but systematically fail to find the root cause, let alone fix it. Researchers at EPFL use a drone-based monitoring tool that overcomes many of these problems.

“They provide excellent visibility, can cover large areas and are relatively affordable. What’s more, they offer greater precision than GPS technology and eliminate the behavioral biases that occur when people know they’re being watched. And we use drones in a way that protects people’s identities,” says Manos Barmpounakis, a post-doc researcher at EPFL’s Urban Transport Systems Laboratory (LUTS).

NVIDIA introduces QODA, a new platform for hybrid quantum-classical computing, enabling easy programming of integrated CPU, GPU, and QPU systems.

The past decade has seen quantum computing leap out of academic labs into the mainstream. Efforts to build better quantum computers proliferate at both startups and large companies. And while it is still unclear how far away we are from achieving quantum advantage on common problems, it is clear that now is the time to build the tools needed to deliver valuable quantum applications.

To start, we need to make progress in our understanding of quantum algorithms. Last year, NVIDIA announced cuQuantum, a software development kit (SDK) for accelerating simulations of quantum computing. Simulating quantum circuits using cuQuantum on GPUs enables algorithms research with performance and scale far beyond what can be achieved on quantum processing units (QPUs) today. This is paving the way for breakthroughs in understanding how to make the most of quantum computers.
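To give a sense of what simulating a quantum circuit involves, here is a minimal state-vector simulator in plain Python that prepares a two-qubit Bell state. It does not use cuQuantum or QODA, and the function names are hypothetical; it only illustrates the kind of linear algebra that such simulators carry out at far larger scale.

```python
import math

def apply_single_qubit_gate(state, gate, target):
    """Apply a 2x2 gate to the target qubit of a state vector."""
    new_state = [0j] * len(state)
    mask = 1 << target
    for index, amplitude in enumerate(state):
        bit = (index >> target) & 1
        for out_bit in (0, 1):
            # Same basis state, but with the target bit set to out_bit
            out_index = (index & ~mask) | (out_bit << target)
            new_state[out_index] += gate[out_bit][bit] * amplitude
    return new_state

def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is 1."""
    new_state = list(state)
    for index in range(len(state)):
        if (index >> control) & 1:
            new_state[index ^ (1 << target)] = state[index]
    return new_state

HADAMARD = [
    [1 / math.sqrt(2), 1 / math.sqrt(2)],
    [1 / math.sqrt(2), -1 / math.sqrt(2)],
]

N_QUBITS = 2
state = [0j] * (2 ** N_QUBITS)
state[0] = 1 + 0j  # start in |00>

state = apply_single_qubit_gate(state, HADAMARD, target=0)
state = apply_cnot(state, control=0, target=1)

probabilities = [abs(amplitude) ** 2 for amplitude in state]
for index, p in enumerate(probabilities):
    print(format(index, "02b"), round(p, 3))
```

The state vector holds 2^n complex amplitudes for n qubits, so memory and compute grow exponentially with circuit width, which is why this workload is a natural fit for GPU acceleration.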

In addition to improving quantum algorithms, we also need to use QPUs to their fullest potential alongside classical computing resources: CPUs and GPUs. Today, NVIDIA is announcing the launch of Quantum Optimized Device Architecture (QODA), a platform for hybrid quantum-classical computing with the mission of enabling this utility.

TensorFlow.NET is a library that provides a .NET Standard binding for TensorFlow. It allows .NET developers to design, train and implement machine learning algorithms, including neural networks. TensorFlow.NET also allows us to leverage various machine learning models and access the programming resources offered by TensorFlow.

TensorFlow

TensorFlow is an open-source framework developed by Google scientists and engineers for numerical computing. It is composed of a set of tools for designing, training and fine-tuning neural networks. TensorFlow’s flexible architecture makes it possible to deploy calculations on one or more processors (CPUs) or graphics cards (GPUs) on a personal computer or server without rewriting code.

Machine learning is transforming all areas of biological science and industry, but is typically limited to a few users and scenarios. A team of researchers at the Max Planck Institute for Terrestrial Microbiology led by Tobias Erb has developed METIS, a modular software system for optimizing biological systems. The research team demonstrates its usability and versatility with a variety of biological examples.

Engineering biological systems is indispensable in biotechnology and synthetic biology, and machine learning has become useful in all fields of biology. However, applying and improving algorithms (computational procedures made of lists of instructions) is not easily accessible. Researchers are limited not only by programming skills but often also by insufficient experimentally labeled data. At the intersection of computational and experimental work, there is a need for efficient approaches to bridge the gap between machine learning algorithms and their applications to biological systems.

Now a team at the Max Planck Institute for Terrestrial Microbiology led by Tobias Erb has succeeded in democratizing machine learning. In their recent publication in Nature Communications, the team, together with collaboration partners from the INRAe Institute in Paris, presented their tool METIS. The application is built on such a versatile and modular architecture that it does not require computational skills and can be applied to different biological systems and with different lab equipment. METIS is short for Machine-learning guided Experimental Trials for Improvement of Systems and is also named after the ancient goddess of wisdom and crafts Μῆτις, or “wise counsel.”

A Swedish researcher tasked an AI algorithm to write an academic paper about itself. The paper is now undergoing a peer-review process.

Almira Osmanovic Thunstrom has said she “stood in awe” as OpenAI’s artificial intelligence algorithm, GPT-3, started generating a text for a 500-word thesis about itself, complete with scientific references and citations.

“It looked like any other introduction to a fairly good scientific publication,” she said in an editorial piece published by Scientific American. Thunstrom then asked her adviser at the University of Gothenburg, Steinn Steingrimsson, whether she should take the experiment further and try to complete and submit the paper to a peer-reviewed journal.

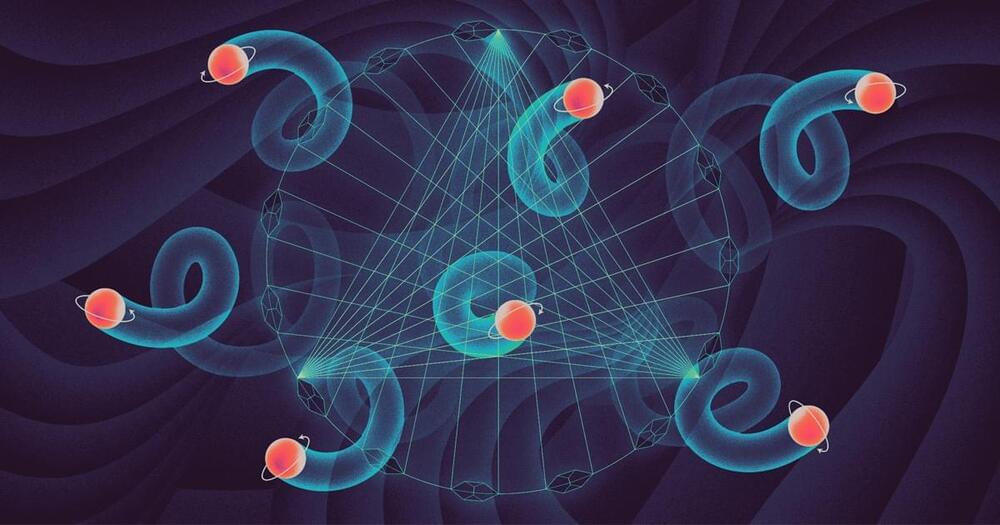

But Cabello and others are interested in investigating a lesser-known but equally magical aspect of quantum mechanics: contextuality. Contextuality says that properties of particles, such as their position or polarization, exist only within the context of a measurement. Instead of thinking of particles’ properties as having fixed values, consider them more like words in language, whose meanings can change depending on the context: “Time flies like an arrow. Fruit flies like bananas.”
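A standard textbook illustration of contextuality, not mentioned in the article itself, is the Peres-Mermin square: nine two-qubit observables arranged in a 3x3 grid, each with outcomes +1 or -1. Quantum mechanics fixes the product of the outcomes along every row to +1 and along the columns to +1, +1 and -1. The sketch below, with hypothetical names, checks by brute force whether fixed, context-independent values could ever satisfy all six constraints.

```python
from itertools import product

# Cell indices 0..8 of the 3x3 square, grouped into measurement contexts
ROWS = [(0, 1, 2), (3, 4, 5), (6, 7, 8)]
COLUMNS = [(0, 3, 6), (1, 4, 7), (2, 5, 8)]

# Products of outcomes that quantum mechanics requires in each context
ROW_PRODUCTS = [1, 1, 1]
COLUMN_PRODUCTS = [1, 1, -1]

def satisfies(values, contexts, required):
    """Check that the outcomes in every context multiply to the required value."""
    return all(
        values[a] * values[b] * values[c] == target
        for (a, b, c), target in zip(contexts, required)
    )

def count_noncontextual_assignments():
    """Count fixed +1/-1 assignments compatible with all six constraints."""
    count = 0
    for values in product((1, -1), repeat=9):
        if satisfies(values, ROWS, ROW_PRODUCTS) and satisfies(
            values, COLUMNS, COLUMN_PRODUCTS
        ):
            count += 1
    return count

print(count_noncontextual_assignments())
```

None of the 512 candidate assignments passes. The row constraints force the product of all nine values to be +1 while the column constraints force it to be -1, so the outcomes cannot be values the observables carry independently of which row or column they are measured with.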

Although contextuality has lived in nonlocality’s shadow for over 50 years, quantum physicists now consider it more of a hallmark feature of quantum systems than nonlocality is. A single particle, for instance, is a quantum system “in which you cannot even think about nonlocality,” since the particle is only in one location, said Bárbara Amaral, a physicist at the University of São Paulo in Brazil. “So [contextuality] is more general in some sense, and I think this is important to really understand the power of quantum systems and to go deeper into why quantum theory is the way it is.”

Researchers have also found tantalizing links between contextuality and problems that quantum computers can efficiently solve that ordinary computers cannot; investigating these links could help guide researchers in developing new quantum computing approaches and algorithms.