Architecture and construction have always been, rather quietly, at the bleeding edge of tech and materials trends. It's no surprise, then, to find a project at a renowned technical university like ETH Zurich using AI and robotics in a new approach to these arts. The automated design and construction methods its researchers are experimenting with hint at how homes and offices might be built a decade from now.

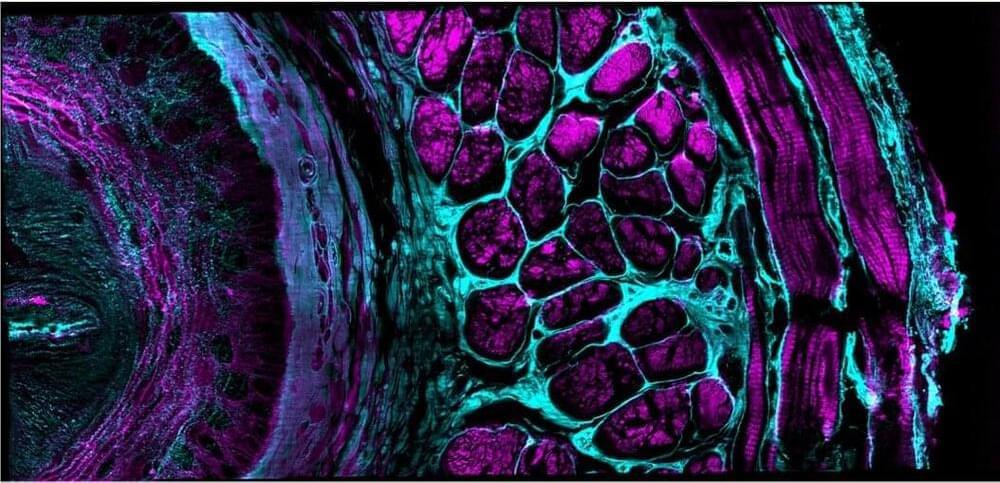

The project is a sort of huge sculptural planter: a set of "hanging gardens" inspired by the legendary structures of the ancient city of Babylon. (Incidentally, it was my ancestor, Robert Koldewey, who excavated, or looted, depending on your view, the city's famous Ishtar Gate.)

Begun in 2019, Semiramis (named after the legendary queen of Babylon) is a collaboration between human and AI designers. The general idea came, of course, from its human creators, architecture professors Fabio Gramazio and Matthias Kohler. But the design itself was produced by feeding the basic requirements, such as size, watering needs and construction style, through a set of computer models and machine learning algorithms.