Anything that prevents the next big data leak is good news.

“We’re going to get these massive pools of sequenced genomic data,” Metzl said. “The real gold will come from comparing people’s sequenced genomes to their electronic health records, and ultimately their life records.” Getting people comfortable with allowing open access to their data will be another matter; Metzl mentioned that Luna DNA and others have strategies to help people feel at ease consenting to share their private information. But this is where China’s lack of privacy protection could end up being a significant advantage.

To compare genotypes and phenotypes at scale—first millions, then hundreds of millions, then eventually billions, Metzl said—we’re going to need AI and big data analytic tools, and algorithms far beyond what we have now. These tools will let us move from precision medicine to predictive medicine, knowing precisely when and where different diseases are going to occur and shutting them down before they start.

But, Metzl said, “As we unlock the genetics of ourselves, it’s not going to be about just healthcare. It’s ultimately going to be about who and what we are as humans. It’s going to be about identity.”

The constant figures in other situations, making physicists wonder why. Why does nature insist on this number? It has appeared in various calculations in physics since the 1880s, spurring numerous attempts to come up with a Grand Unified Theory that would incorporate it. So far, no single explanation has taken hold. Recent research has also introduced the possibility that the constant has actually increased over the last six billion years, albeit slightly. If you’d like to know the math behind the fine-structure constant more specifically, the way you arrive at alpha is by putting the three constants h, c, and e together in one equation. Because the units of c, e, and h cancel each other out, the result is a pure, dimensionless number.

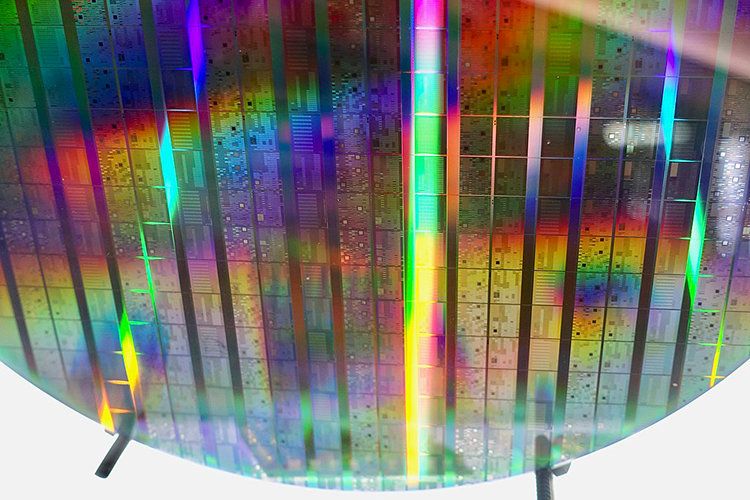
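As a rough illustration of how h, c, and e combine, here is a minimal Python sketch. It assumes the SI form of the relation, alpha = e² / (2 · ε₀ · h · c), which also needs the vacuum permittivity ε₀ (not mentioned in the text above). The numerical values are the CODATA 2018 recommended ones, and the function name is purely illustrative.

```python
# CODATA 2018 values in SI units (assumption: SI form of the relation).
ELEMENTARY_CHARGE = 1.602176634e-19      # e, in coulombs (exact by definition)
PLANCK_CONSTANT = 6.62607015e-34         # h, in joule-seconds (exact by definition)
SPEED_OF_LIGHT = 299_792_458.0           # c, in metres per second (exact by definition)
VACUUM_PERMITTIVITY = 8.8541878128e-12   # epsilon_0, in farads per metre (measured)


def fine_structure_constant() -> float:
    """Return alpha = e^2 / (2 * epsilon_0 * h * c), a unitless number."""
    numerator = ELEMENTARY_CHARGE ** 2
    denominator = 2.0 * VACUUM_PERMITTIVITY * PLANCK_CONSTANT * SPEED_OF_LIGHT
    return numerator / denominator


if __name__ == "__main__":
    alpha = fine_structure_constant()
    print(f"alpha   = {alpha:.10f}")
    print(f"1/alpha = {1.0 / alpha:.6f}")
```

All the units (coulombs, joule-seconds, metres per second, farads per metre) cancel in the ratio, which is why the result is the same pure number, close to 1/137, in any system of units.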

As multiple research groups around the world race to build a scalable quantum computer, questions remain about how the achievement of quantum supremacy will be verified.

Quantum supremacy is the term that describes a quantum computer’s ability to solve a computational task that would be prohibitively difficult for any classical algorithm. It is considered a critical milestone in quantum computing, but because the very nature of quantum activity defies traditional corroboration, there have been parallel efforts to find a way to prove that quantum supremacy has been achieved.

Researchers at the University of California, Berkeley, have just weighed in by giving a leading practical proposal known as random circuit sampling (RCS) a qualified seal of approval, with the weight of complexity-theoretic evidence behind it. Random circuit sampling is the technique Google has put forward to prove whether or not it has achieved quantum supremacy with a 72-qubit computer chip called Bristlecone, unveiled earlier this year.

That will require widening the range of places where AI is done. The vast majority of experts are in North America, Europe and Asia. Africa, in particular, is barely represented. Such lack of diversity can entrench unintended algorithmic biases and build discrimination into AI products. And that’s not the only gap: fewer African AI researchers and engineers means fewer opportunities to use AI to improve the lives of Africans. The research community is also missing out on talented individuals simply because they have not received the right education.

If AI is to improve lives and reduce inequalities, we must build expertise beyond the present-day centres of innovation, says Moustapha Cisse.

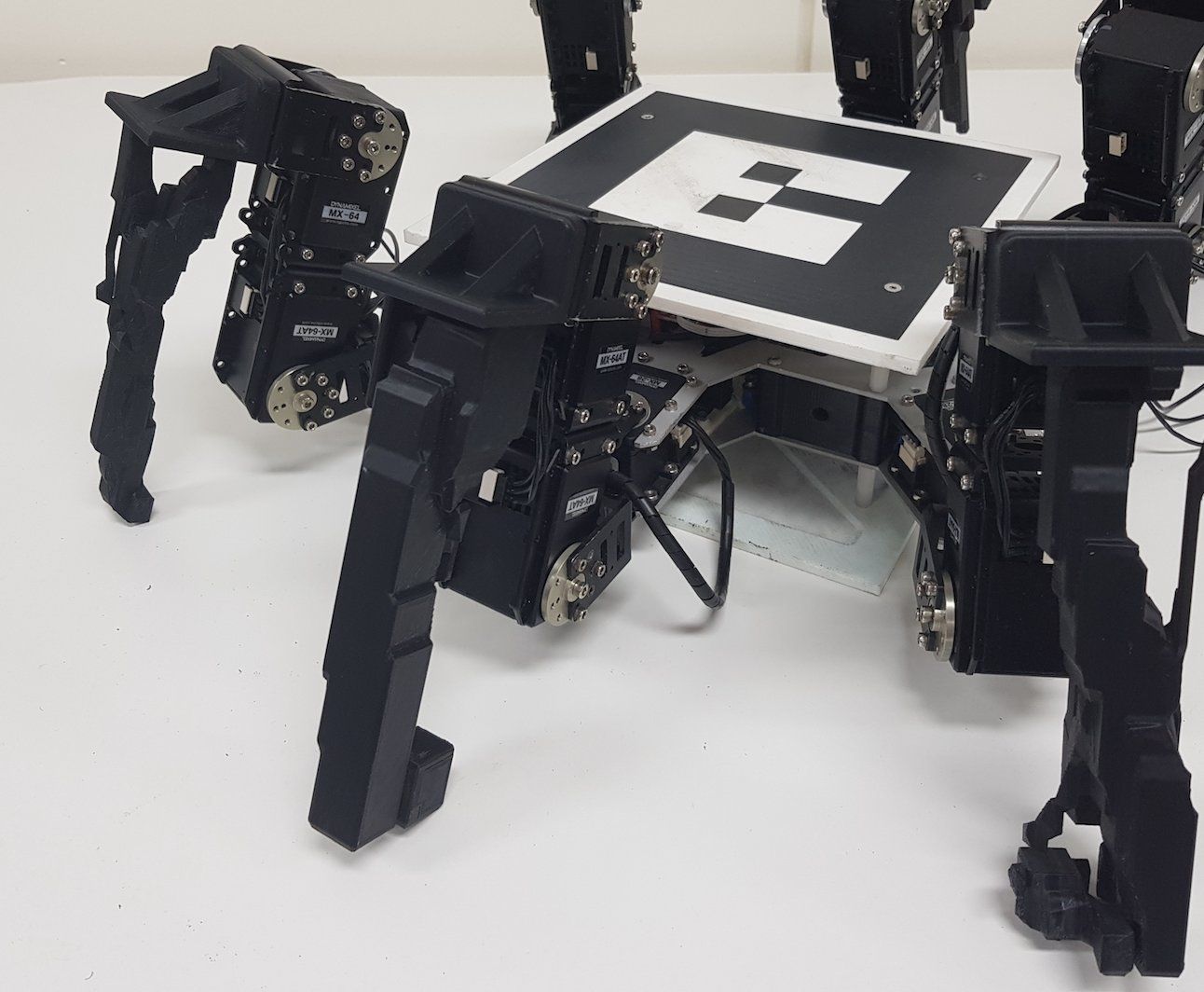

Researchers at CSIRO and Queensland University of Technology have recently carried out a study aimed at automatically evolving the physical structure of robots to enhance their performance in different environments. This project, funded by CSIRO’s Active Integrated Matter Future Science Platform, was conceived by David Howard, research scientist at Data61’s Robotics and Autonomous Systems Group (RASG).

“RASG focuses on field robotics, which means we need our robots to go out into remote places and conduct missions in adverse, difficult environmental conditions,” David Howard told Tech Xplore. “The research came about through an identified opportunity, as RASG makes extensive use of 3D printing to build and customise our robots. This research demonstrates a design algorithm that can automatically generate 3D printable components so that our robots are better equipped to function in different environments.”

The main objective of the study was to generate components automatically that can improve a robot’s environment-specific performance, with minimal constraints on what these components look like. The researchers particularly focused on the legs of a hexapod (6-legged) robot, which can be deployed in a variety of environments, including industrial settings, rainforests, and beaches.

Despite the simplicity of their visual system, fruit flies are able to reliably distinguish between individuals based on sight alone. This is a task that even humans who spend their whole lives studying Drosophila melanogaster struggle with. Researchers have now built a neural network that mimics the fruit fly’s visual system and can distinguish and re-identify flies. This may allow the thousands of labs worldwide that use fruit flies as a model organism to do more longitudinal work, looking at how individual flies change over time. It also provides evidence that the humble fruit fly’s vision is clearer than previously thought.

In an interdisciplinary project, researchers at the University of Guelph and the University of Toronto Mississauga combined expertise in fruit fly biology with machine learning to build a biologically based algorithm that churns through low-resolution videos of fruit flies. The goal was to test whether it is physically possible for a system with such constraints to accomplish such a difficult task.

Fruit flies have small compound eyes that take in a limited amount of visual information, an estimated 29 units squared (Fig. 1A). The traditional view has been that once the image is processed by a fruit fly, it is only able to distinguish very broad features (Fig. 1B). But a recent discovery that fruit flies can boost their effective resolution with subtle biological tricks (Fig. 1C) has led researchers to believe that vision could contribute significantly to the social lives of flies. This, combined with the discovery that the structure of their visual system looks a lot like a Deep Convolutional Network (DCN), led the team to ask: “can we model a fly brain that can identify individuals?”

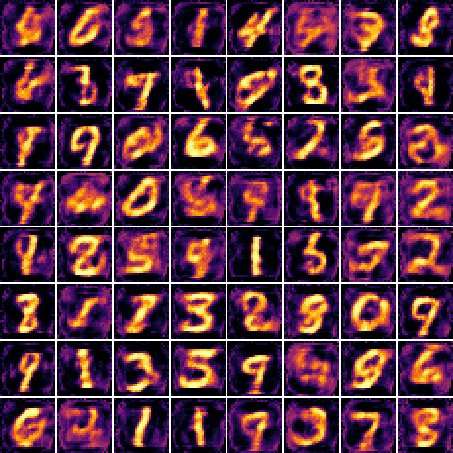

Researchers at the Research Center for IT Innovation of Academia Sinica, in Taiwan, have recently developed a novel generative adversarial network (GAN) that has binary neurons at the output layer of the generator. This model, presented in a paper pre-published on arXiv, can directly generate binary-valued predictions at test time.

So far, GAN approaches have achieved remarkable results in modeling continuous distributions. Applying GANs to discrete data, however, has proved more challenging, particularly because it is difficult to optimize the model distribution toward the target data distribution in a high-dimensional discrete space.

Hao-Wen Dong, one of the researchers who carried out the study, told Tech Xplore, “I am currently working on music generation in the Music and AI Lab at Academia Sinica. In my opinion, composing can be interpreted as a series of decisions—for instance, regarding the instrumentation, chords and even the exact notes to use. To move toward achieving the grand vision of a solid AI composer, I am particularly interested in whether deep generative models such as GANs are able to make decisions. Therefore, this work examined whether we can train a GAN that uses binary neurons to make binary decisions using backpropagation, the standard training algorithm.”
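To make the idea of a binary neuron trained with backpropagation more concrete, here is a minimal Python sketch. The article does not say how gradients pass through the non-differentiable thresholding step, so the straight-through estimator used here is an assumption (a common workaround), and all function names are hypothetical rather than taken from the researchers' code.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def deterministic_binary_neuron(x: float) -> int:
    """Output 1 when sigmoid(x) exceeds 0.5 (that is, when x > 0), else 0."""
    return 1 if sigmoid(x) > 0.5 else 0


def stochastic_binary_neuron(x: float, rng: random.Random) -> int:
    """Output 1 with probability sigmoid(x), else 0 (Bernoulli sampling)."""
    return 1 if rng.random() < sigmoid(x) else 0


def straight_through_grad(x: float, upstream_grad: float) -> float:
    """Backward pass under the assumed straight-through estimator.

    The hard threshold has zero gradient almost everywhere, so the
    binarization is treated as the identity and only the derivative of
    the sigmoid, s * (1 - s), is propagated.
    """
    s = sigmoid(x)
    return upstream_grad * s * (1.0 - s)


if __name__ == "__main__":
    rng = random.Random(0)
    for pre_activation in (-2.0, 0.5, 2.0):
        print(
            pre_activation,
            deterministic_binary_neuron(pre_activation),
            stochastic_binary_neuron(pre_activation, rng),
            straight_through_grad(pre_activation, upstream_grad=1.0),
        )
```

The forward pass always emits a hard 0 or 1, so the output stays binary at test time, while the backward pass substitutes a smooth gradient so that ordinary backpropagation can still update the weights.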

Many A.I. experts are concerned that Facebook, Google and a few other big companies are hoarding talent in the field. The internet giants also control the massive troves of online data that are necessary to train and refine the best machine learning programs.

Several start-ups hope to use the technology introduced by Bitcoin to give broader access to the data and algorithms behind artificial intelligence.