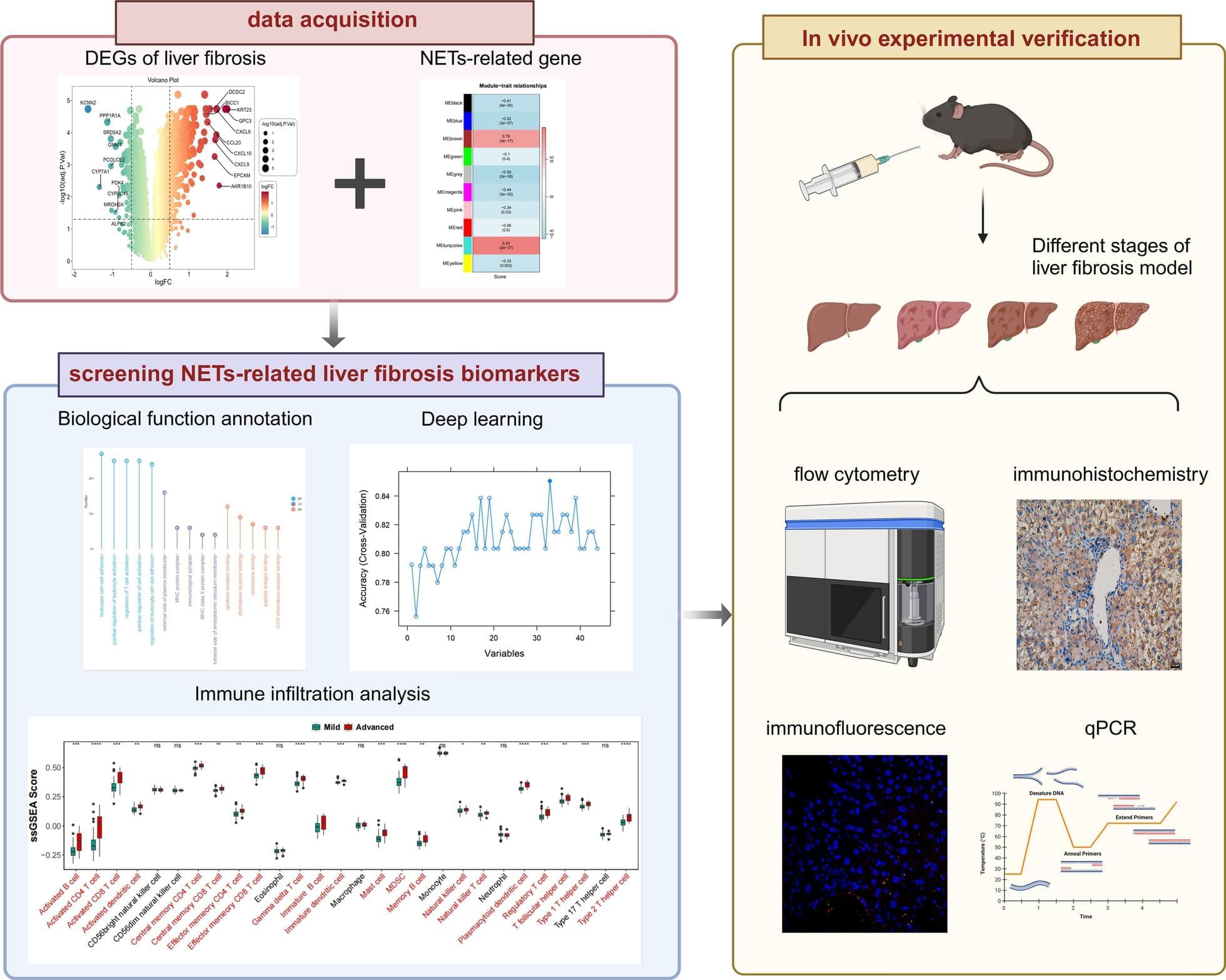

The diagnostic and therapeutic potential of neutrophil extracellular traps (NETs) in liver fibrosis (LF) has not been fully explored. We aimed to screen and validate NETs-related biomarkers of liver fibrosis using machine learning.

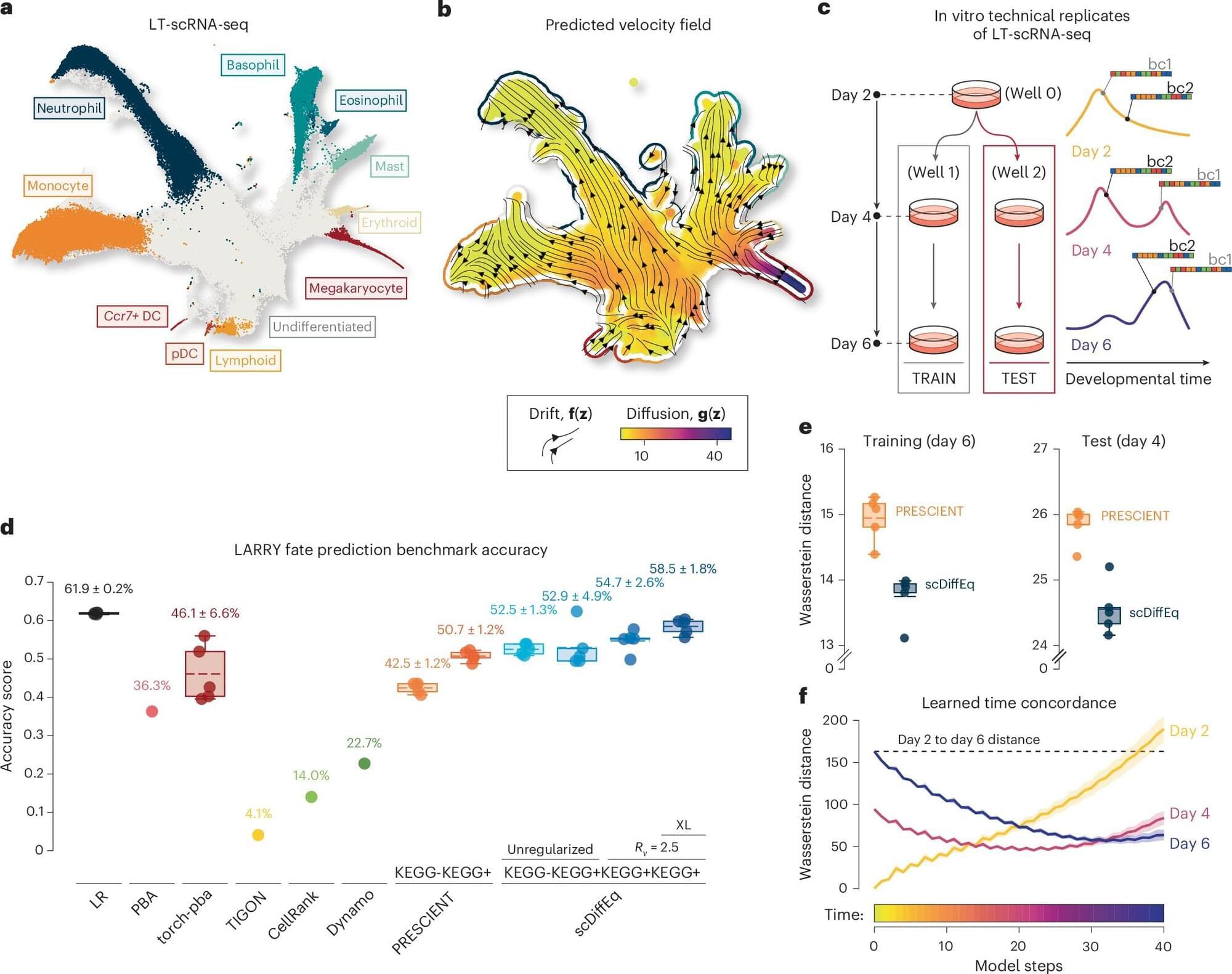

To obtain NETs-related differentially expressed genes (NETs-DEGs), differential expression analysis and weighted gene co-expression network analysis (WGCNA) were performed on the GEO datasets (GSE84044, GSE49541) and the NETs dataset. Enrichment analysis and protein-protein interaction analysis were used to identify candidate genes and potential mechanisms of NETs-related liver fibrosis. Biomarkers were screened using the SVM-RFE and Boruta machine learning algorithms, followed by immune infiltration analysis. A multi-stage mouse model of fibrosis was constructed, and neutrophil infiltration, NETs accumulation and NETs-related biomarkers were characterized by immunohistochemistry, immunofluorescence, flow cytometry and qPCR. Finally, the molecular regulatory network of the biomarkers and potential drugs targeting them were predicted.
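The screening logic can be illustrated with a minimal Python sketch. It assumes that candidate genes are taken as the overlap of the gene lists produced by each analysis, and that a biomarker must be selected by both SVM-RFE and Boruta; the text does not state either combination rule, so both are assumptions. The helper `consensus_genes` and all gene symbols are hypothetical placeholders, not results from the study.

```python
from typing import Iterable, List, Set


def consensus_genes(*gene_sets: Iterable[str]) -> List[str]:
    """Return the genes present in every input collection, sorted by symbol."""
    sets: List[Set[str]] = [set(genes) for genes in gene_sets]
    if not sets:
        return []
    return sorted(set.intersection(*sets))


# Placeholder symbols only; these are NOT genes reported in the study.
degs = {"GENE_A", "GENE_B", "GENE_C", "GENE_D"}          # differential analysis
wgcna_module = {"GENE_B", "GENE_C", "GENE_D", "GENE_E"}  # key WGCNA module
nets_genes = {"GENE_C", "GENE_D", "GENE_F"}              # NETs gene list

# Assumed rule: NETs-DEGs are the genes shared by all three lists.
nets_degs = consensus_genes(degs, wgcna_module, nets_genes)

# Assumed rule: a biomarker must be kept by both feature-selection methods.
svm_rfe_selected = {"GENE_C", "GENE_D"}
boruta_selected = {"GENE_D", "GENE_F"}
biomarkers = consensus_genes(svm_rfe_selected, boruta_selected)

print(nets_degs)   # ['GENE_C', 'GENE_D']
print(biomarkers)  # ['GENE_D']
```

Requiring agreement between two independent selectors is a conservative choice: it trades sensitivity for a shorter, more robust candidate list.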

A total of 166 NETs-DEGs were identified. Enrichment analysis showed that these genes were mainly enriched in the chemokine signaling pathway and the cytokine-cytokine receptor interaction pathway. Machine learning identified CCL2 as a NETs-related liver fibrosis biomarker; CCL2 was associated with ribosome-related processes, cell cycle regulation and allograft rejection pathways. Immune infiltration analysis showed significant differences in 22 immune cell subtypes between fibrotic and healthy samples, including neutrophils, the cells mainly responsible for NETs production. In vivo experiments showed that neutrophil infiltration, NETs accumulation and CCL2 levels all increased during fibrosis. Based on CCL2, a total of 5 miRNAs, 2 lncRNAs, 20 functionally related genes and 6 potential drugs were identified.