Published in Plant Phenomics. Click the link to read the full article for free:

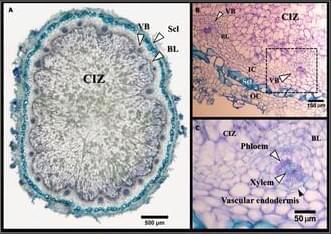

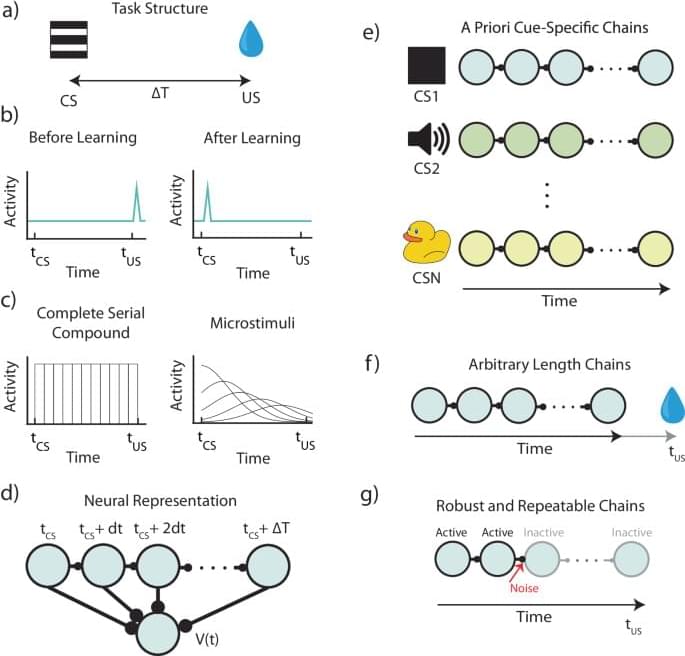

The efficiency of N₂-fixation in legume–rhizobia symbiosis is a function of root nodule activity. Nodules consist of 2 functionally important tissues: (a) a central infected zone (CIZ), colonized by rhizobia, which serves as the site of N₂-fixation, and (b) vascular bundles (VBs), which serve as conduits for the transport of water, nutrients, and fixed nitrogen compounds between the nodules and the plant. A quantitative evaluation of these tissues is essential to unravel their functional importance in N₂-fixation. Employing synchrotron-based x-ray microcomputed tomography (SR-μCT) at submicron resolutions, we obtained high-quality tomograms of fresh soybean root nodules in a non-invasive manner. A semi-automated segmentation algorithm was used to generate 3-dimensional (3D) models of the CIZ and VBs inside the root nodule, and the volumes of both tissues were quantified from the reconstructed 3D structures. Furthermore, synchrotron x-ray fluorescence imaging revealed a distinctive localization of Fe within CIZ tissue and Zn within VBs, allowing these tissues to be visualized in 2 dimensions. This study represents a pioneering application of the SR-μCT technique for volumetric quantification of CIZ and VB tissues in fresh, intact soybean root nodules. The proposed methods enable the use of root nodule anatomical features as novel traits in breeding, with the aim of enhancing N₂-fixation through improved root nodule activity.
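To make the volume quantification step concrete, here is a minimal sketch of how tissue volumes can be derived from a segmented tomogram: count the voxels assigned to each tissue and multiply by the volume of one voxel. This is not the article's segmentation algorithm. The label convention (0 = background, 1 = CIZ, 2 = VB), the function name `tissue_volumes`, and the 0.5 μm voxel size are all assumptions chosen for illustration, and the toy array stands in for a real labeled SR-μCT volume.

```python
import numpy as np

# Hypothetical label convention for a segmented tomogram (not from the article).
BACKGROUND, CIZ, VB = 0, 1, 2


def tissue_volumes(labels, voxel_size_um):
    """Return the volume (cubic micrometres) of each labeled tissue.

    labels        -- 3D integer array, one tissue label per voxel
    voxel_size_um -- edge length of an isotropic voxel in micrometres
    """
    voxel_volume = voxel_size_um ** 3
    return {
        "CIZ": int(np.count_nonzero(labels == CIZ)) * voxel_volume,
        "VB": int(np.count_nonzero(labels == VB)) * voxel_volume,
    }


# Toy 4 x 4 x 4 volume standing in for a real segmentation result.
labels = np.zeros((4, 4, 4), dtype=np.uint8)
labels[1:3, 1:3, 1:3] = CIZ  # 8 voxels of central infected zone
labels[0, 0, :] = VB         # 4 voxels of vascular bundle

# Assumed isotropic voxel size of 0.5 micrometres.
volumes = tissue_volumes(labels, voxel_size_um=0.5)
print(volumes)
```

With real data, the same counting approach would also give the ratio of VB to CIZ volume per nodule, which is the kind of anatomical trait the study proposes for breeding.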